This is the multi-page printable view of this section. Click here to print.

Project

- 1: Platform 101: Conceptual Onboarding

- 1.1: Introduction

- 1.2: Edge Developer Framework

- 1.3: Platform Engineering aka Platforming

- 1.4: Orchestrators

- 1.5: CNOE

- 1.6: CNOE Showtime

- 1.7: Conclusio

- 1.8:

- 2: Bootstrapping Infrastructure

- 3: Plan in 2024

- 3.1: Workstreams

- 3.1.1: Fundamentals

- 3.1.1.1: Activity 'Platform Definition'

- 3.1.1.2: Activity 'CI/CD Definition'

- 3.1.2: POCs

- 3.1.2.1: Activity 'CNOE Investigation'

- 3.1.2.2: Activity 'SIA Asset Golden Path Development'

- 3.1.2.3: Activity 'Kratix Investigation'

- 3.1.3: Deployment

- 3.1.3.1: Activity 'Forgejo'

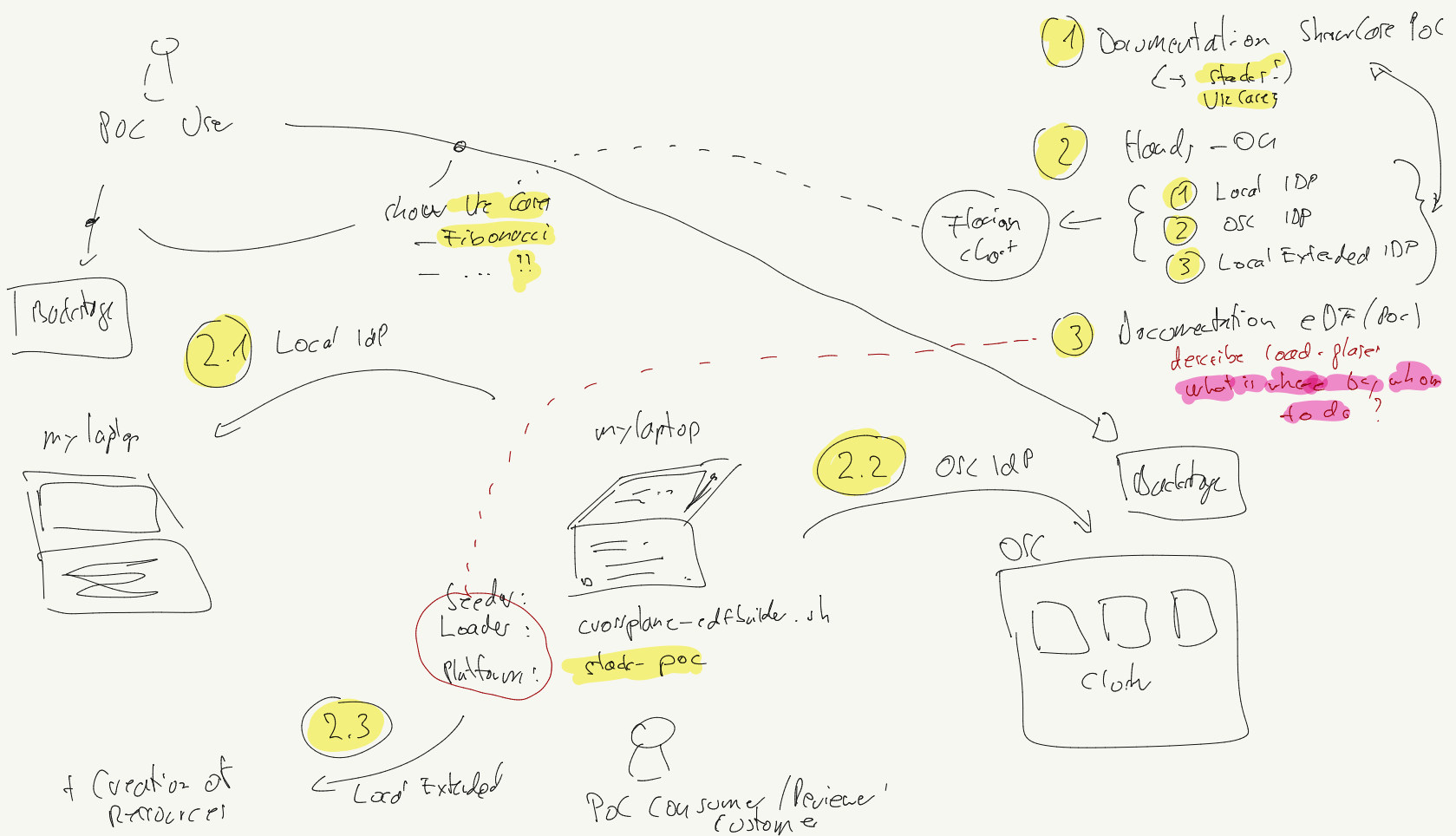

- 3.2: PoC Structure

- 4: Stakeholder Workshop Intro

- 5: Team and Work Structure

- 6:

1 - Platform 101: Conceptual Onboarding

1.1 - Introduction

Summary

This onboarding section is for you when are new to IPCEI-CIS subproject ‘Edge Developer Framework (EDF)’ and you want to know about

- its context to ‘Platform Engineering’

- and why we think it’s the stuff we need to care about in the EDF

Storyline of our current project plan (2024)

- We have the ‘Edge Developer Framework’

- We think the solution for EDF is in relation to ‘Platforming’ (Digital Platforms)

- The next evolution after DevOps

- Gartner predicts 80% of SWE companies to have platforms in 2026

- Platforms have a history since roundabout 2019

- CNCF has a working group which created capabilities and a maturity model

- Platforms evolve - nowadys there are Platform Orchestrators

- Humanitec set up a Reference Architecture

- There is this ‘Orchestrator’ thing - declaratively describe, customize and change platforms!

- Mapping our assumptions to the CNOE solution

- CNOE is a hot candidate to help and fulfill our platform building

- CNOE aims to embrace change and customization!

- Showtime CNOE

Please challenge this story!

Please do not think this story and the underlying assumptions are carved in stone!

- Don’t miss to further investigate and truely understand EDF specification needs

- Don’t miss to further investigate and truely understand Platform capabilities on top of DevOps

- Don’t miss to further investigate and truely understand Platform orchestration

- Don’t miss to further investigate and truely understand specific orchestratiing solutions like CNOE

Your role as ‘Framework Engineer’ in the Domain Architecture

Pls be aware of the the following domain and task structure of our mission:

1.2 - Edge Developer Framework

Summary

The ‘Edge Developer Framework’ is both the project and the product we are working for. Out of the leading ‘Portfolio Document’ we derive requirements which are ought to be fulfilled by Platform Engineering.

This is our claim!

What are the specifications we know from the IPCEI-CIS Project Portfolio document

Reference: IPCEI-CIS Project Portfolio Version 5.9, November 17, 2023

DTAG´s IPCEI-CIS Project Portfolio (p.12)

e. Development of DTAG/TSI Edge Developer Framework

- Goal: All developed innovations must be accessible to developer communities in a highly user-friendly and easy way

Development of DTAG/TSI Edge Developer Framework (p.14)

| capability | major novelties | ||

|---|---|---|---|

| e.1. Edge Developer full service framework (SDK + day1 +day2 support for edge installations) | Adaptive CI/CD pipelines for heterogeneous edge environments | Decentralized and self healing deployment and management | edge-driven monitoring and analytics |

| e.2. Built-in sustainability optimization in Edge developer framework | sustainability optimized edge-aware CI/CD tooling | sustainability-optimized configuration management | sustainability-optimized efficient deployment strategies |

| e.3. Sustainable-edge management-optimized user interface for edge developers | sustainability optimized User Interface | Ai-Enabled intelligent experience | AI/ML-based automated user experience testing and optimization |

DTAG objectives & contributions (p.27)

DTAG will also focus on developing an easy-to-use Edge Developer framework for software developers to manage the whole lifecycle of edge applications, i.e. for day-0-, day-1- and up to day-2- operations. With this DTAG will strongly enable the ecosystem building for the entire IPCEI-CIS edge to cloud continuum and ensure openness and accessibility for anyone or any company to make use and further build on the edge to cloud continuum. Providing the use of the tool framework via an open-source approach will further reduce entry barriers and enhance the openness and accessibility for anyone or any organization (see innovations e.).

WP Deliverables (p.170)

e.1 Edge developer full-service framework

This tool set and related best practices and guidelines will adapt, enhance and further innovate DevOps principles and their related, necessary supporting technologies according to the specific requirements and constraints associated with edge or edge cloud development, in order to keep the healthy and balanced innovation path on both sides, the (software) development side and the operations side in the field of DevOps.

What comes next?

Next we’ll see how these requirements seem to be fulfilled by platforms!

1.3 - Platform Engineering aka Platforming

Summary

Since 2010 we have DevOps. This brings increasing delivery speed and efficiency at scale. But next we got high ‘cognitive loads’ for developers and production congestion due to engineering lifecycle complexity. So we need on top of DevOps an instrumentation to ensure and enforce speed, quality, security in modern, cloud native software development. This instrumentation is called ‘golden paths’ in intenal develoepr platforms (IDP).

History of Platform Engineering

Let’s start with a look into the history of platform engineering. A good starting point is Humanitec, as they nowadays are one of the biggest players (’the market leader in IDPs.’) in platform engineering.

They create lots of beautiful articles and insights, their own platform products and basic concepts for the platform architecture (we’ll meet this later on!).

Further nice reference to the raise of platforms

- What we call a Platform

- Platforms and the cloud native connectionwhat-platform-engineering-and-why-do-we-need-it#why_we_need_platform_engineering

- Platforms and microservices

- Platforms in the product perspective

Benefit of Platform Engineering, Capabilities

In The Evolution of Platform Engineering the interconnection inbetween DevOps, Cloud Native, and the Rise of Platform Engineering is nicely presented and summarizes:

Platform engineering can be broadly defined as the discipline of designing and building toolchains and workflows that enable self-service capabilities for software engineering organizations

When looking at these ‘capabilities’, we have CNCF itself:

CNCF Working group / White paper defines layerwed capabilities

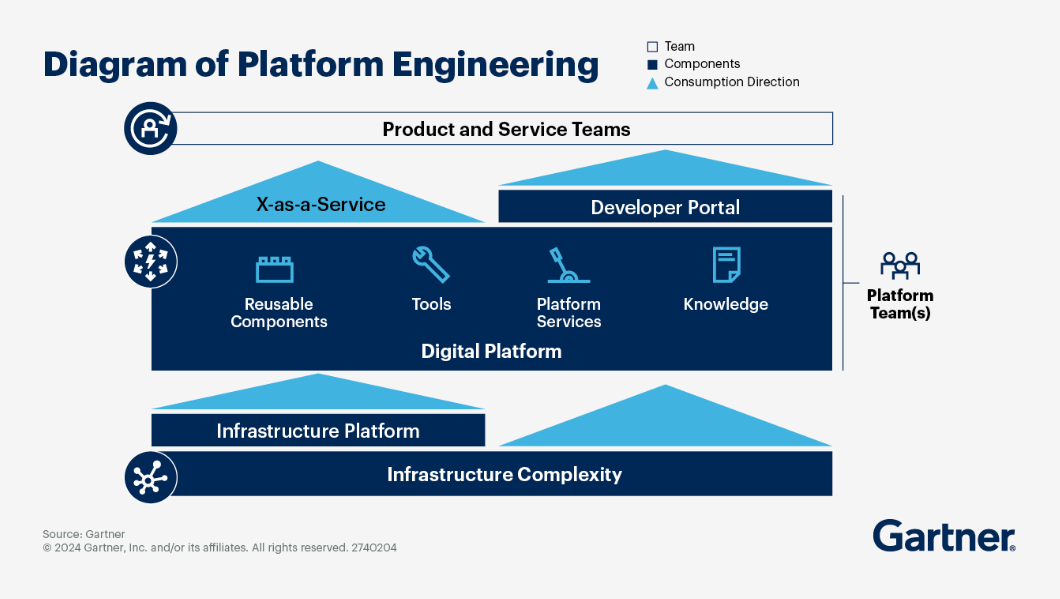

There is a CNCF working group which provides the definition of Capabilities of platforms and shows a first idea of the layered architecture of platforms as service layer for developers (“product and application teams”):

Important: As Platform engineer also notice the platform-eng-maturity-model

Platform Engineering Team

Or, in another illustration for the platform as a developer service interface, which also defines the ‘Platform Engineering Team’ inbetween:

How to set up Platforms

As we now have evidence about the nescessity of platforms, their capabilities and benefit as self service layer for developers, we want to thin about how to build them.

First of all some important wording to motivate the important term ‘internal developer platfoms’ (we will go into this deeper in the next section with the general architecture), which is clear today, but took years to evolve and is still some amount if effort to jump in:

- Platform: As defined above: A product which serves software engineering teams

- Platform Engineering: Creating such a product

- Internal Developer Platforms (IDP): A platform for developers :-)

- Internal Developer Portal: The entry point of a developer to such an IDP

CNCF mapping from capabilities to (CNCF) projects/tools

Ecosystems in InternalDeveloperPlatform

Build or buy - this is also in pltaform engineering a tweaked discussion, which one of the oldest player answers like this with some oppinioated internal capability structuring:

[internaldeveloperplatform.org[(https://internaldeveloperplatform.org/platform-tooling/)

What comes next?

Next we’ll see how these concepts got structured!

Addendum

Digital Platform defintion from What we call a Platform

Words are hard, it seems. ‘Platform’ is just about the most ambiguous term we could use for an approach that is super-important for increasing delivery speed and efficiency at scale. Hence the title of this article, here is what I’ve been talking about most recently.

Definitions for software and hardware platforms abound, generally describing an operating environment upon which applications can execute and which provides reusable capabilities such as file systems and security.

Zooming out, at an organisational level a ‘digital platform’ has similar characteristics - an operating environment which teams can build upon to deliver product features to customers more quickly, supported by reusable capabilities.

A digital platform is a foundation of self-service APIs, tools, services, knowledge and support which are arranged as a compelling internal product. Autonomous delivery teams can make use of the platform to deliver product features at a higher pace, with reduced co-ordination.

Myths :-) - What are platforms not

common-myths-about-platform-engineering

Platform Teams

This is about you :-), the platform engineering team:

how-to-build-your-platform-engineering-team

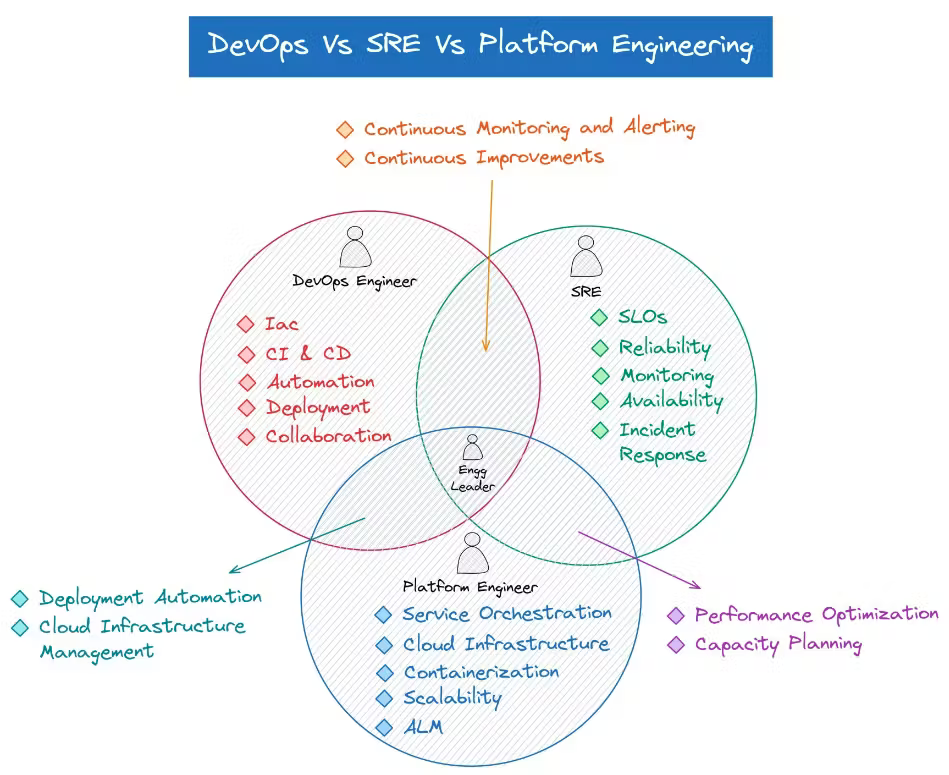

in comparison: devops vs sre vs platform

https://www.qovery.com/blog/devops-vs-platform-engineering-is-there-a-difference/

1.4 - Orchestrators

Summary

When defining and setting up platforms next two intrinsic problems arise:

- it is not declarative and automated

- it is not or least not easily changable

Thus the technology of ‘Platform Orchestrating’ emerged recently, in late 2023.

Platform reference architecture

An interesting difference between the CNCF white paper building blocks and them from Internaldeveloperplatforms is the part orchestrators.

This is something extremely new since late 2023 - the rise of the automation of platform engineering!

It was Humanitec creating a definition of platform architecture, as they needed to defien what they are building with their ‘orchestrator’:

Declarative Platform Orchestration

Based on the refence architecture you next can build - or let’s say ‘orchestrate’ - different platform implementations, based on declarative definitions of the platform design.

https://humanitec.com/reference-architectures

Hint: There is a slides tool provided by McKinsey to set up your own platform deign based on the reference architecture

What comes next?

Next we’ll see how we are going to do platform orchestration with CNOE!

Addendum

Building blocks from Humanitecs ‘state-of-platform-engineering-report-volume-2’

You remember the capability mappings from the time before orchestration? Here we have a similar setup based on Humanitecs platform engineering status ewhite paper:

1.5 - CNOE

Summary

In late 2023 platform orchestration raised - the discipline of declarativley dinfing, building, orchestarting and reconciling building blocks of (digital) platforms.

The famost one ist the platform orchestrator from Humanitec. They provide lots of concepts and access, also open sourced tools and schemas. But they do not have open sourced the ocheastartor itself.

Thus we were looking for open source means for platform orchestrating and found CNOE.

Requirements for an Orchestrator

When we want to set up a complete platform we expect to have

- a schema which defines the platform, its ressources and internal behaviour

- a dynamic configuration or templating mechanism to provide a concrete specification of a platform

- a deployment mechanism to deploy and reconcile the platform

This is what CNOE delivers:

For seamless transition into a CNOE-compliant delivery pipeline, CNOE will aim at delivering “packaging specifications”, “templating mechanisms”, as well as “deployer technologies”, an example of which is enabled via the idpBuilder tool we have released. The combination of templates, specifications, and deployers allow for bundling and then unpacking of CNOE recommended tools into a user’s DevOps environment. This enables teams to share and deliver components that are deemed to be the best tools for the job.

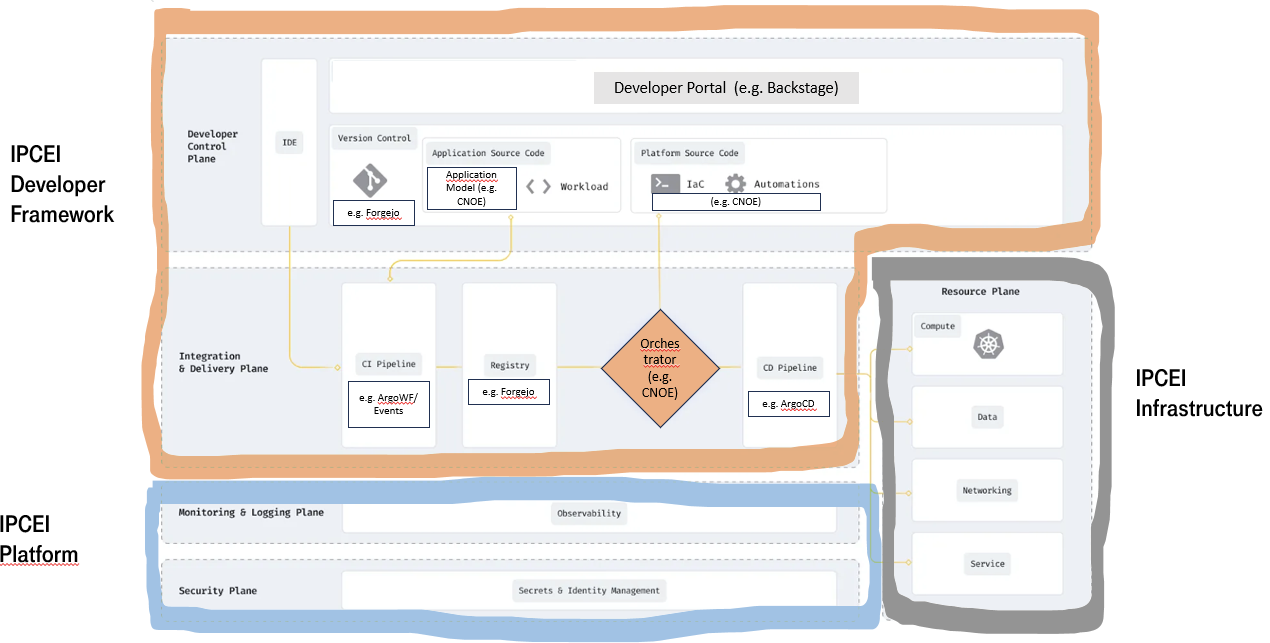

CNOE (capabilities) architecture

Architecture

CNOE architecture looks a bit different than the reference architecture from Humanitec, but this just a matter of details and arrangement:

Hint: This has to be further investigated! This is subject to an Epic.

Capabilities

You have a definition of all the capabilities here:

Hint: This has to be further investigated! This is subject to an Epic.

Stacks

CNOE calls the schema and templating mechnanism ‘stacks’:

Hint: This has to be further investigated! This is subject to an Epic.

There are already some example stacks:

What comes next?

Next we’ll see how a CNOE stacked Internal Developer Platform is deployed on you local laptop!

1.6 - CNOE Showtime

Summary

CNOE is a ‘Platform Engineering Framework’ (Danger: Our wording!) - it is open source and locally runnable.

It consists of the orchestrator ‘idpbuilder’ and both of some predefined building blocks and also some predefined platform configurations.

Orchestrator ‘idpbuilder’, initial run

The orchestrator in CNOE is called ‘idpbuilder’. It is locally installable binary

A typipcal first setup ist described here: https://cnoe.io/docs/reference-implementation/technology

# this is a local linux shell

# check local installation

type idpbuilder

idpbuilder is /usr/local/bin/idpbuilder

# check version

idpbuilder version

idpbuilder 0.8.0-nightly.20240914 go1.22.7 linux/amd64

# do some completion and aliasing

source <(idpbuilder completion bash)

alias ib=idpbuilder

complete -F __start_idpbuilder ib

# check and remove all existing kind clusters

kind delete clusters --all

kind get clusters

# sth. like 'No kind clusters found.'

# run

$ib create --use-path-routing --log-level debug --package-dir https://github.com/cnoe-io/stacks//ref-implementation

You get output like

stl@ubuntu-vpn:~/git/mms/ipceicis-developerframework$ idpbuilder create

Oct 1 10:09:18 INFO Creating kind cluster logger=setup

Oct 1 10:09:18 INFO Runtime detected logger=setup provider=docker

########################### Our kind config ############################

# Kind kubernetes release images https://github.com/kubernetes-sigs/kind/releases

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

image: "kindest/node:v1.30.0"

labels:

ingress-ready: "true"

extraPortMappings:

- containerPort: 443

hostPort: 8443

protocol: TCP

containerdConfigPatches:

- |-

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."gitea.cnoe.localtest.me:8443"]

endpoint = ["https://gitea.cnoe.localtest.me"]

[plugins."io.containerd.grpc.v1.cri".registry.configs."gitea.cnoe.localtest.me".tls]

insecure_skip_verify = true

######################### config end ############################

Show time steps

Goto https://cnoe.io/docs/reference-implementation/installations/idpbuilder/usage, and follow the flow

Prepare a k8s cluster with kind

You may have seen: when starting idpbuilder without an existing cluster named localdev it first creates this cluster by calling kindwith an internally defined config.

It’s an important feature of idpbuilder that it will set up on an existing cluster localdev when called several times in a row e.g. to append new packages to the cluster.

That’s why we here first create the kind cluster localdevitself:

cat << EOF | kind create cluster --name localdev --config=-

# Kind kubernetes release images https://github.com/kubernetes-sigs/kind/releases

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

image: "kindest/node:v1.30.0"

labels:

ingress-ready: "true"

extraPortMappings:

- containerPort: 443

hostPort: 8443

protocol: TCP

containerdConfigPatches:

- |-

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."gitea.cnoe.localtest.me:8443"]

endpoint = ["https://gitea.cnoe.localtest.me"]

[plugins."io.containerd.grpc.v1.cri".registry.configs."gitea.cnoe.localtest.me".tls]

insecure_skip_verify = true

# alternatively, if you have the kind config as file:

kind create cluster --name localdev --config kind-config.yaml

Output

A typical raw kind setup kubernetes cluster would look like this with respect to running pods:

be sure all pods are in status ‘running’

stl@ubuntu-vpn:~/git/mms/idpbuilder$ k get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-76f75df574-lb7jz 1/1 Running 0 15s

kube-system coredns-76f75df574-zm2wp 1/1 Running 0 15s

kube-system etcd-localdev-control-plane 1/1 Running 0 27s

kube-system kindnet-8qhd5 1/1 Running 0 13s

kube-system kindnet-r4d6n 1/1 Running 0 15s

kube-system kube-apiserver-localdev-control-plane 1/1 Running 0 27s

kube-system kube-controller-manager-localdev-control-plane 1/1 Running 0 27s

kube-system kube-proxy-vrh64 1/1 Running 0 15s

kube-system kube-proxy-w8ks9 1/1 Running 0 13s

kube-system kube-scheduler-localdev-control-plane 1/1 Running 0 27s

local-path-storage local-path-provisioner-6f8956fb48-6fvt2 1/1 Running 0 15s

First run: Start with core applications, ‘core package’

Now we run idpbuilder the first time:

# now idpbuilder reuses the already existing cluster

# pls apply '--use-path-routing' otherwise as we discovered currently the service resolving inside the cluster won't work

ib create --use-path-routing

Output

idpbuilder log

stl@ubuntu-vpn:~/git/mms/idpbuilder$ ib create --use-path-routing

Oct 1 10:32:50 INFO Creating kind cluster logger=setup

Oct 1 10:32:50 INFO Runtime detected logger=setup provider=docker

Oct 1 10:32:50 INFO Cluster already exists logger=setup cluster=localdev

Oct 1 10:32:50 INFO Adding CRDs to the cluster logger=setup

Oct 1 10:32:51 INFO Setting up CoreDNS logger=setup

Oct 1 10:32:51 INFO Setting up TLS certificate logger=setup

Oct 1 10:32:51 INFO Creating localbuild resource logger=setup

Oct 1 10:32:51 INFO Starting EventSource controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository source=kind source: *v1alpha1.GitRepository

Oct 1 10:32:51 INFO Starting Controller controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 10:32:51 INFO Starting EventSource controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild source=kind source: *v1alpha1.Localbuild

Oct 1 10:32:51 INFO Starting Controller controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 10:32:51 INFO Starting EventSource controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage source=kind source: *v1alpha1.CustomPackage

Oct 1 10:32:51 INFO Starting Controller controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 10:32:51 INFO Starting workers controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild worker count=1

Oct 1 10:32:51 INFO Starting workers controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage worker count=1

Oct 1 10:32:51 INFO Starting workers controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository worker count=1

Oct 1 10:32:54 INFO Waiting for Deployment my-gitea to become ready controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:32:54 INFO Waiting for Deployment ingress-nginx-controller to become ready controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:33:24 INFO Waiting for Deployment my-gitea to become ready controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:33:24 INFO Waiting for Deployment ingress-nginx-controller to become ready controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:33:54 INFO Waiting for Deployment my-gitea to become ready controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:34:24 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:34:24 INFO expected annotation, cnoe.io/last-observed-cli-start-time, not found controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:34:24 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=6fc396d4-e0d5-4c80-aaee-20c1bbffea54

Oct 1 10:34:25 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=0667fa85-af1c-403f-bcd9-16ff8f2fad7e

Oct 1 10:34:25 INFO expected annotation, cnoe.io/last-observed-cli-start-time, not found controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=0667fa85-af1c-403f-bcd9-16ff8f2fad7e

Oct 1 10:34:25 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=0667fa85-af1c-403f-bcd9-16ff8f2fad7e

Oct 1 10:34:40 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=ec720aeb-02cd-4974-a991-cf2f19ce8536

Oct 1 10:34:40 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=ec720aeb-02cd-4974-a991-cf2f19ce8536

Oct 1 10:34:40 INFO Shutting Down controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=ec720aeb-02cd-4974-a991-cf2f19ce8536

Oct 1 10:34:40 INFO Stopping and waiting for non leader election runnables

Oct 1 10:34:40 INFO Stopping and waiting for leader election runnables

Oct 1 10:34:40 INFO Shutdown signal received, waiting for all workers to finish controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 10:34:40 INFO Shutdown signal received, waiting for all workers to finish controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 10:34:40 INFO All workers finished controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 10:34:40 INFO Shutdown signal received, waiting for all workers to finish controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 10:34:40 INFO All workers finished controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 10:34:40 INFO All workers finished controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 10:34:40 INFO Stopping and waiting for caches

Oct 1 10:34:40 INFO Stopping and waiting for webhooks

Oct 1 10:34:40 INFO Stopping and waiting for HTTP servers

Oct 1 10:34:40 INFO Wait completed, proceeding to shutdown the manager

########################### Finished Creating IDP Successfully! ############################

Can Access ArgoCD at https://cnoe.localtest.me:8443/argocd

Username: admin

Password can be retrieved by running: idpbuilder get secrets -p argocd

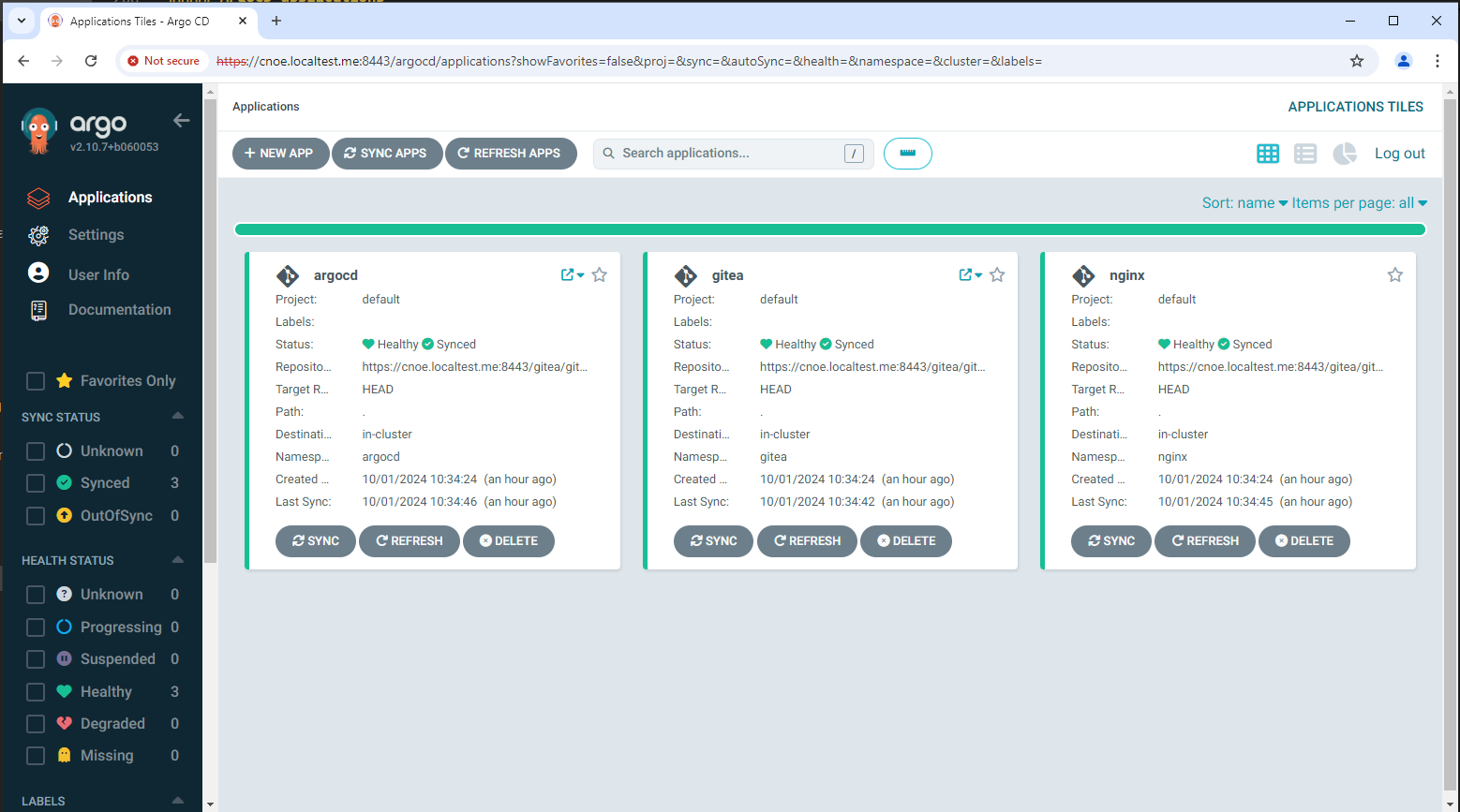

ArgoCD applications

When running idpbuilder ‘barely’ (without package option) you get the ‘core applications’ deployed in your cluster:

stl@ubuntu-vpn:~/git/mms/ipceicis-developerframework$ k get applications -A

NAMESPACE NAME SYNC STATUS HEALTH STATUS

argocd argocd Synced Healthy

argocd gitea Synced Healthy

argocd nginx Synced Healthy

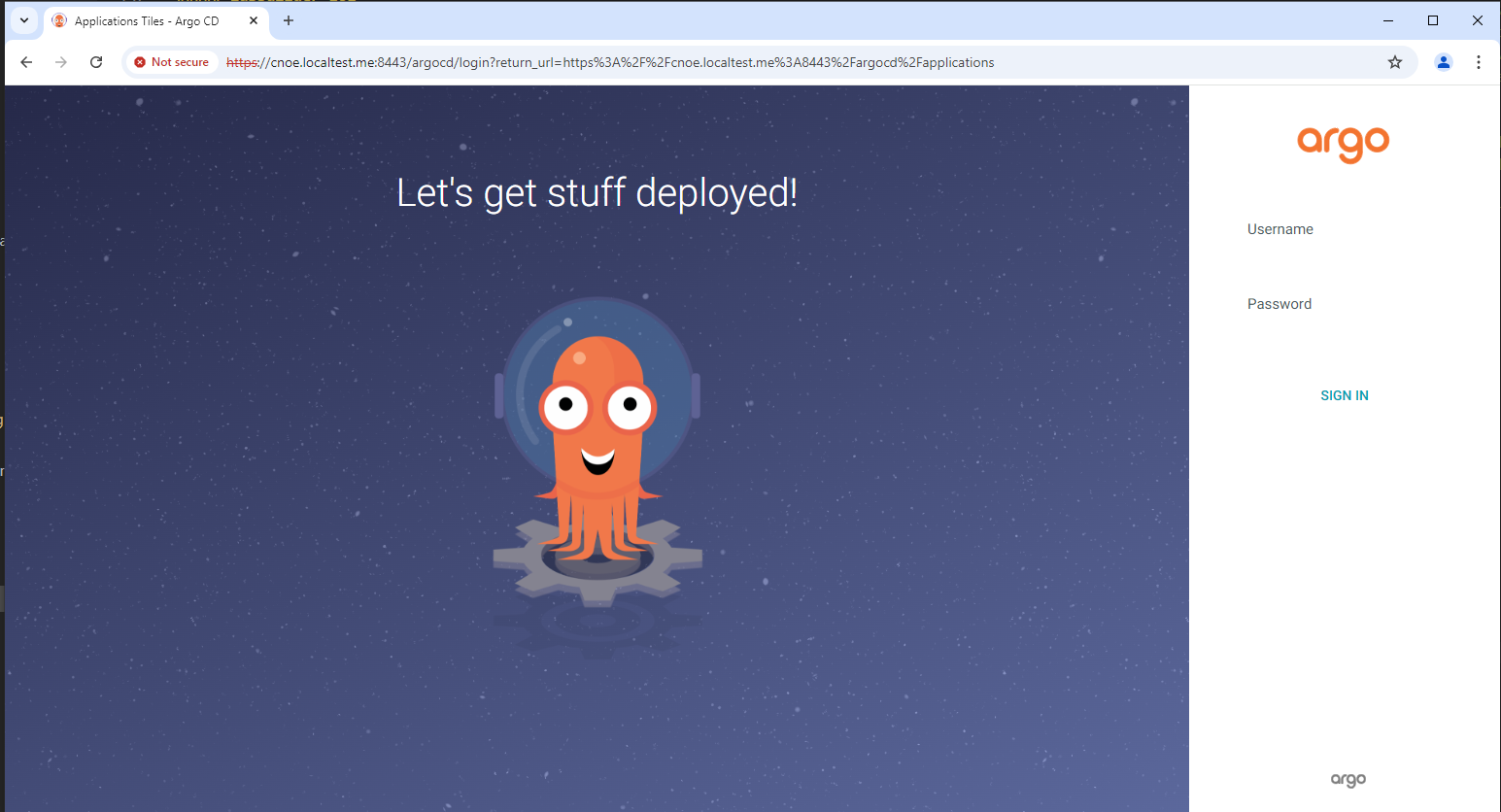

ArgoCD UI

Open ArgoCD locally:

https://cnoe.localtest.me:8443/argocd

Next find the provided credentials for ArgoCD (here: argocd-initial-admin-secret):

stl@ubuntu-vpn:~/git/mms/idpbuilder$ ib get secrets

---------------------------

Name: argocd-initial-admin-secret

Namespace: argocd

Data:

password : 2MoMeW30wSC9EraF

username : admin

---------------------------

Name: gitea-credential

Namespace: gitea

Data:

password : LI$T?o>N{-<|{^dm$eTg*gni1(2:Y0@q344yqQIS

username : giteaAdmin

In ArgoCD you will see the deployed three applications of the core package:

Second run: Append ‘package1’ from the CNOE-stacks repo

CNOE provides example packages in https://github.com/cnoe-io/stacks.git. Having cloned this repo you can locally refer to theses packages:

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ git remote -v

origin https://github.com/cnoe-io/stacks.git (fetch)

origin https://github.com/cnoe-io/stacks.git (push)

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ls -al

total 64

drwxr-xr-x 12 stl stl 4096 Sep 28 13:55 .

drwxr-xr-x 26 stl stl 4096 Sep 30 11:53 ..

drwxr-xr-x 8 stl stl 4096 Sep 28 13:56 .git

drwxr-xr-x 4 stl stl 4096 Jul 29 10:57 .github

-rw-r--r-- 1 stl stl 11341 Sep 28 09:12 LICENSE

-rw-r--r-- 1 stl stl 1079 Sep 28 13:55 README.md

drwxr-xr-x 4 stl stl 4096 Jul 29 10:57 basic

drwxr-xr-x 4 stl stl 4096 Sep 14 15:54 crossplane-integrations

drwxr-xr-x 3 stl stl 4096 Aug 13 14:52 dapr-integration

drwxr-xr-x 3 stl stl 4096 Sep 14 15:54 jupyterhub

drwxr-xr-x 6 stl stl 4096 Aug 16 14:36 local-backup

drwxr-xr-x 3 stl stl 4096 Aug 16 14:36 localstack-integration

drwxr-xr-x 8 stl stl 4096 Sep 28 13:02 ref-implementation

drwxr-xr-x 2 stl stl 4096 Aug 16 14:36 terraform-integrations

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ls -al basic/

total 20

drwxr-xr-x 4 stl stl 4096 Jul 29 10:57 .

drwxr-xr-x 12 stl stl 4096 Sep 28 13:55 ..

-rw-r--r-- 1 stl stl 632 Jul 29 10:57 README.md

drwxr-xr-x 3 stl stl 4096 Jul 29 10:57 package1

drwxr-xr-x 2 stl stl 4096 Jul 29 10:57 package2

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ls -al basic/package1

total 16

drwxr-xr-x 3 stl stl 4096 Jul 29 10:57 .

drwxr-xr-x 4 stl stl 4096 Jul 29 10:57 ..

-rw-r--r-- 1 stl stl 655 Jul 29 10:57 app.yaml

drwxr-xr-x 2 stl stl 4096 Jul 29 10:57 manifests

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ls -al basic/package2

total 16

drwxr-xr-x 2 stl stl 4096 Jul 29 10:57 .

drwxr-xr-x 4 stl stl 4096 Jul 29 10:57 ..

-rw-r--r-- 1 stl stl 498 Jul 29 10:57 app.yaml

-rw-r--r-- 1 stl stl 500 Jul 29 10:57 app2.yaml

Output

Now we run idpbuilder the second time with -p basic/package1

idpbuilder log

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ib create --use-path-routing -p basic/package1

Oct 1 12:09:27 INFO Creating kind cluster logger=setup

Oct 1 12:09:27 INFO Runtime detected logger=setup provider=docker

Oct 1 12:09:27 INFO Cluster already exists logger=setup cluster=localdev

Oct 1 12:09:28 INFO Adding CRDs to the cluster logger=setup

Oct 1 12:09:28 INFO Setting up CoreDNS logger=setup

Oct 1 12:09:28 INFO Setting up TLS certificate logger=setup

Oct 1 12:09:28 INFO Creating localbuild resource logger=setup

Oct 1 12:09:28 INFO Starting EventSource controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild source=kind source: *v1alpha1.Localbuild

Oct 1 12:09:28 INFO Starting Controller controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 12:09:28 INFO Starting EventSource controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage source=kind source: *v1alpha1.CustomPackage

Oct 1 12:09:28 INFO Starting Controller controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 12:09:28 INFO Starting EventSource controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository source=kind source: *v1alpha1.GitRepository

Oct 1 12:09:28 INFO Starting Controller controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 12:09:28 INFO Starting workers controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild worker count=1

Oct 1 12:09:28 INFO Starting workers controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository worker count=1

Oct 1 12:09:28 INFO Starting workers controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage worker count=1

Oct 1 12:09:29 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=0ed7ccc2-dec7-4ab8-909c-791a7d1b67a8

Oct 1 12:09:29 INFO unknown field "status.history[0].initiatedBy" logger=KubeAPIWarningLogger

Oct 1 12:09:29 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=0ed7ccc2-dec7-4ab8-909c-791a7d1b67a8

Oct 1 12:09:29 ERROR failed updating repo status controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage name=app-my-app namespace=idpbuilder-localdev namespace=idpbuilder-localdev name=app-my-app reconcileID=f9873560-5dcd-4e59-b6f7-ce5d1029ef3d err=Operation cannot be fulfilled on custompackages.idpbuilder.cnoe.io "app-my-app": the object has been modified; please apply your changes to the latest version and try again

Oct 1 12:09:29 ERROR Reconciler error controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage name=app-my-app namespace=idpbuilder-localdev namespace=idpbuilder-localdev name=app-my-app reconcileID=f9873560-5dcd-4e59-b6f7-ce5d1029ef3d err=updating argocd application object my-app: Operation cannot be fulfilled on applications.argoproj.io "my-app": the object has been modified; please apply your changes to the latest version and try again

Oct 1 12:09:31 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=531cc2d4-6250-493a-aca8-fecf048a608d

Oct 1 12:09:31 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=531cc2d4-6250-493a-aca8-fecf048a608d

Oct 1 12:09:44 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=022b9813-8708-4f4e-90d7-38f1e114c46f

Oct 1 12:09:44 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=022b9813-8708-4f4e-90d7-38f1e114c46f

Oct 1 12:10:00 INFO installing bootstrap apps to ArgoCD controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=79a85c21-42c1-41ec-ad03-2bb77aeae027

Oct 1 12:10:00 INFO Checking if we should shutdown controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=79a85c21-42c1-41ec-ad03-2bb77aeae027

Oct 1 12:10:00 INFO Shutting Down controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild name=localdev name=localdev reconcileID=79a85c21-42c1-41ec-ad03-2bb77aeae027

Oct 1 12:10:00 INFO Stopping and waiting for non leader election runnables

Oct 1 12:10:00 INFO Stopping and waiting for leader election runnables

Oct 1 12:10:00 INFO Shutdown signal received, waiting for all workers to finish controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 12:10:00 INFO Shutdown signal received, waiting for all workers to finish controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 12:10:00 INFO All workers finished controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

Oct 1 12:10:00 INFO Shutdown signal received, waiting for all workers to finish controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 12:10:00 INFO All workers finished controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

Oct 1 12:10:00 INFO All workers finished controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

Oct 1 12:10:00 INFO Stopping and waiting for caches

Oct 1 12:10:00 INFO Stopping and waiting for webhooks

Oct 1 12:10:00 INFO Stopping and waiting for HTTP servers

Oct 1 12:10:00 INFO Wait completed, proceeding to shutdown the manager

########################### Finished Creating IDP Successfully! ############################

Can Access ArgoCD at https://cnoe.localtest.me:8443/argocd

Username: admin

Password can be retrieved by running: idpbuilder get secrets -p argocd

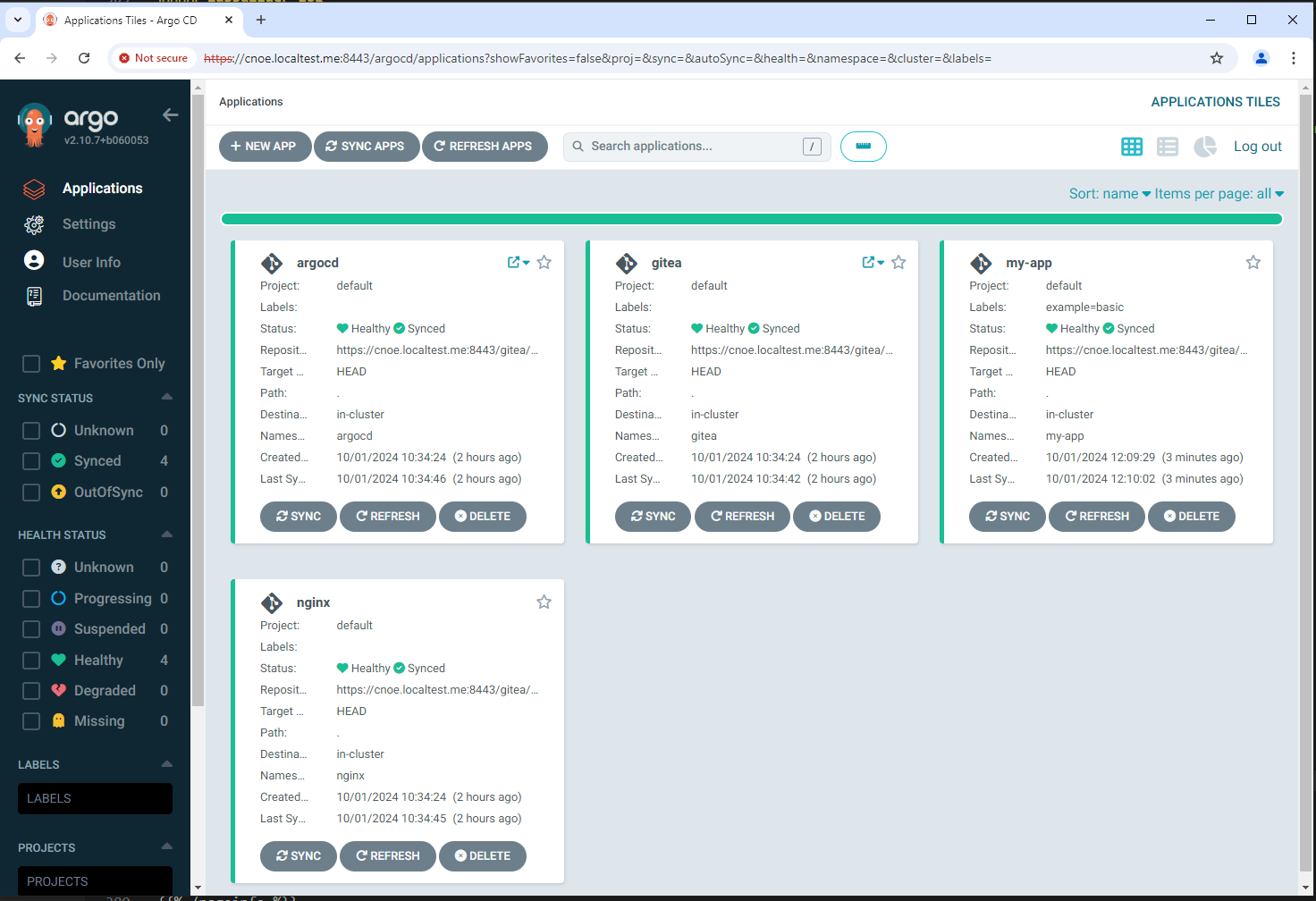

ArgoCD applications

Now we have additionally the ‘my-app’ deployed in the cluster:

stl@ubuntu-vpn:~$ k get applications -A

NAMESPACE NAME SYNC STATUS HEALTH STATUS

argocd argocd Synced Healthy

argocd gitea Synced Healthy

argocd my-app Synced Healthy

argocd nginx Synced Healthy

ArgoCD UI

Third run: Finally we append ‘ref-implementation’ from the CNOE-stacks repo

We finally append the so called ‘reference-implementation’, which provides a real basic IDP:

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ib create --use-path-routing -p ref-implementation

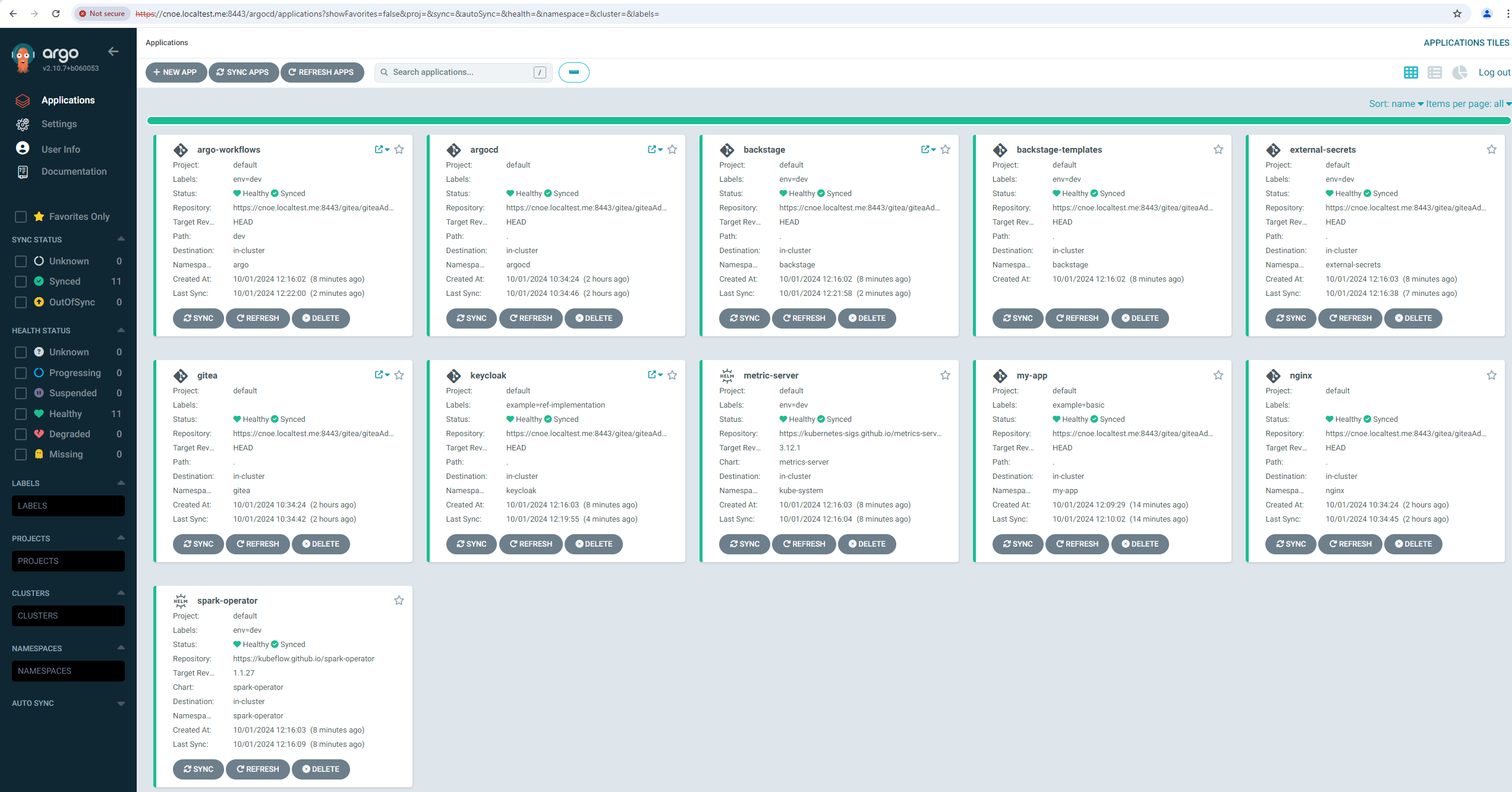

ArgoCD applications

stl@ubuntu-vpn:~$ k get applications -A

NAMESPACE NAME SYNC STATUS HEALTH STATUS

argocd argo-workflows Synced Healthy

argocd argocd Synced Healthy

argocd backstage Synced Healthy

argocd included-backstage-templates Synced Healthy

argocd external-secrets Synced Healthy

argocd gitea Synced Healthy

argocd keycloak Synced Healthy

argocd metric-server Synced Healthy

argocd my-app Synced Healthy

argocd nginx Synced Healthy

argocd spark-operator Synced Healthy

ArgoCD UI

ArgoCD shows all provissioned applications:

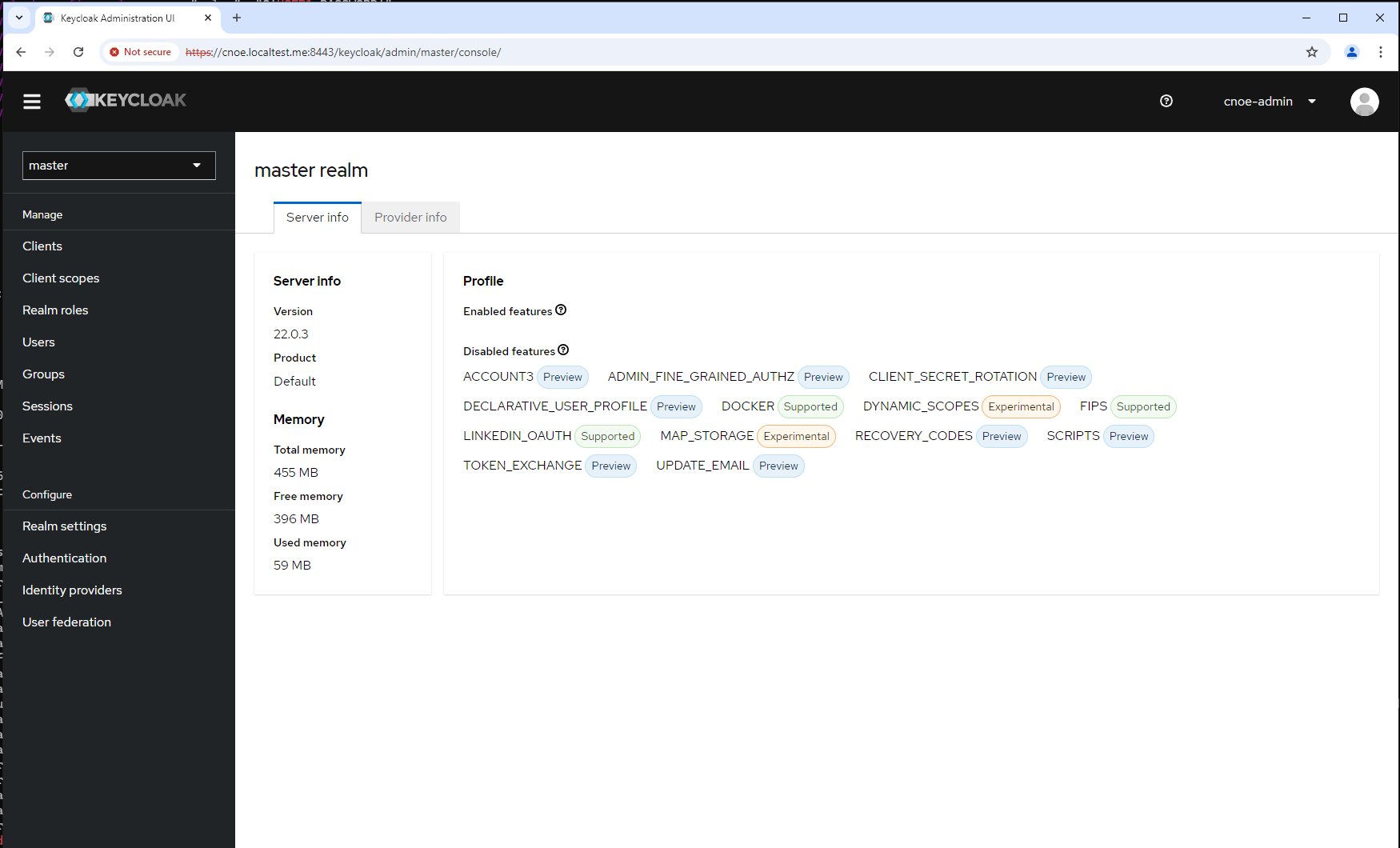

Keycloak UI

In our cluster there is also keycloak as IAM provisioned.

Login into Keycloak with ‘cnoe-admin’ and the KEYCLOAK_ADMIN_PASSWORD.

These credentails are defined in the package:

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ cat ref-implementation/keycloak/manifests/keycloak-config.yaml | grep -i admin

group-admin-payload.json: |

{"name":"admin"}

"/admin"

ADMIN_PASSWORD=$(cat /var/secrets/KEYCLOAK_ADMIN_PASSWORD)

--data-urlencode "username=cnoe-admin" \

--data-urlencode "password=${ADMIN_PASSWORD}" \

stl@ubuntu-vpn:~/git/mms/cnoe-stacks$ ib get secrets

---------------------------

Name: argocd-initial-admin-secret

Namespace: argocd

Data:

password : 2MoMeW30wSC9EraF

username : admin

---------------------------

Name: gitea-credential

Namespace: gitea

Data:

password : LI$T?o>N{-<|{^dm$eTg*gni1(2:Y0@q344yqQIS

username : giteaAdmin

---------------------------

Name: keycloak-config

Namespace: keycloak

Data:

KC_DB_PASSWORD : k3-1kgxxd/X2Cw//pX-uKMsmgWogEz5YGnb5

KC_DB_USERNAME : keycloak

KEYCLOAK_ADMIN_PASSWORD : zMSjv5eA0l/+0-MDAaaNe+rHRMrB2q0NssP-

POSTGRES_DB : keycloak

POSTGRES_PASSWORD : k3-1kgxxd/X2Cw//pX-uKMsmgWogEz5YGnb5

POSTGRES_USER : keycloak

USER_PASSWORD : Kd+0+/BqPRAvnLPZO-L2o/6DoBrzUeMsr29U

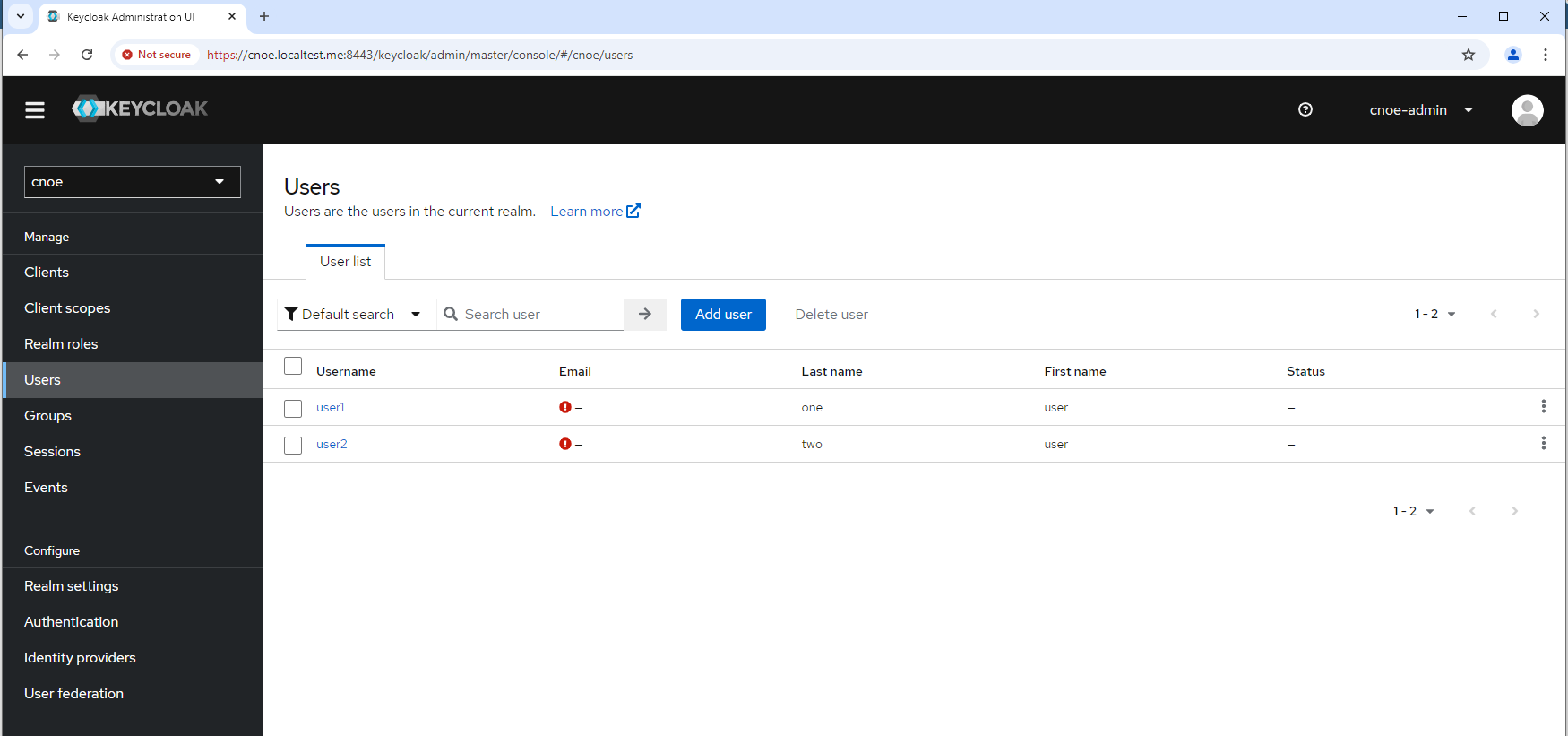

Backstage UI

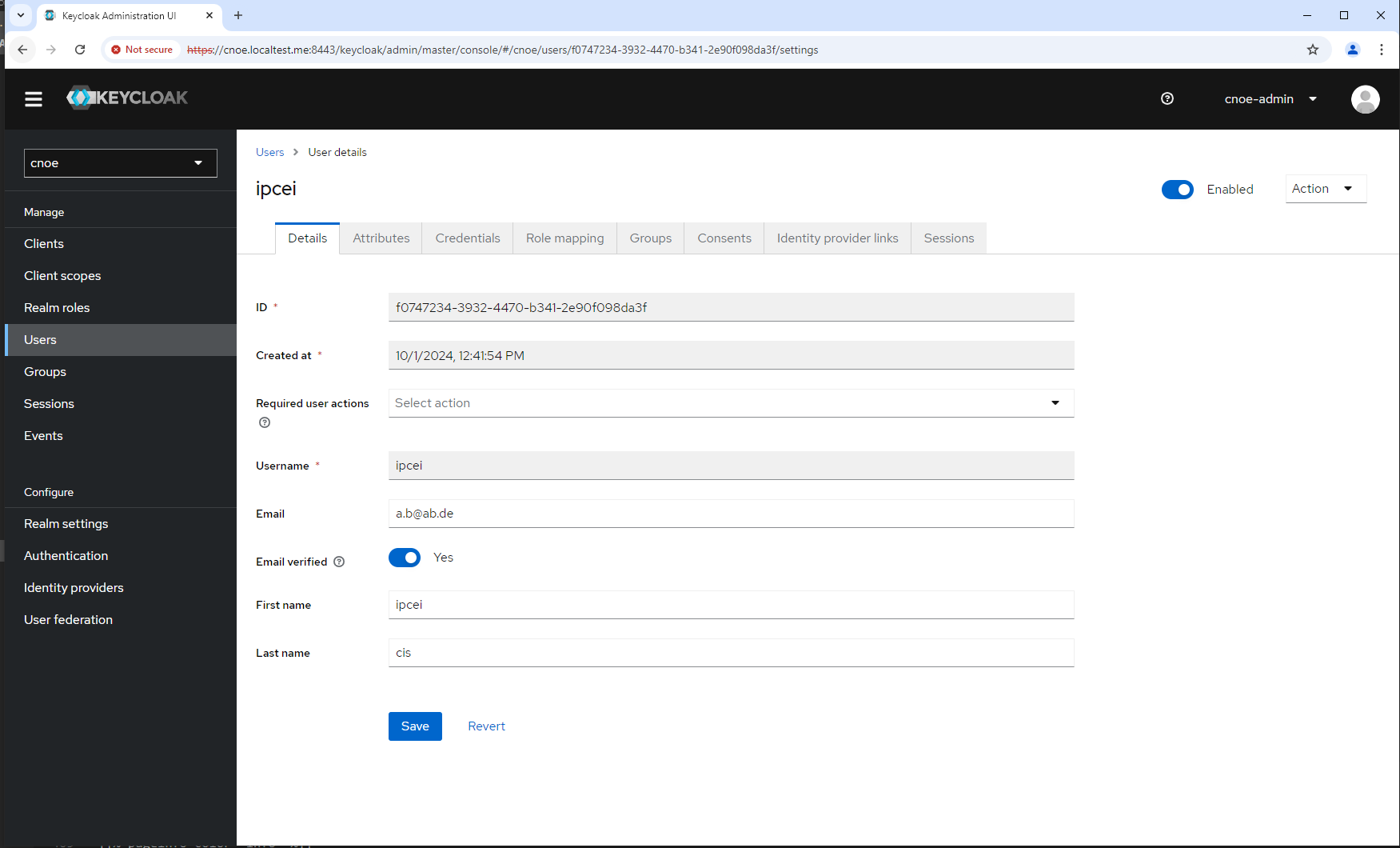

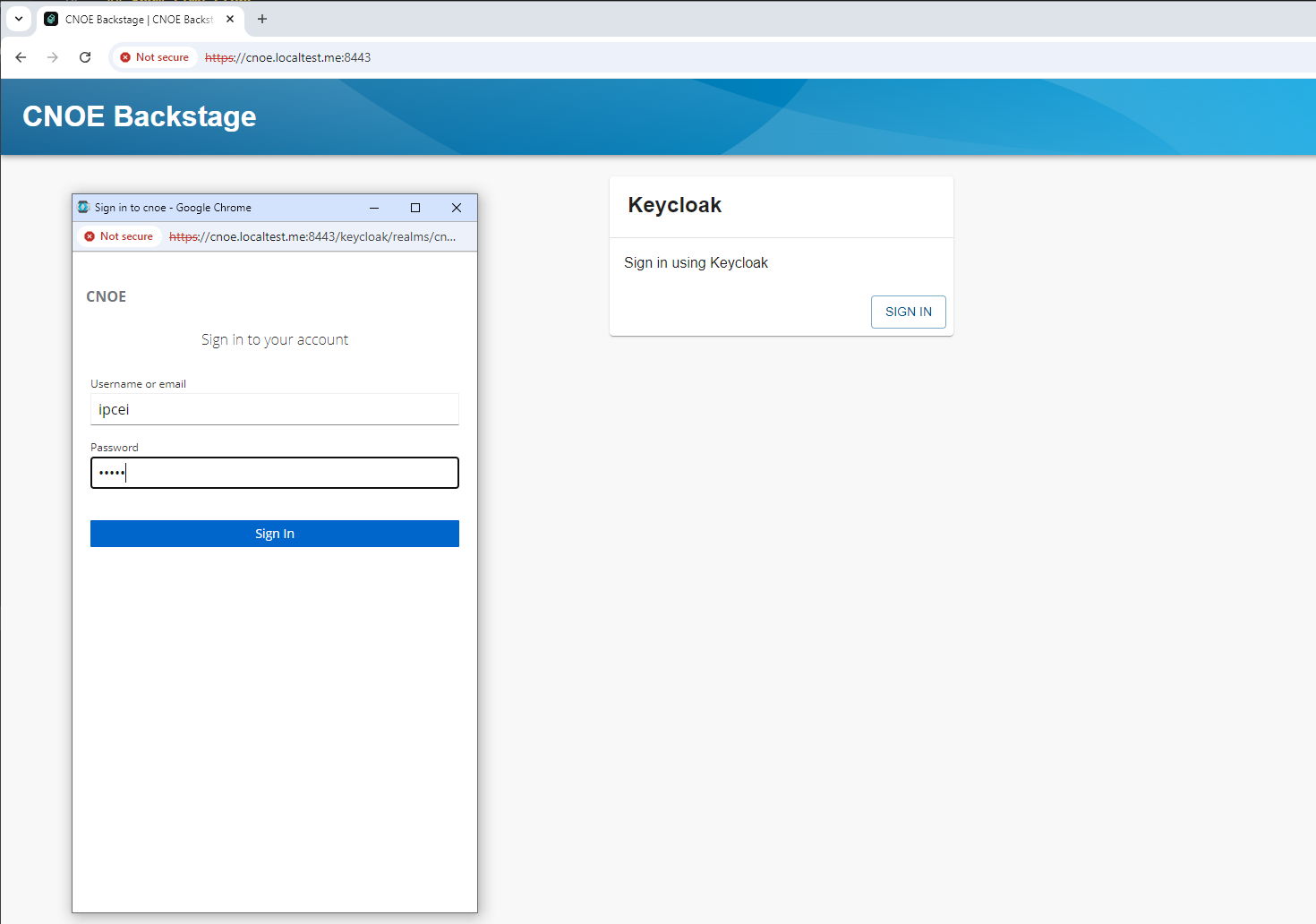

As Backstage login you either can use the ‘user1’ with USER_PASSWORD : Kd+0+/BqPRAvnLPZO-L2o/6DoBrzUeMsr29U or you create a new user in keycloak

We create user ‘ipcei’ and also set a password (in tab ‘Credentials’):

Now we can log into backstage (rember: you could have already existing usr ‘user1’):

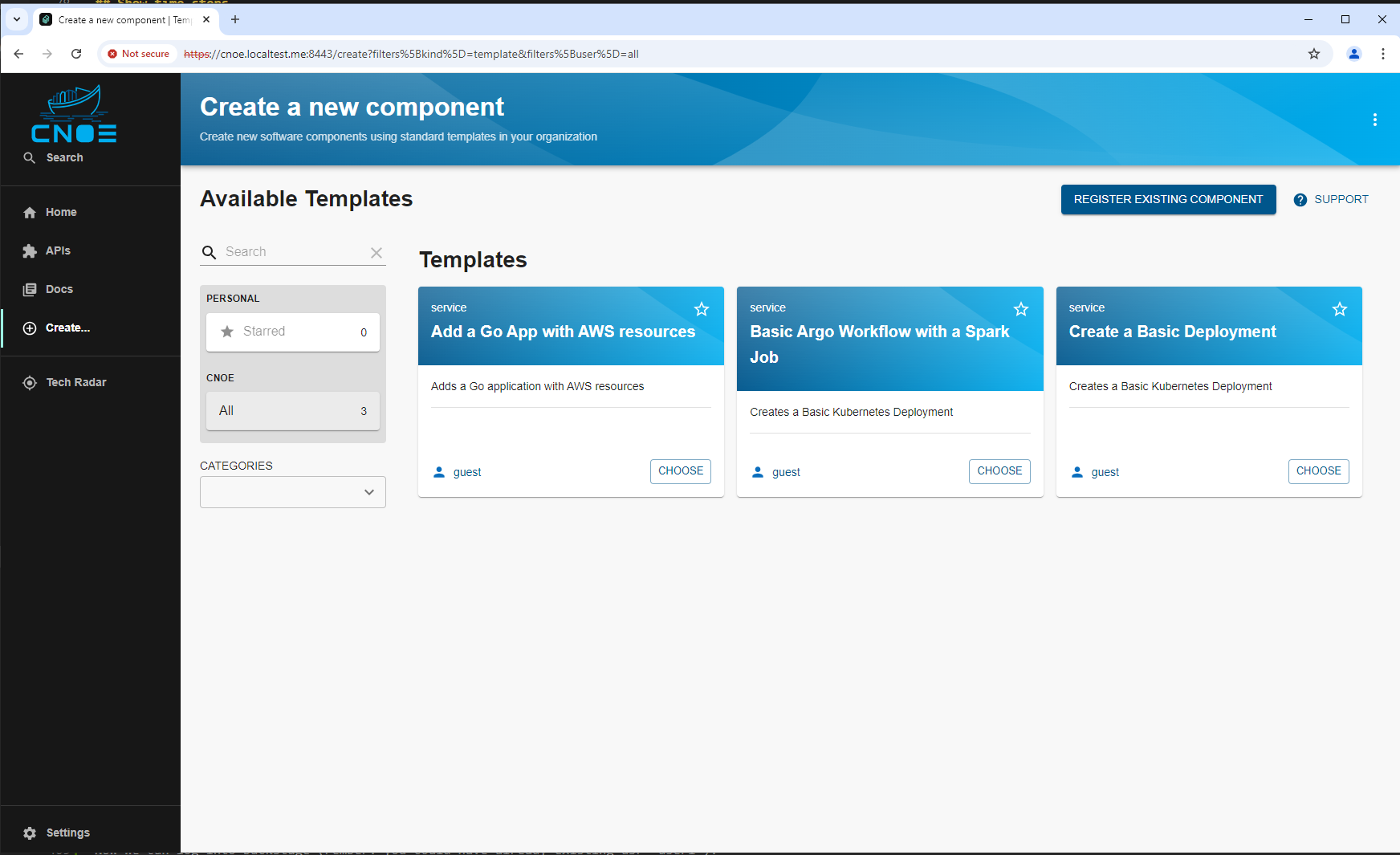

and see the basic setup of the Backstage portal:

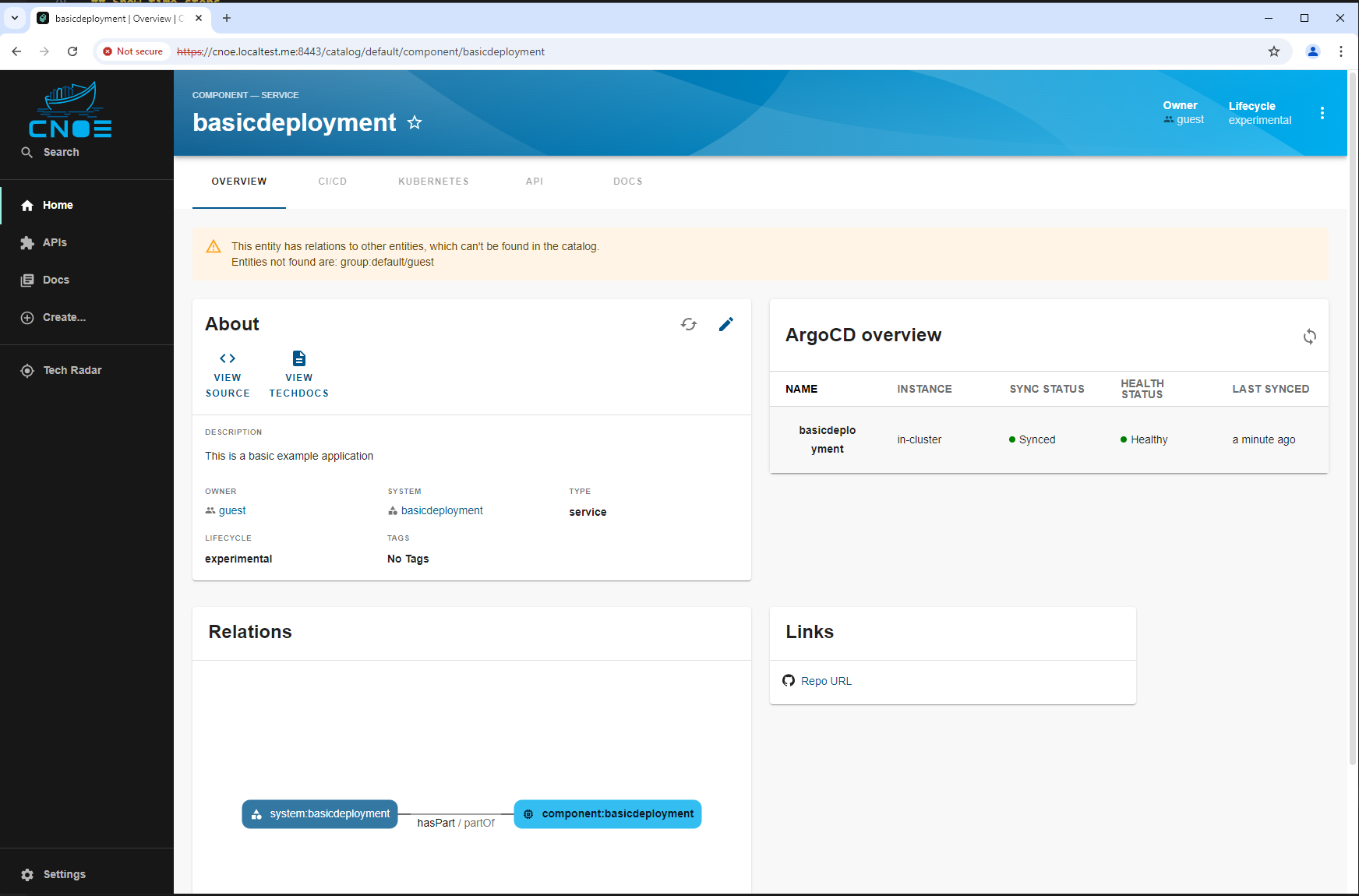

Use a Golden Path: ‘Basic Deployment’

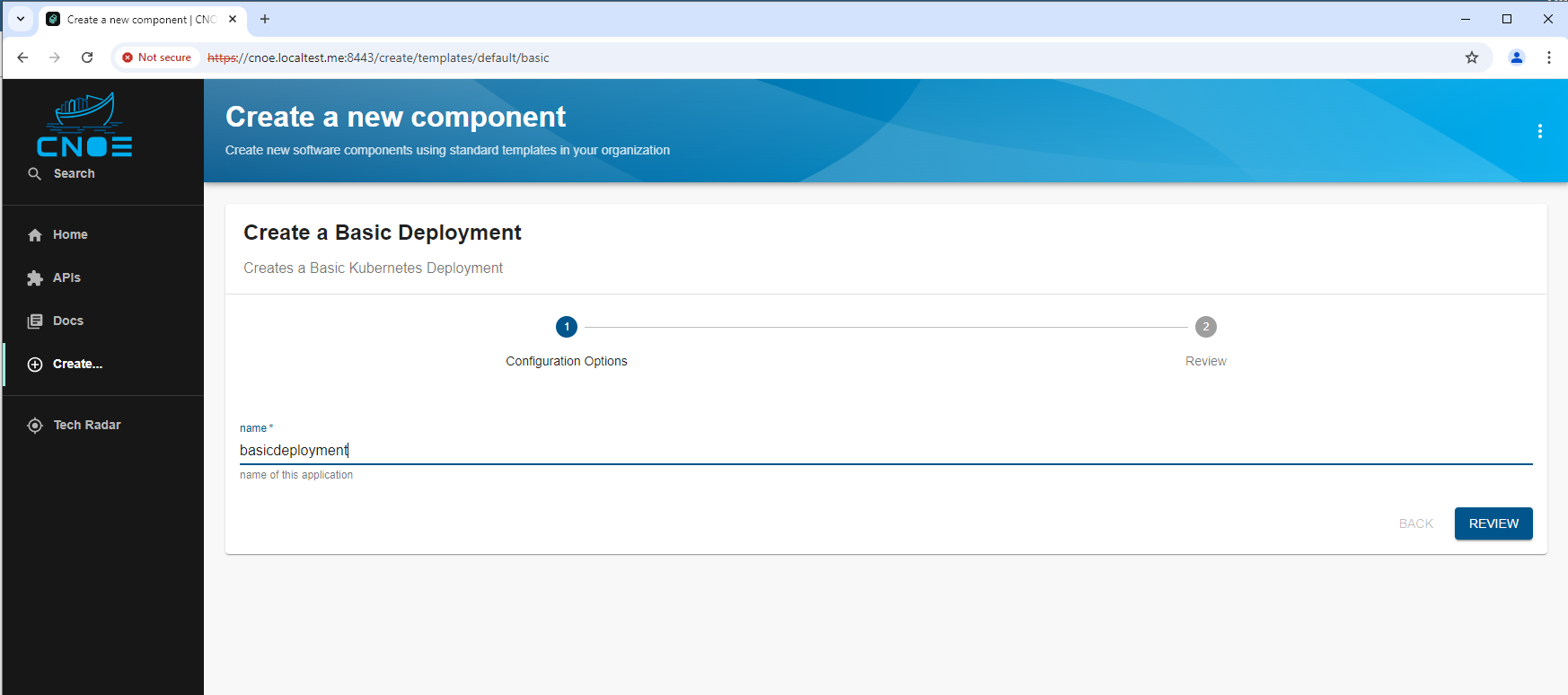

Now we want to use the Backstage portal as a developer. We create in Backstage our own platform based activity by using the golden path template ‘Basic Deployment:

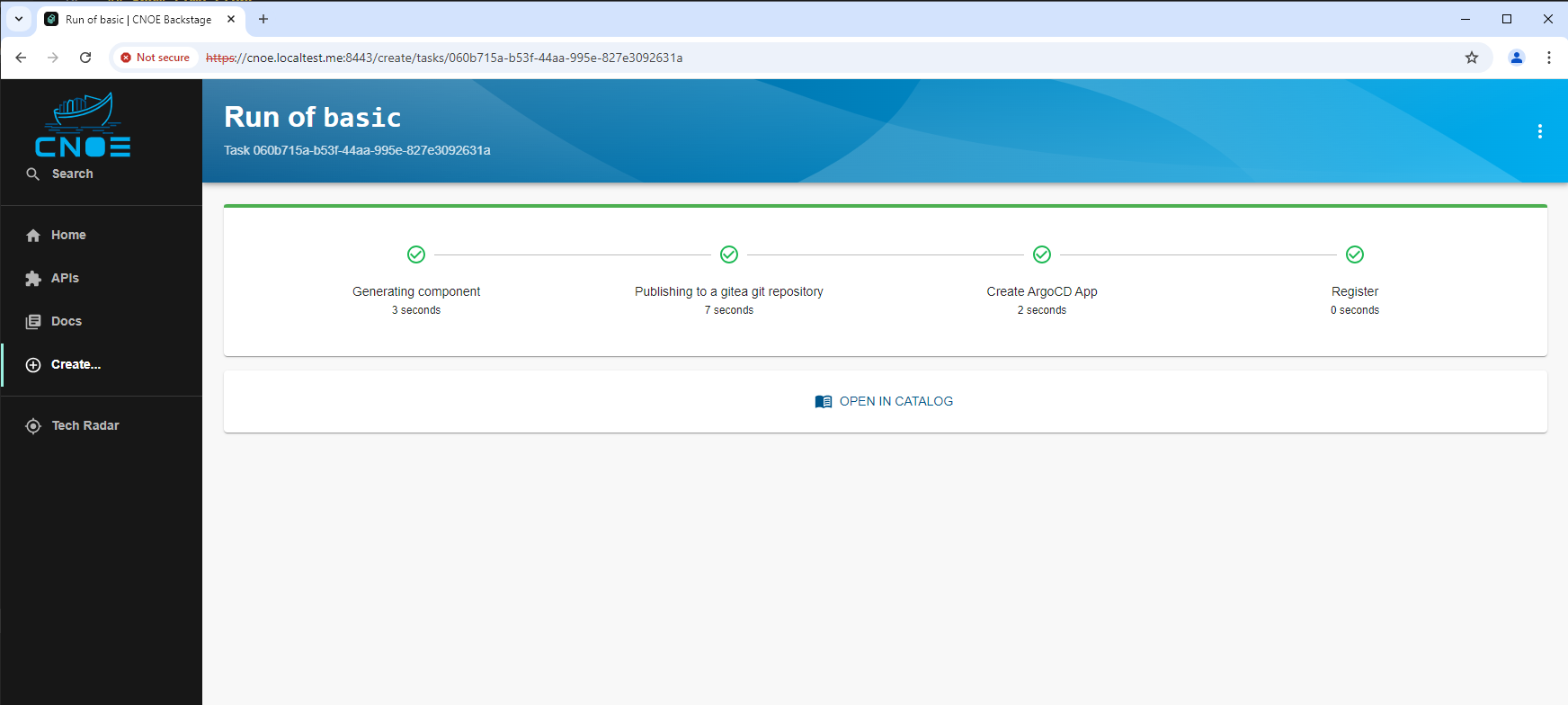

When we run it, we see ‘golden path activities’

which finally result in a new catalogue entry:

Software development lifecycle

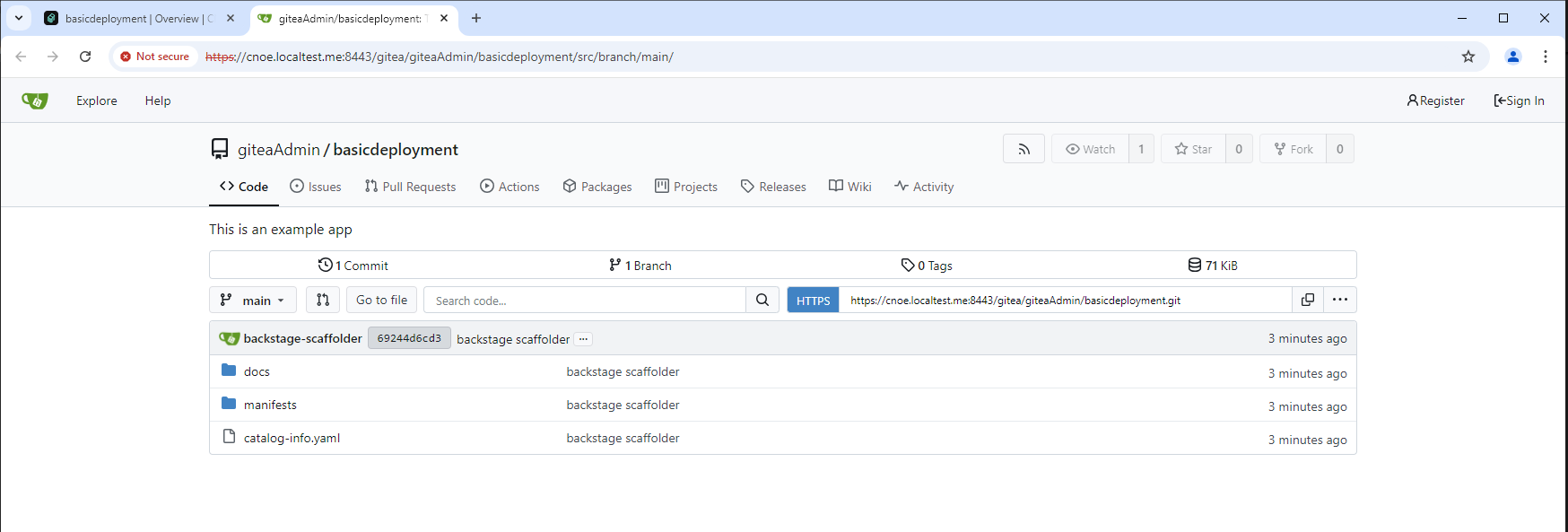

When we follow the ‘view source’ link we are directly linked to the git repo of our newly created application:

Check it out by cloning into a local git repo (watch the GIT_SSL_NO_VERIFY=true env setting):

stl@ubuntu-vpn:~/git/mms/idp-temporary$ GIT_SSL_NO_VERIFY=true git clone https://cnoe.localtest.me:8443/gitea/giteaAdmin/basicdeployment.git

Cloning into 'basicdeployment'...

remote: Enumerating objects: 10, done.

remote: Counting objects: 100% (10/10), done.

remote: Compressing objects: 100% (8/8), done.

remote: Total 10 (delta 0), reused 0 (delta 0), pack-reused 0 (from 0)

Receiving objects: 100% (10/10), 47.62 KiB | 23.81 MiB/s, done.

stl@ubuntu-vpn:~/git/mms/idp-temporary$ cd basicdeployment/

stl@ubuntu-vpn:~/git/mms/idp-temporary/basicdeployment$ ll

total 24

drwxr-xr-x 5 stl stl 4096 Oct 1 13:00 ./

drwxr-xr-x 4 stl stl 4096 Oct 1 13:00 ../

drwxr-xr-x 8 stl stl 4096 Oct 1 13:00 .git/

-rw-r--r-- 1 stl stl 928 Oct 1 13:00 catalog-info.yaml

drwxr-xr-x 3 stl stl 4096 Oct 1 13:00 docs/

drwxr-xr-x 2 stl stl 4096 Oct 1 13:00 manifests/

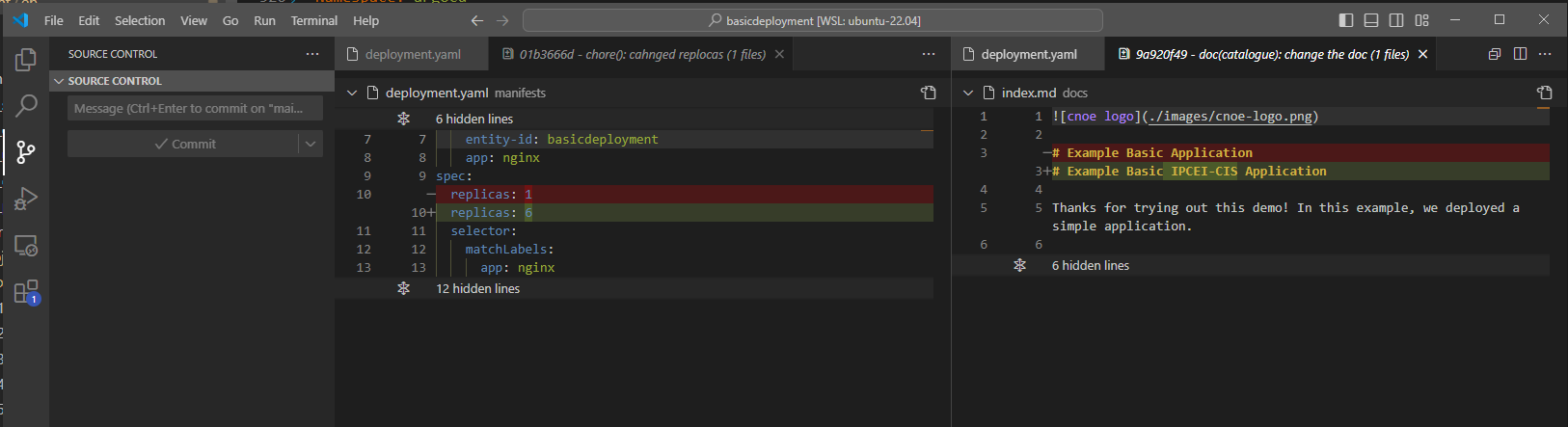

Edit and change

Change some things, like the decription and the replicas:

Push

Push your changes, use the giteaAdmin user to authenticate:

stl@ubuntu-vpn:~/git/mms/idp-temporary/basicdeployment$ ib get secrets

---------------------------

Name: argocd-initial-admin-secret

Namespace: argocd

Data:

password : 2MoMeW30wSC9EraF

username : admin

---------------------------

Name: gitea-credential

Namespace: gitea

Data:

password : LI$T?o>N{-<|{^dm$eTg*gni1(2:Y0@q344yqQIS

username : giteaAdmin

---------------------------

Name: keycloak-config

Namespace: keycloak

Data:

KC_DB_PASSWORD : k3-1kgxxd/X2Cw//pX-uKMsmgWogEz5YGnb5

KC_DB_USERNAME : keycloak

KEYCLOAK_ADMIN_PASSWORD : zMSjv5eA0l/+0-MDAaaNe+rHRMrB2q0NssP-

POSTGRES_DB : keycloak

POSTGRES_PASSWORD : k3-1kgxxd/X2Cw//pX-uKMsmgWogEz5YGnb5

POSTGRES_USER : keycloak

USER_PASSWORD : Kd+0+/BqPRAvnLPZO-L2o/6DoBrzUeMsr29U

stl@ubuntu-vpn:~/git/mms/idp-temporary/basicdeployment$ GIT_SSL_NO_VERIFY=true git push

Username for 'https://cnoe.localtest.me:8443': giteaAdmin

Password for 'https://giteaAdmin@cnoe.localtest.me:8443':

Enumerating objects: 5, done.

Counting objects: 100% (5/5), done.

Delta compression using up to 8 threads

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 382 bytes | 382.00 KiB/s, done.

Total 3 (delta 1), reused 0 (delta 0), pack-reused 0

remote: . Processing 1 references

remote: Processed 1 references in total

To https://cnoe.localtest.me:8443/gitea/giteaAdmin/basicdeployment.git

69244d6..1269617 main -> main

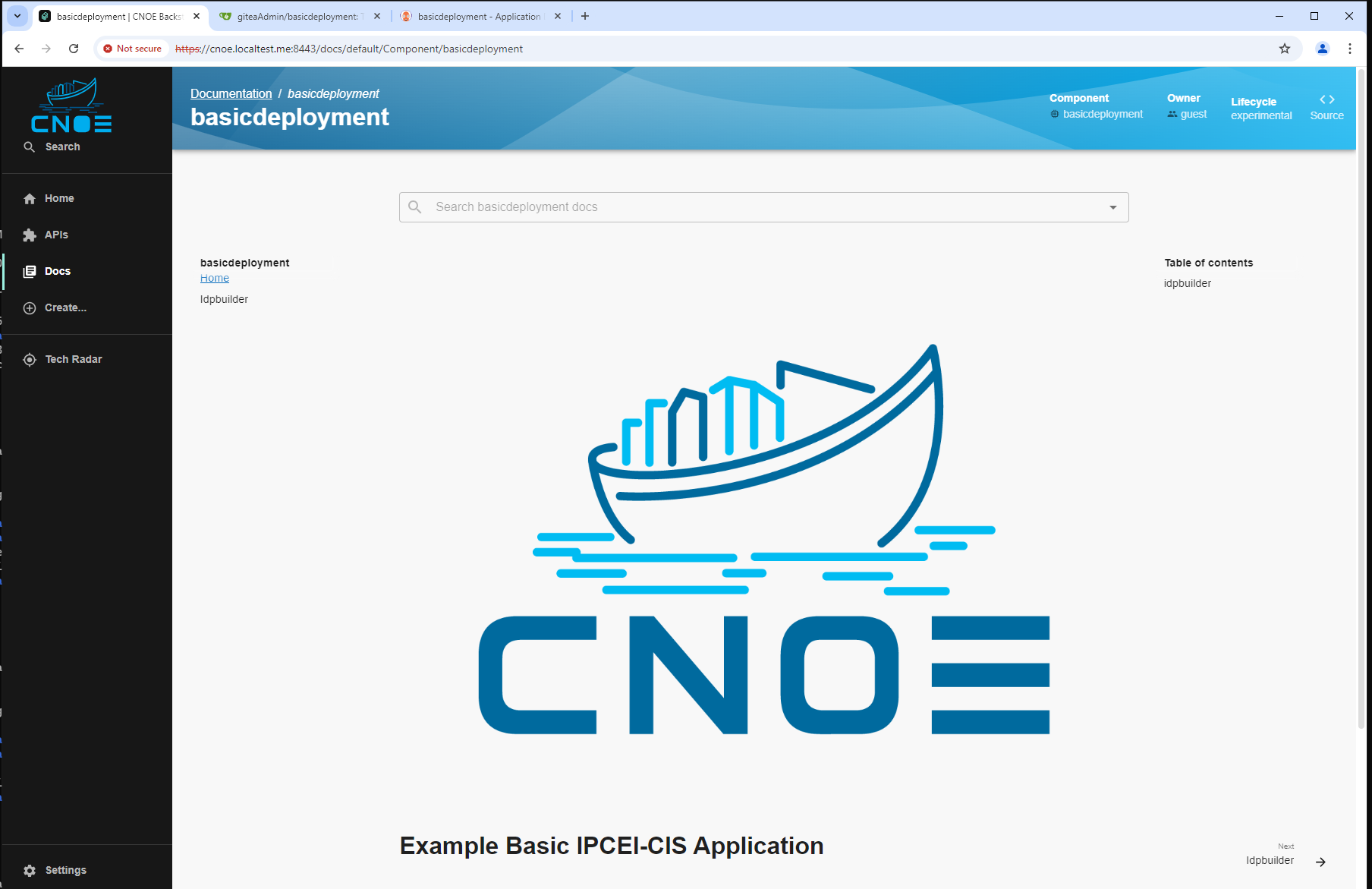

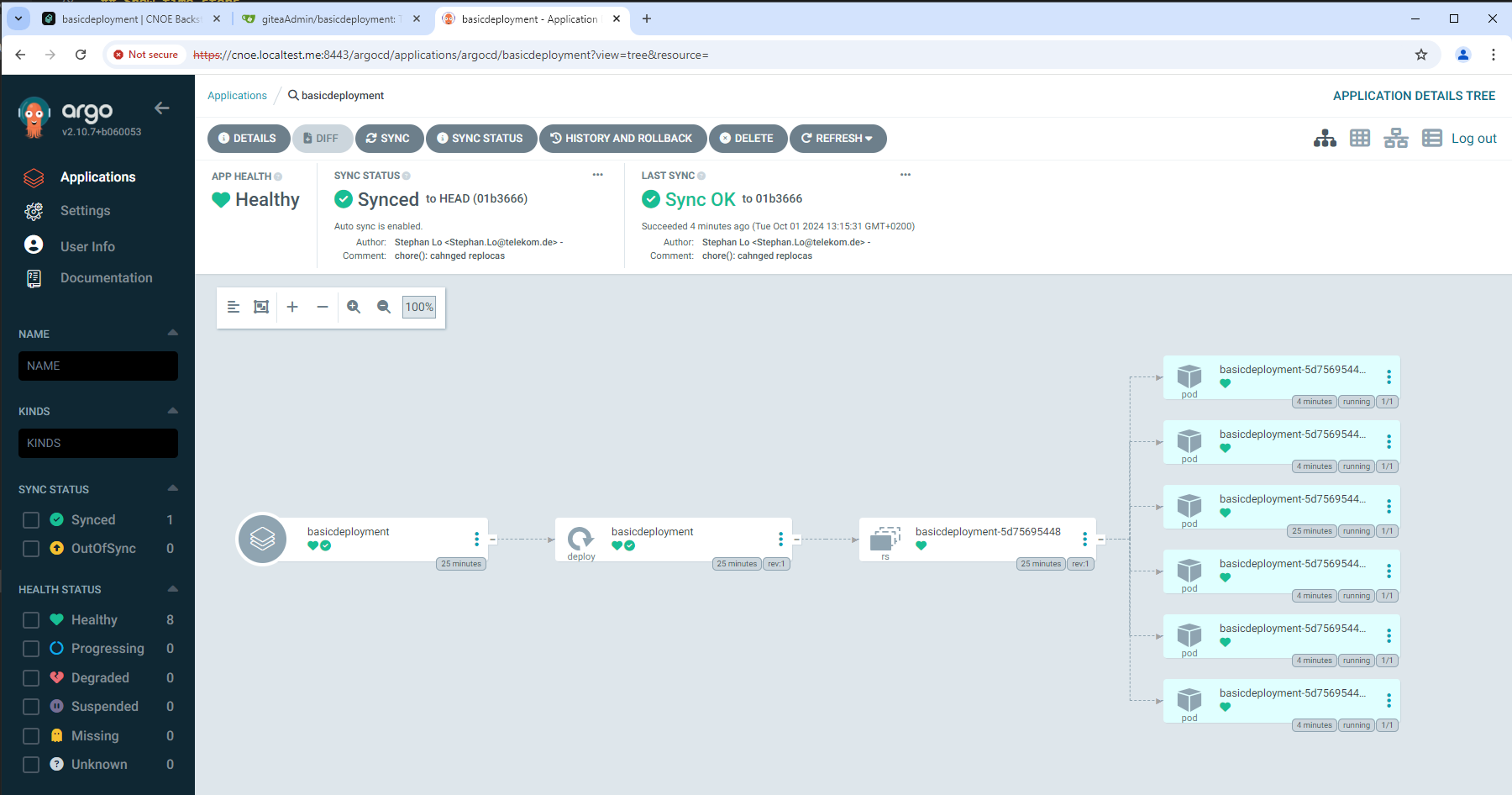

Wait for gitops magic: deployment into the ‘production’ cluster

Next wait a bit until Gitops does its magic and our ‘wanted’ state in the repo gets automatically deployed to the ‘production’ cluster:

What comes next?

The showtime of CNOE high level behaviour and usage scenarios is now finished. We setup an initial IDP and used a backstage golden path to init and deploy a simple application.

Last not least we want to sum up the whole way from Devops to ‘Frameworking’ (is this the correct wording???)

1.7 - Conclusio

Summary

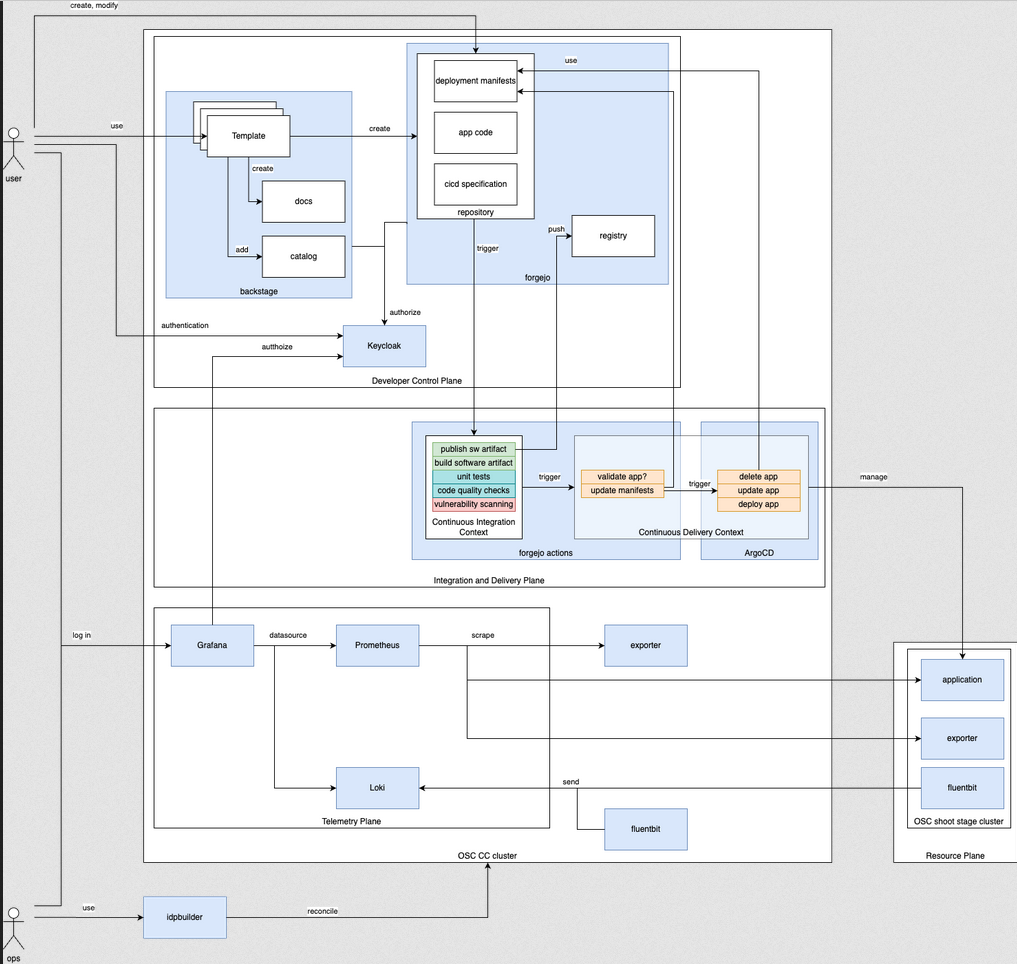

In the project ‘Edge Developer Framework’ we start with DevOps, set platforms on top to automate golden paths, and finally set ‘frameworks’ (aka Orchestrators’) on top to have declarative,automated and reconcilable platforms.

From Devops over Platform to Framework Engineering

We come along from a quite well known, but already complex discipline called ‘Platform Engineering’, which is the next level devops. On top of these two domains we now have ‘Framework Engineering’, i.e. buildung dynamic, orchestrated and reconciling platforms:

| Classic Platform engineering | New: Framework Orchestration on top of Platforms | Your job: Framework Engineer |

|---|---|---|

|  |  |

The whole picture of engineering

So always keep in mind that as as ‘Framework Engineer’ you

- include the skills of a platform and a devops engineer,

- you do Framework, Platform and Devops Engineering at the same time

- and your results have impact on Frameworks, Platforms and Devops tools, layers, processes.

The following diamond is illustrating this: on top is you, on the bottom is our baseline ‘DevOps’

1.7.1 -

// how to create/export c4 images: // see also https://likec4.dev/tooling/cli/

docker run -it –rm –name likec4 –user node -v $PWD:/app node bash npm install likec4 exit

docker commit likec4 likec4 docker run -it –rm –user node -v $PWD:/app -p 5173:5173 likec4 bash

// as root npx playwright install-deps npx playwright install

npm install likec4

// render node@e20899c8046f:/app/content/en/docs/project/onboarding$ ./node_modules/.bin/likec4 export png -o ./images .

1.8 -

Storyline

- We have the ‘Developer Framework’

- We think the solution for DF is ‘Platforming’ (Digital Platforms)

- The next evolution after DevOps

- Gartner predicts 80% of SWE companies to have platforms in 2026

- Platforms have a history since roundabout 2019

- CNCF has a working group which created capabilities and a maturity model

- Platforms evolve - nowadys there are Platform Orchestrators

- Humanitec set up a Reference Architecture

- There is this ‘Orchestrator’ thing - declaratively describe, customize and change platforms!

- Mapping our assumptions to solutions

- CNOE is a hot candidate to help and fulfill our platform building

- CNOE aims to embrace change and customization!

- Showtime CNOE

Challenges

- Don’t miss to further investigate and truely understand DF needs

- Don’t miss to further investigate and truely understand Platform capabilities

- Don’t miss to further investigate and truely understand Platform orchestration

- Don’t miss to further investigate and truely understand CNOE solution

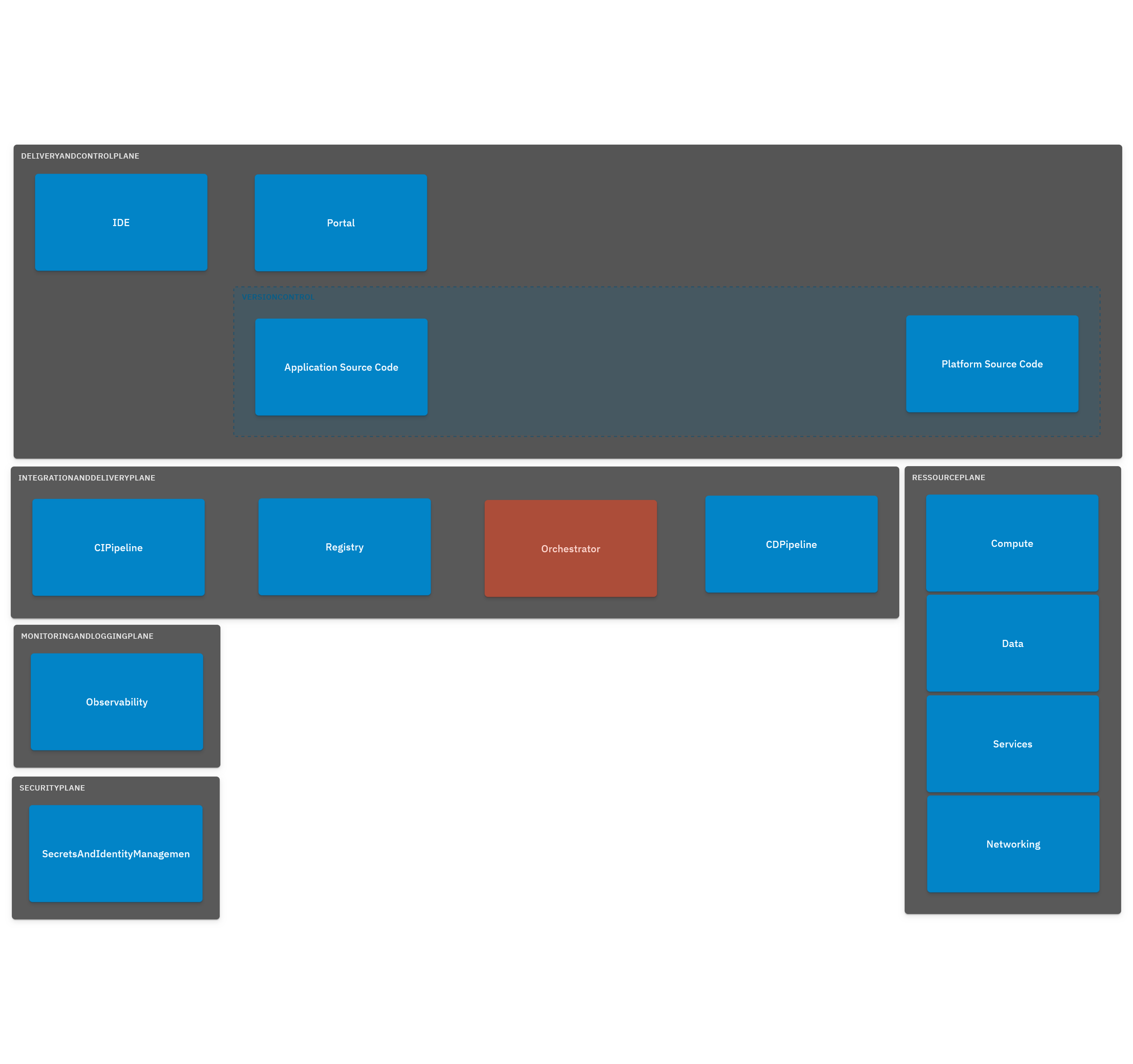

Architecture

2 - Bootstrapping Infrastructure

In order to be able to do useful work, we do need a number of applications right away. We’re deploying these manually so we have the necessary basis for our work. Once the framework has been developed far enough, we will deploy this infrastructure with the framework itself.

2.1 - Backup of the Bootstrapping Cluster

Velero

We are using Velero for backup and restore of the deployed applications.

Installing Velero Tools

To manage a Velero install in a cluster, you need to have Velero command line tools installed locally. Please follow the instructions for Basic Install.

Setting Up Velero For a Cluster

Installing and configuring Velero for a cluster can be accomplished with the CLI.

- Create a file with the credentials for the S3 compatible bucket that is storing the backups, for example

credentials.ini.

[default]

aws_access_key_id = "Access Key Value"

aws_secret_access_key = "Secret Key Value"

- Install Velero in the cluster

velero install \

--provider aws \

--plugins velero/velero-plugin-for-aws:v1.2.1 \

--bucket osc-backup \

--secret-file ./credentials.ini \

--use-volume-snapshots=false \

--use-node-agent=true \

--backup-location-config region=minio,s3ForcePathStyle="true",s3Url=https://obs.eu-de.otc.t-systems.com

- Delete

credentials.ini, it is not needed anymore (a secret has been created in the cluster). - Create a schedule to back up the relevant resources in the cluster:

velero schedule create devfw-bootstrap --schedule="23 */2 * * *" "--include-namespaces=forgejo"

Working with Velero

You can now use Velero to create backups, restore them, or perform other operations. Please refer to the Velero Documentation.

To list all currently available backups:

velero backup get

Setting up a Service Account for Access to the OTC Object Storage Bucket

We are using the S3-compatible Open Telekom Cloud Object Storage service to make available some storage for the backups. We picked OTC specifically because we’re not using it for anything else, so no matter what catastrophy we create in Open Sovereign Cloud, the backups should be safe.

Create an Object Storage Service Bucket

- Log in to the OTC Console with the correct tenant.

- Navigate to Object Storage Service.

- Click Create Bucket in the upper right hand corner. Give your bucket a name. No further configuration should be necessary.

Create a Service User to Access the Bucket

- Log in to the OTC Console with the correct tenant.

- Navigate to Identity and Access Management.

- Navigate to User Groups, and click Create User Group in the upper right hand corner.

- Enter a suitable name (“OSC Cloud Backup”) and click OK.

- In the group list, locate the group just created and click its name.

- Click Authorize to add the necessary roles. Enter “OBS” in the search box to filter for Object Storage roles.

- Select “OBS OperateAccess”, if there are two roles, select them both.

- 2024-10-15 Also select the “OBS Administrator” role. It is unclear why the “OBS OperateAccess” role is not sufficient, but without the admin role, the service user will not have write access to the bucket.

- Click Next to save the roles, then click OK to confirm, then click Finish.

- Navigate to Users, and click Create User in the upper right hand corner.

- Give the user a sensible name (“ipcei-cis-devfw-osc-backups”).

- Disable Management console access

- Enable Access key, disable Password, disable Login protection.

- Click Next

- Pick the group created earlier.

- Download the access key when prompted.

The access key is a CSV file with the Access Key and the Secret Key listed in the second line.

3 - Plan in 2024

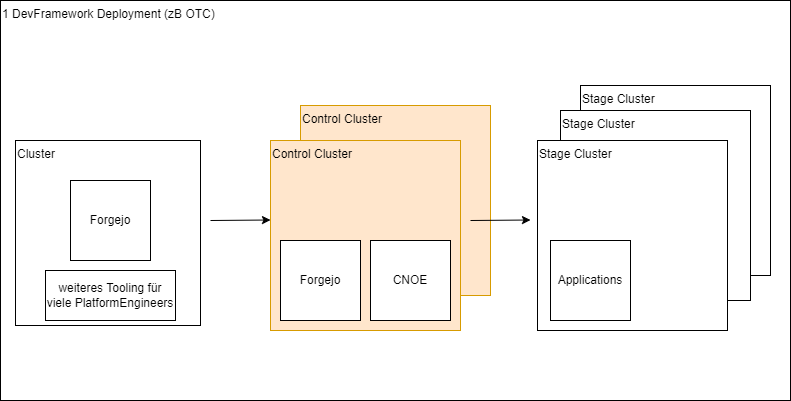

First Blue Print in 2024

Our first architectural blue print for the IPCEI-CIS Developer Framework derives from Humanitecs Reference Architecture, see links in Blog

C4 Model

(sources see in ./ressources/architecture-c4)

How to use: install C4lite VSC exension and/or C4lite cli - then open *.c4 files in ./ressources/architecture-c4

First system landscape C4 model:

In Confluence

https://confluence.telekom-mms.com/display/IPCEICIS/Architecture

Dimensionierung Cloud für initiales DevFramework

28.08.24, Stefan Bethke, Florian Fürstenberg, Stephan Lo

- zuerst viele DevFrameworkPlatformEngineers arbeiten lokal, mit zentralem Deployment nach OTC in einen/max zwei Control-Cluster

- wir gehen anfangs von ca. 5 clustern aus

- jeder cluster mit 3 Knoten/VM (in drei AvailabilityZones)

- pro VM 4 CPU, 16 GB Ram, 50 GB Storage read/write once, PVCs ‘ohne limit’

- public IPs, plus Loadbalancer

- Keycloak vorhanden

- Wildcard Domain ?? –> Eher ja

Next Steps: (Vorschlag: in den nächsten 2 Wochen)

- Florian spezifiziert an Tobias

- Tobias stellt bereit, kubeconfig kommt an uns

- wir deployen

3.1 - Workstreams

This page is WiP (23.8.2024).

Continued discussion on 29th Aug 24

- idea: Top down mit SAFe, Value Streams

- paralell dazu bottom up (die zB aus den technisch/operativen Tätigkeietn entstehen)

- Scrum Master?

- Claim: Self Service im Onboarding (BTW, genau das Versprechen vom Developer Framework)

- Org-Struktur: Scrum of Scrum (?), max. 8,9 Menschen

Stefan and Stephan try to solve the mission ‘wir wollen losmachen’.

Solution Idea:

- First we define a rough overall structure (see ‘streams’) and propose some initial activities (like user stories) within them.

- Next we work in iterative steps and produce iteratively progress and knowledge and outcomes in these activities.

- Next the whole team decides which are the next valuable steps

Overall Structure: Streams

We discovered three streams in the first project steps (see also blog):

- Research, Fundamentals, Architecture

- POCs (Applications, Platform-variants, …)

- Deployment, production-lifecycle

#

## Stream 'Fundamentals'

### [Platform-Definition](./fundamentals/platform-definition/)

### [CI/CD Definition](./fundamentals/cicd-definition/)

## Stream 'POC'

### [CNOE](./pocs/cnoe/)

### [Kratix](./pocs/kratix/)

### [SIA Asset](./pocs/sia-asset/)

### Backstage

### Telemetry

## Stream 'Deployment'

### [Forgejo](./deployment/forgejo/)

DoR - Definition of Ready

Bevor eine Aufgabe umgesetzt wird, muss ein Design vorhanden sein.

Bezüglich der ‘Bebauung’ von Plaztform-Komponenten gilt für das Design:

- Die Zielstellung der Komponenet muss erfasst sein

3.1.1 - Fundamentals

References

Fowler / Thoughtworks

nice article about platform orchestration automation (introducing BACK stack)

3.1.1.1 - Activity 'Platform Definition'

Summary

Das theoretische Fundament unserer Plattform-Architektur soll begründet und weitere wesentliche Erfahrungen anderer Player durch Recherche erhoben werden, so dass unser aktuelles Zielbild abgesichert ist.

Rationale

Wir starten gerade auf der Bais des Referenzmodells zu Platform-Engineering von Gartner und Huamitec. Es gibt viele weitere Grundlagen und Entwicklungen zu Platform Engineering.

Task

- Zusammentragen, wer was federführend macht in der Plattform Domäne, vgl. auch Linkliste im Blog

- Welche trendsettenden Plattformen gibt es?

- Beschreiben der Referenzarchitektur in unserem Sinn

- Begriffsbildung, Glossar erstellen (zB Stacks oder Ressource-Bundles)

- Architekturen erstellen mit Control Planes, Seedern, Targets, etc. die mal zusammenliegen, mal nicht

- Beschreibung der Wirkungsweise der Platform-Orchestration (Score, Kubevela, DSL, … und Controlern hierzu) in verscheidenen Platform-Implemnetierungen

- Ableiten, wie sich daraus unser Zielbild und Strategie ergeben.

- Argumentation für unseren Weg zusammentragen.

- Best Practices und wichtige Tipps und Erfahrungen zusammentragen.

3.1.1.2 - Activity 'CI/CD Definition'

Summary

Der Produktionsprozess für Applikationen soll im Kontext von Gitops und Plattformen entworfen und mit einigen Workflowsystemen im Leerlauf implementiert werden.

Rationale

In Gitops basierten Plattformen (Anm.: wie es zB. CNOE und Humanitec mit ArgoCD sind) trifft das klassische Verständnis von Pipelining mit finalem Pushing des fertigen Builds auf die Target-Plattform nicht mehr zu.

D.h. in diesem fall is Argo CD = Continuous Delivery = Pulling des desired state auf die Target plattform. Eine pipeline hat hier keien Rechte mehr, single source of truth ist das ‘Control-Git’.

D.h. es stellen sich zwei Fragen:

- Wie sieht der adaptierte Workflow aus, der die ‘Single Source of Truth’ im ‘Control-Git’ definiert? Was ist das gewünschte korrekte Wording? Was bedeuen CI und CD in diesem (neuen) Kontext ? Auf welchen Environmants laufen Steps (zB Funktionstest), die eben nicht mehr auf einer gitops-kontrollierten Stage laufen?

- Wie sieht der Workflow aus für ‘Events’, die nach dem CI/CD in die single source of truth einfliessen? ZB. abnahmen auf einer Abnahme Stage, oder Integrationsprobleme auf einer test Stage

Task

- Es sollen existierende, typische Pipelines hergenommen werden und auf die oben skizzierten Fragestellungen hin untersucht und angepasst werden.

- In lokalen Demo-Systemen (mit oder ohne CNOE aufgesetzt) sollen die Pipeline entwürfe dummyhaft dargestellt werden und luffähig sein.

- Für den POC sollen Workflow-Systeme wie Dagger, Argo Workflow, Flux, Forgejo Actions zum Einsatz kommen.

Further ideas for POSs

- see sample flows in https://docs.kubefirst.io/

3.1.2.1 - Activity 'CNOE Investigation'

Summary

Als designiertes Basis-Tool des Developer Frameworks sollen die Verwendung und die Möglichkeiten von CNOE zur Erweiterung analysiert werden.

Rationale

CNOE ist das designierte Werkzeug zur Beschreibung und Ausspielung des Developer Frameworks. Dieses Werkzeug gilt es zu erlernen, zu beschreiben und weiterzuentwickeln. Insbesondere der Metacharkter des ‘Software zur Bereitstellung von Bereitstellungssoftware für Software’, d.h. der unterschiedlichen Ebenen für unterschiedliche Use Cases und Akteure soll klar verständlich und dokumentiert werden. Siehe hierzu auch das Webinar von Huamnitec und die Diskussion zu unterschiedlichen Bereitstellungsmethoden eines RedisCaches.

Task

- CNOE deklarativ in lokalem und ggf. vorhandenem Cloud-Umfeld startbar machen

- Architektur von COE beschreiben, wesentliche Wording finden (zB Orchestrator, Stacks, Kompoennten-Deklaration, …)

- Tests / validations durchführen

- eigene ‘Stacks erstellen’ (auch in Zusammenarbeit mit Applikations-POCs, zB. SIA und Telemetrie)

- Wording und Architektur von Activity ‘Platform-Definition’ beachten und challengen

- Alles, was startbar und lauffähig ist, soll möglichst vollautomatisch verscriptet und git dokumentiert in einem Repo liegen

Issues / Ideas / Improvements

- k3d anstatt kind

- kind: ggf. issue mit kindnet, ersetzen durch Cilium

3.1.2.2 - Activity 'SIA Asset Golden Path Development'

Summary

Implementierung eines Golden Paths in einem CNOE/Backstage Stack für das existierende ‘Composable SIA (Semasuite Integrator Asset)’.

Rationale

Das SIA Asset ist eine Entwicklung des PC DC - es ist eine Composable Application die einen OnlineShop um die Möglichkeit der FAX-Bestellung erweitert. Die Entwicklung begann im Januar 2024 mit einem Team von drei Menschen, davon zwei Nearshore, und hatte die typischen ersten Stufen - erst Applikationscode ohne Integration, dann lokale gemockte Integration, dann lokale echte Integration, dann Integration auf einer Integrationsumgebung, dann Produktion. Jedesmal bei Erklimmung der nächsten Stufe mit Erstellung von individuellem Build und Deploymentcode und Abwägungen, wie aufwändig nachhaltig und wie benutzbar das jeweilige Konstrukt sein sollte. Ein CI/CD gibt es nicht, zu großer Aufwand für so ein kleines Projekt.

Die Erwartung ist, dass so ein Projekt als ‘Golden Path’ abbildbar ist und die Entwicklung enorm bescheunigt.

Task

- SIA ‘auf die Platform heben’ (was immer das bedeutet)

- Den Build-Code von SIA (die Applikation und einen Shop) in einen CI/CD Workflow transformieren

References

Scenario (see IPCEICIS-363)

graph TB

Developer[fa:fa-user developer]

PlatformDeliveryAndControlPlaneIDE[IDE]

subgraph LocalBox["localBox"]

LocalBox.EDF[Platform]

LocalBox.Local[local]

end

subgraph CloudGroup["cloudGroup"]

CloudGroup.Test[test]

CloudGroup.Prod[prod]

end

Developer -. "use preferred IDE as local code editing, building, testing, syncing tool" .-> PlatformDeliveryAndControlPlaneIDE

Developer -. "manage (in Developer Portal)" .-> LocalBox.EDF

PlatformDeliveryAndControlPlaneIDE -. "provide "code"" .-> LocalBox.EDF

LocalBox.EDF -. "provision" .-> LocalBox.Local

LocalBox.EDF -. "provision" .-> CloudGroup.Prod

LocalBox.EDF -. "provision" .-> CloudGroup.Test3.1.2.3 - Activity 'Kratix Investigation'

Summary

Ist Kratix eine valide Alternative zu CNOE?

Rationale

Task

Issues / Ideas / Improvements

3.1.3 - Deployment

Mantra:

- Everything as Code.

- Cloud natively deployable everywhere.

- Ramping up and tearing down oftenly is a no-brainer.

- Especially locally (whereby ’locally’ means ‘under my own control’)

Entwurf (28.8.24)

3.1.3.1 - Activity 'Forgejo'

WiP Ich (Stephan) schreibe mal schnell einige Stichworte, was ich so von Stefan gehört habe:

Summary

tbd

Rationale

- …

- Design: Deployment Architecture (Platform Code vs. Application Code)

- Design: Integration in Developer Workflow

- …

Task

- …

- Runner

- Tenants

- User Management

- …

- tbc

Issues

28.08.24, Forgejo in OTC (Planung Stefan, Florian, Stephan)

- STBE deployed mit Helm in bereitgestelltes OTC-Kubernetes

- erstmal interne User Datenbank nutzen

- dann ggf. OIDC mit vorhandenem Keycloak in der OTC anbinden

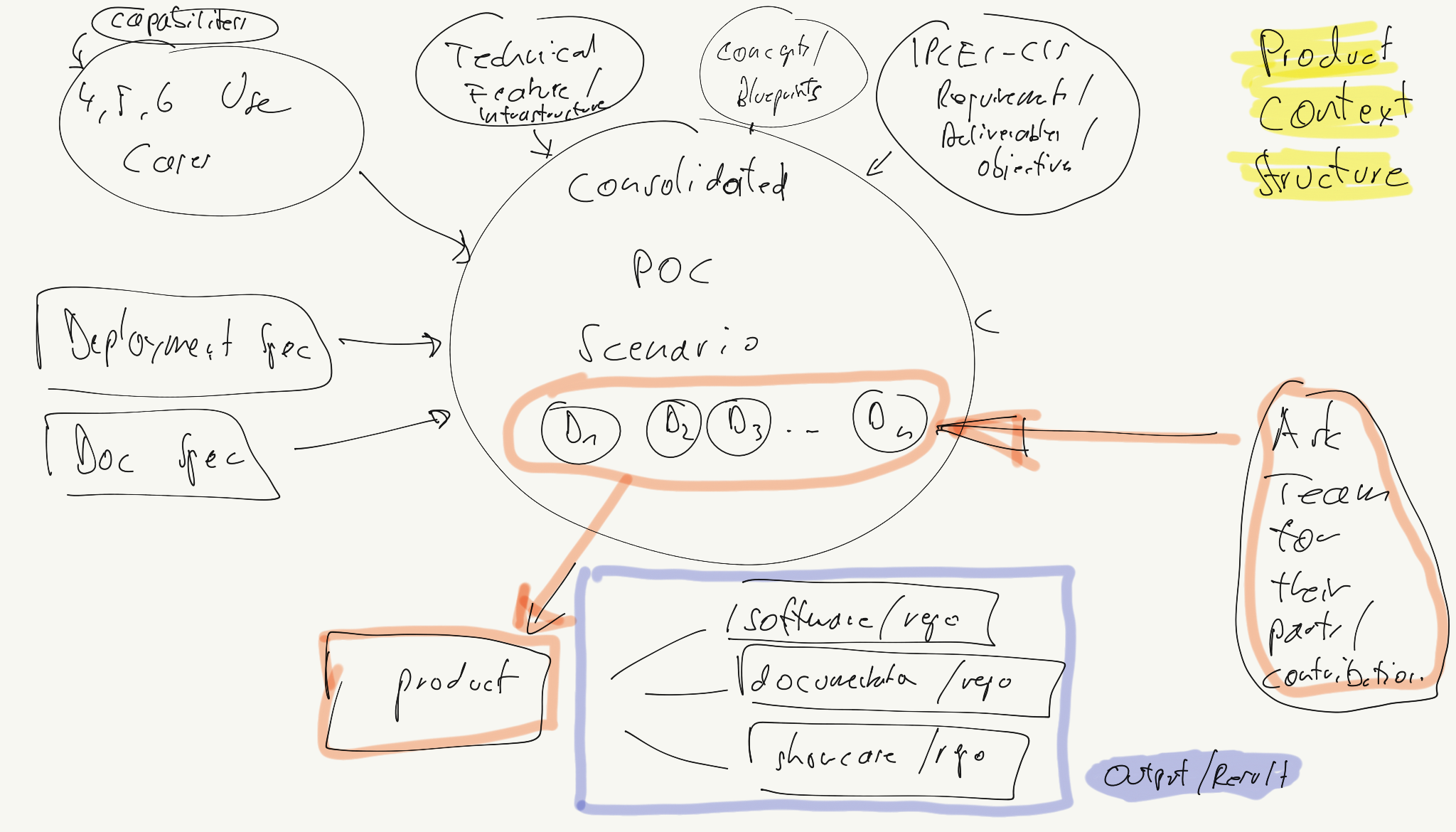

3.2 - PoC Structure

Presented and approved on tuesday, 26.11.2024 within the team:

The use cases/application lifecycle and deployment flow is drawn here: https://confluence.telekom-mms.com/display/IPCEICIS/Proof+of+Concept+2024

4 - Stakeholder Workshop Intro

Edge Developer Framework Solution Overview

This section is derived from conceptual-onboarding-intro

- As presented in the introduction: We have the ‘Edge Developer Framework’.

In short the mission is:- Build a european edge cloud IPCEI-CIS

- which contains typical layers infrastructure, platform, application

- and on top has a new layer ‘developer platform’

- which delivers a cutting edge developer experience and enables easy deploying of applications onto the IPCEI-CIS

- We think the solution for EDF is in relation to ‘Platforming’ (Digital Platforms)

- The next evolution after DevOps

- Gartner predicts 80% of SWE companies to have platforms in 2026

- Platforms have a history since roundabout 2019

- CNCF has a working group which created capabilities and a maturity model

- Platforms evolve - nowadys there are Platform Orchestrators

- Humanitec set up a Reference Architecture

- There is this ‘Orchestrator’ thing - declaratively describe, customize and change platforms!

- Mapping our assumptions to the CNOE solution

- CNOE is a hot candidate to help and fulfill our platform building

- CNOE aims to embrace change and customization!

2. Platforming as the result of DevOps

DevOps since 2010

- from ’left’ to ‘right’ - plan to monitor

- ’leftshift’

- –> turns out to be a right shift for developers with cognitive overload

- ‘DevOps isd dead’ -> we need Platforms

Platforming to provide ‘golden paths’

don’t mix up ‘golden paths’ with pipelines or CI/CD

Short list of platform using companies

As Gartner states: “By 2026, 80% of software engineering organizations will establish platform teams as internal providers of reusable services, components and tools for application delivery.”

Here is a small list of companies alrteady using IDPs:

- Spotify

- Airbnb

- Zalando

- Uber

- Netflix

- Salesforce

- Booking.com

- Amazon

- Autodesk

- Adobe

- Cisco

- …

3 Platform building by ‘Orchestrating’

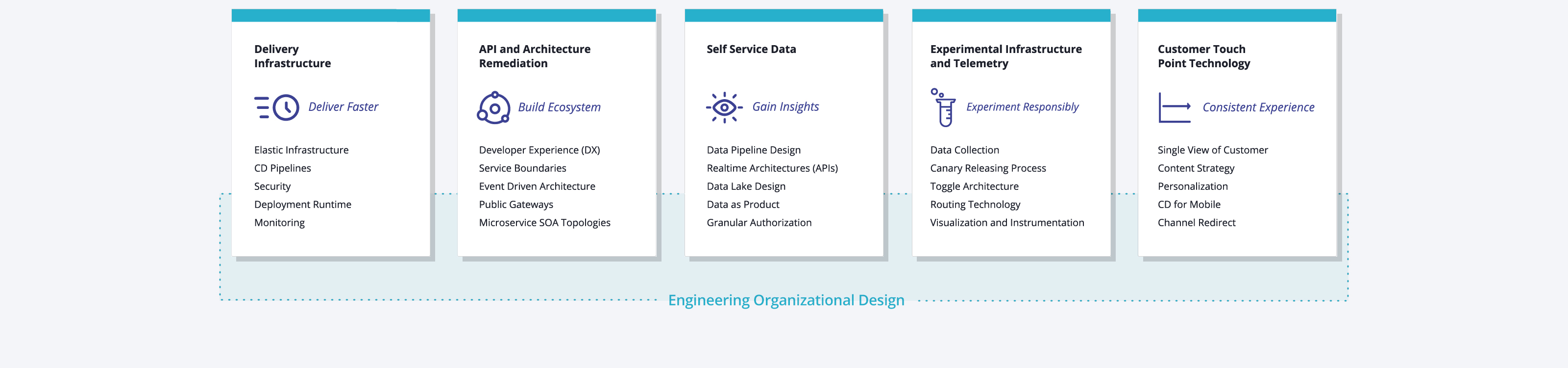

So the goal of platforming is to build a ‘digital platform’ which fits this architecture (Ref. in German):

Digital Platform blue print: Reference Architecture

The blue print for such a platform is given by the reference architecture from Humanitec:

Digital Platform builder: CNOE

Since 2023 this is done by ‘orchestrating’ such platforms. One orchestrator is the CNOE solution, which highly inspired our approach.

In our orchestartion engine we think in ‘stacks’ of ‘packages’ containing platform components.

4 Sticking all together: Our current platform orchestrating generated platform

Sticking together the platforming orchestration concept, the reference architecture and the CNOE stack solution, this is our current running platform minimum viable product.

This will now be presented! Enjoy!

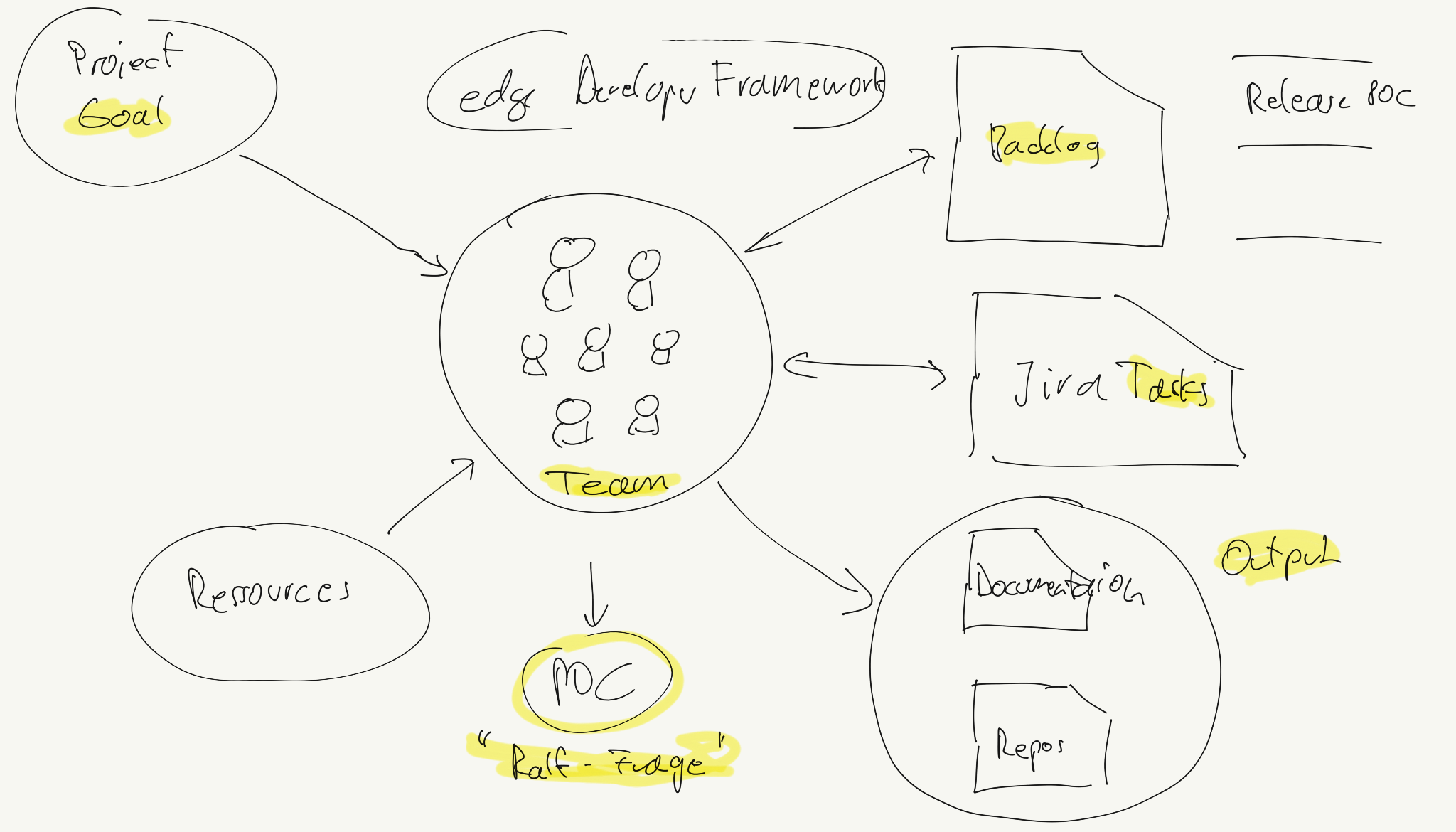

5 - Team and Work Structure

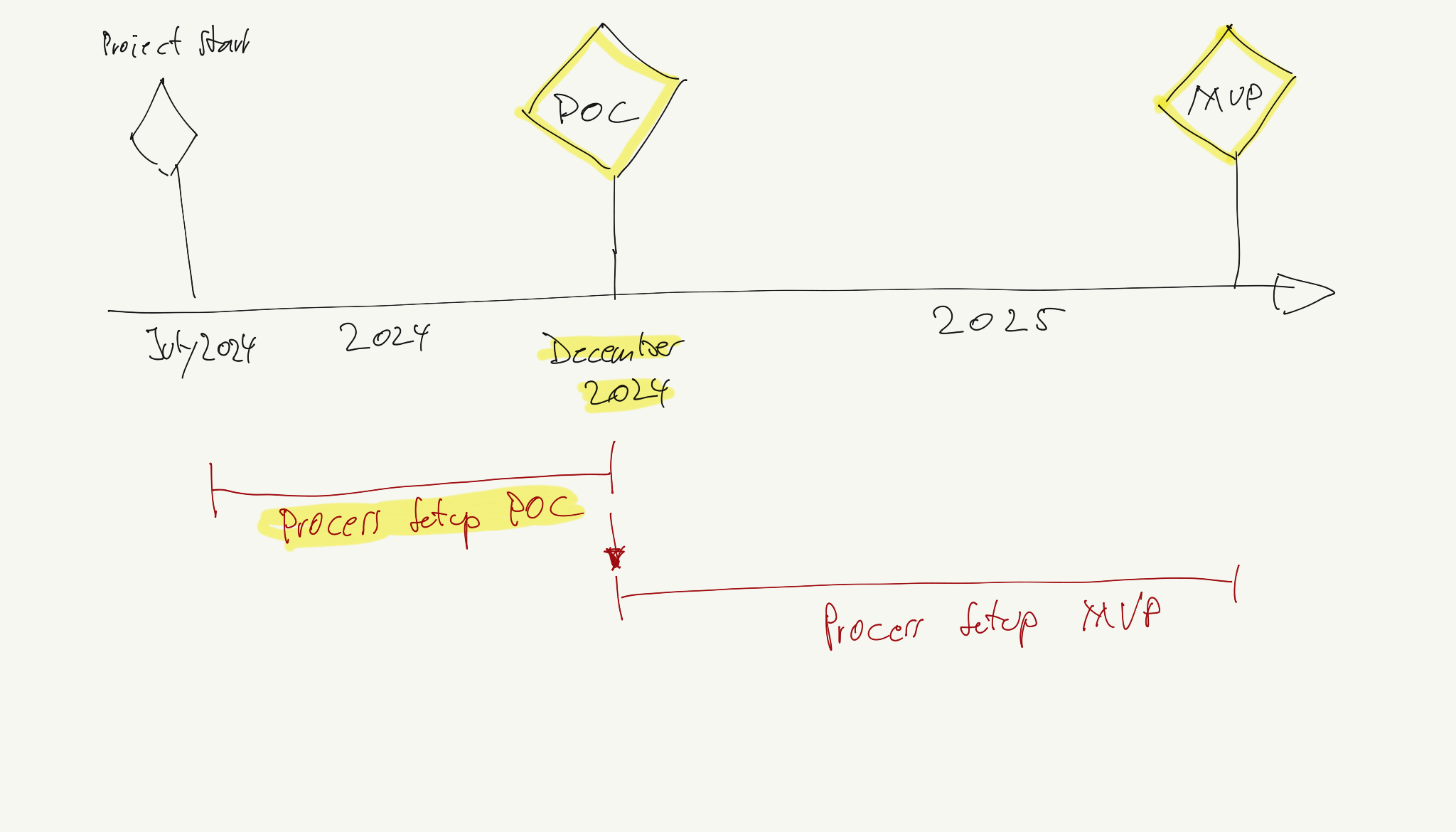

This document describes a proposal to set up a team work structure to primarily get the POC successfully delivered. Later on we will adjust and refine the process to fit for the MVP.

Introduction

Rationale

We currently face the following challenges in our process:

- missing team alignment on PoC-Output over all components

- Action: team is committed to clearly defined PoC capabilities

- Action: every each team-member is aware of individual and common work to be done (backlog) to achieve PoC

- missing concept for repository (process, structure,

- Action: the PoC has a robust repository concept up & running

- Action: repo concept is applicable for other repositorys as well (esp. documentation repo)

General working context

A project goal drives us as a team to create valuable product output.

The backlog contains the product specification which instructs us by working in tasks with the help and usage of ressources (like git, 3rd party code and knowledge and so on).

Goal, Backlog, Tasks and Output must be in a well-defined context, such that the team can be productive.

POC and MVP working context

This document has two targets: POC and MVP.

Today is mid november 2024 and we need to package our project results created since july 2024 to deliver the POC product.

Think of the agenda’s goal like this: Imagine Ralf the big sponsor passes by and sees ’edge Developer Framework’ somewhere on your screen. Then he asks: ‘Hey cool, you are one of these famous platform guys?! I always wanted to get a demo how this framework looks like!’

What are you going to show him?

Team and Work Structure (POC first, MVP later)

In the following we will look at the work structure proposal, primarily for the POC, but reusable for any other release or the MVP

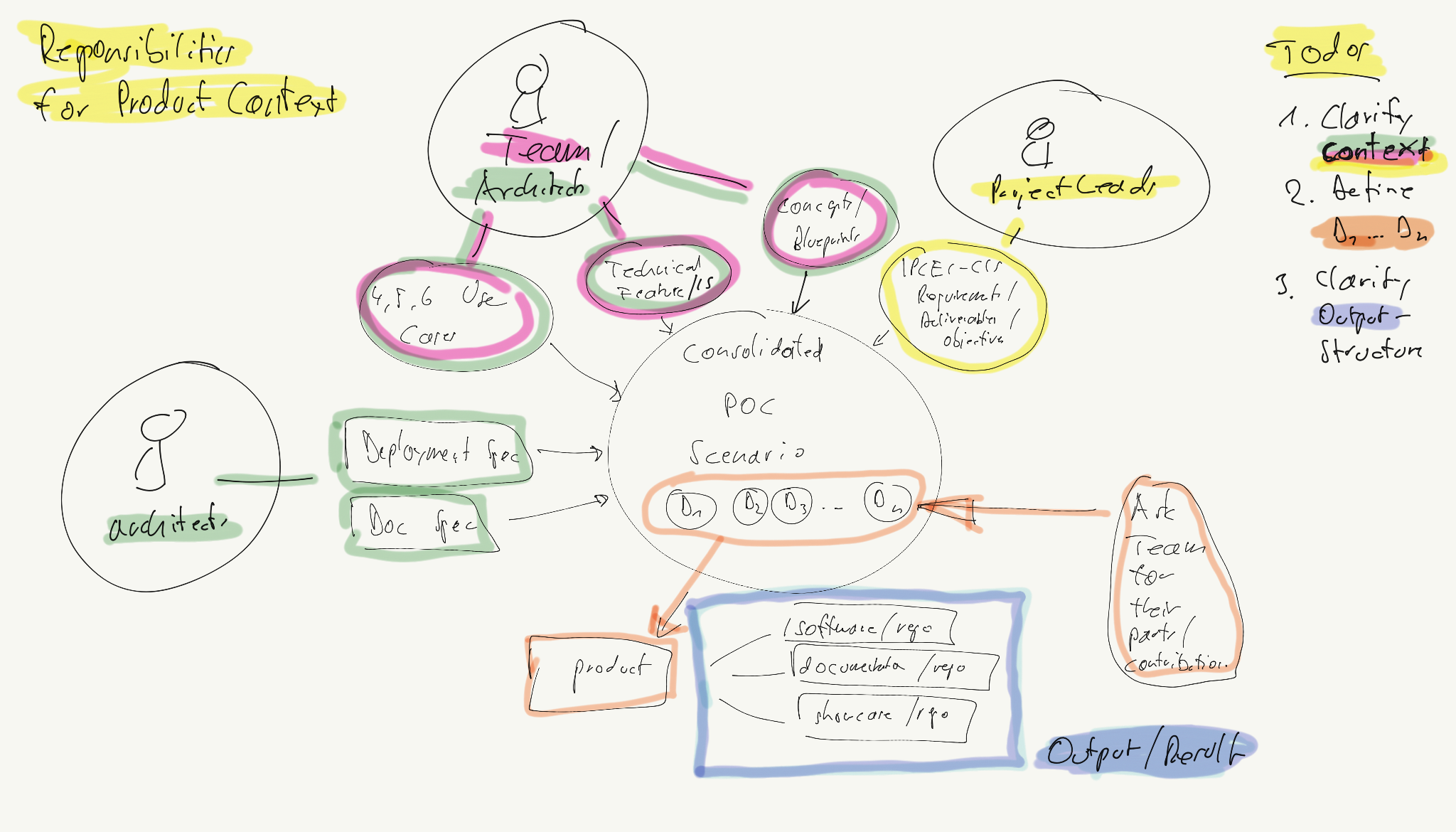

Consolidated POC (or any release later)

Responsibilities to reliably specify the deliverables

Todos

- SHOULD: Clarify context (arch, team, leads)

- MUST: Define Deliverables (arch, team) (Hint: Deleiverables could be seen 1:1 as use cases - not sure about that right now)

- MUST: Define Output structure (arch, leads)

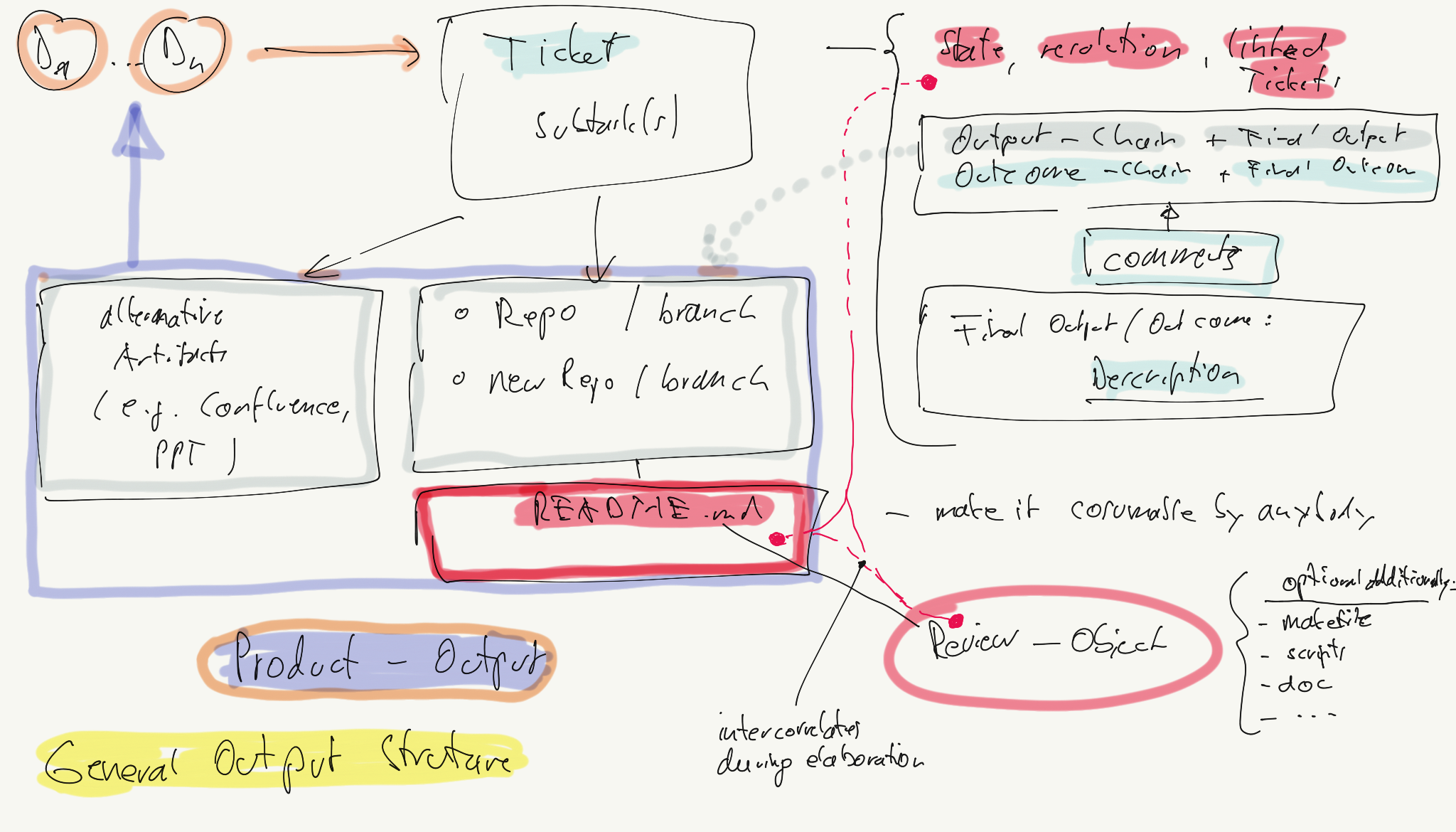

Process (General): from deliverables to output (POC first, MVP later)

Most important in the process are:

- traces from tickets to outputs (as the clue to understand and control what is where)

- README.md (as the clue how to use the output)

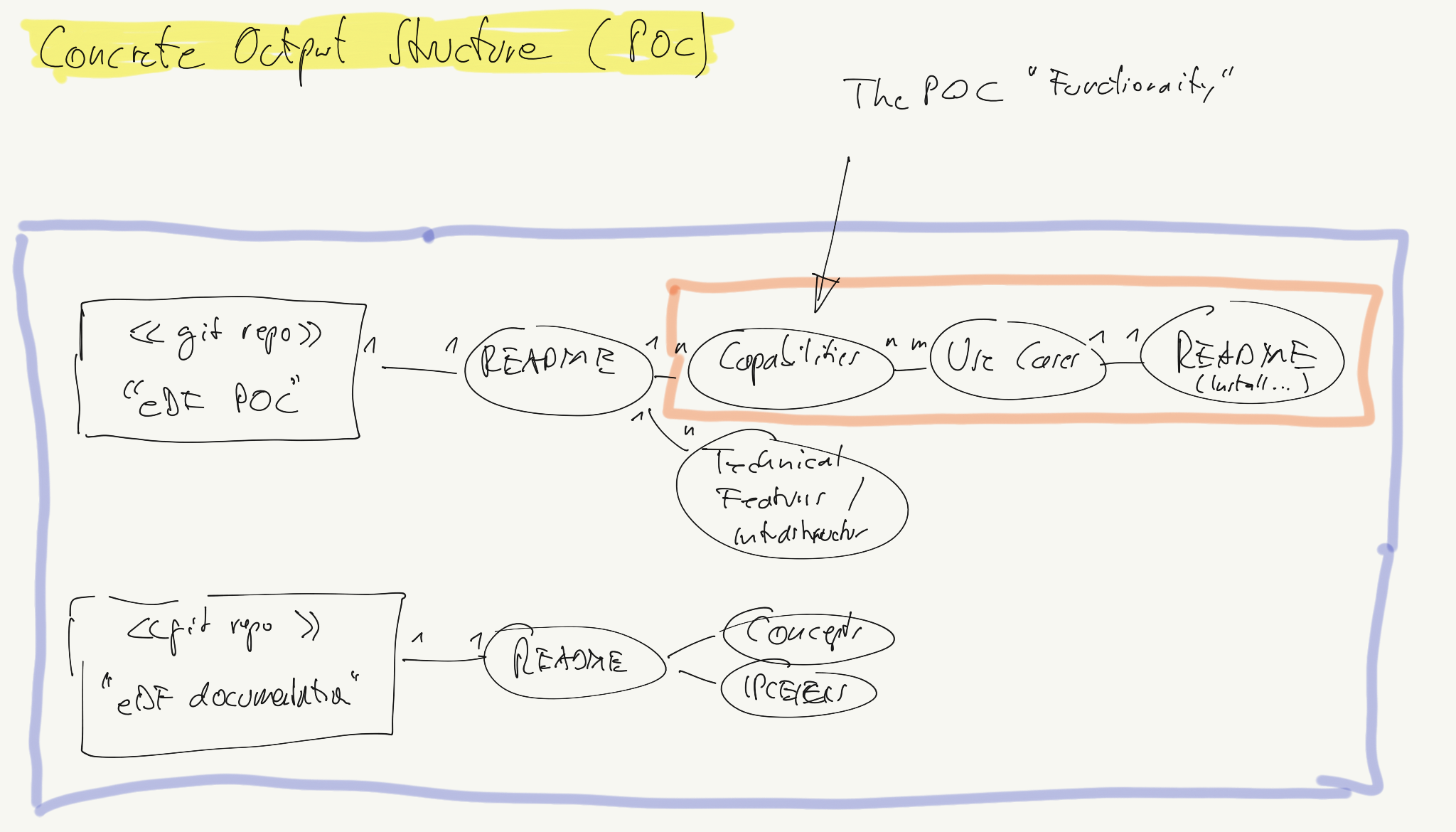

Output Structure POC

Most important in the POC structure are:

- one repo which is the product

- a README which maps project goals to the repo content

- the content consists of capabilities

- capabilities are shown (‘prooven’) by use cases

- the use cases are described in the deliverables

Glossary

- README: user manual and storybook

- Outcome: like resolution, but more verbose and detailled (especially when resolution was ‘Done’), so that state changes are easily recognisable

Work Structure Guidelines (POC first, MVP later)

Structure

- each task and/or user story has at least a branch in an existing repo or a new, dedicated task repo

recommended: multi-repo over monorepo

- each repo has a main and development branch. development is the intgration line

- pull requests are used to merge work outputs to the integration line

- optional (my be too cumbersome): each PR should be reflected as comment in jira

Workflow (in any task / user story)

- when output comes in own repo:

git init–> always create as fast as possible a new repo - commit early and oftenly

- comments on output and outcome when where is new work done. this could typically correlate to a pull request, see above

Definition of Done

- Jira: there is a final comment summarizimg the outcome (in a bit more verbose from than just the ‘resolution’ of the ticket) and the main outputs. This may typically be a link to the commit and/or pull request of the final repo state

- Git/Repo: there is a README.md in the root of the repo. It summarizes in a typical Gihub-manner how to use the repo, so that it does what it is intended to do and reveals all the bells and whistles of the repo to the consumer. If the README doesn’t lead to the usable and recognizable added value the work is not done!

Review

- Before a ticket gets finished (not defined yet which jira-state this is) there must be a review by a second team member

- the reviewing person may review whatever they want, but must at least check the README

Out of scope (for now)

The following topics are optional and do not need an agreement at the moment:

- Commit message syntax

Recommendation: at least ‘WiP’ would be good if the state is experimental

- branch permissions

- branch clean up policies

- squashing when merging into the integration line

- CI

- Tech blogs / gists

- Changelogs

Integration of Jira with Forgejo (compare to https://github.com/atlassian/github-for-jira)

- Jira -> Forgejo: Create Branch

- Forgejo -> Jira:

- commit

- PR

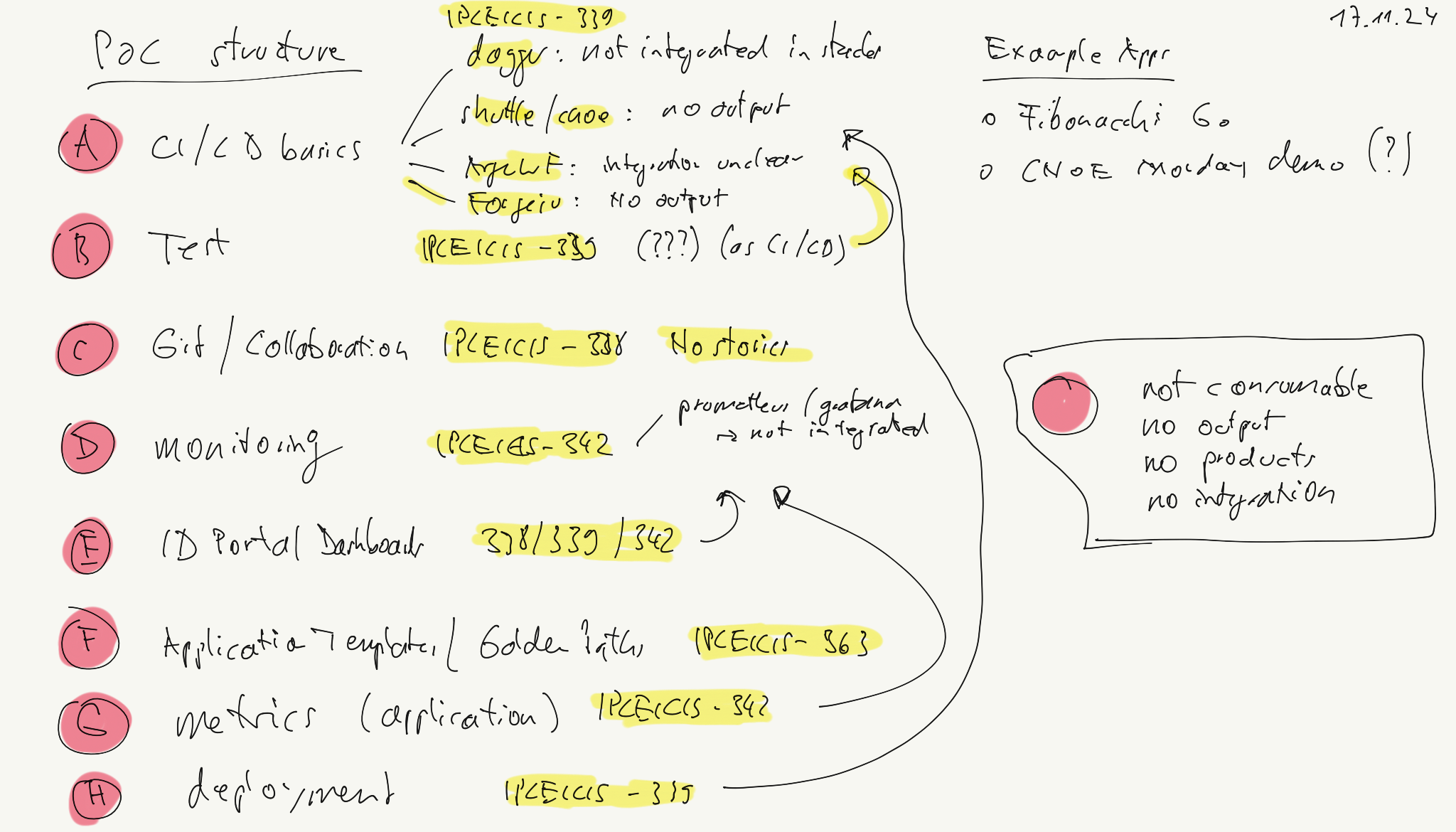

Status of POC Capabilities

The following table lists an analysis of the status of the ‘Funcionality validation’ of the POC. Assumption: These functionalities should be the aforementioned capabilities.

6 -

Ideen

- Kanban

- product review sessions

ChatGPT

Fehlendes Backlog

welche agilen vorgehensmodelle bzw. best practices gibt es in der software-entwicklung, wenn das product backlog noch nicht da ist. es gibt zwar eine produktvision und auch schon einen PoC, aber das Produkt ist sowohl technisch als auch nutzenseitig immer nocht nicht planbar in user stories. daher ist SCRUM nicht machbar. das team ist ca. 8 personen groß, und wir überlgen, einen meilenstein in den nächsten 8 wochen einfach durch tägliches ‘PDCA’ zu erstellen: ein morgendliches planning, dann in subgruppen ein erforschen, dann im Plenum wieder C (Check) zusammenführen/integrieren, und ein abschliessendes ‘A’ als Ergebnissicherung, Versionierierung, Dokumentation. Was hälst du von diesem Vorgehen, bzw. welches Risiken und Verbesserungsvorschläge hast du? Oder gibt es ein Standard-Vorgehen hierfür?

Dein beschriebenes Szenario – Vision und PoC vorhanden, aber kein ausformuliertes Product Backlog – ist typisch für eine frühe Explorations- oder Inceptionsphase in der Produktentwicklung. In dieser Phase ist klassisches Scrum tatsächlich oft zu starr, weil es eine gewisse Klarheit über Features und Stories voraussetzt. Das von euch überlegte Vorgehen klingt nach einer sehr pragmatischen und iterativen Methode, die im Kern gut zu den Lean-Startup-Prinzipien oder Design Thinking + Agile Engineering passt.

Bewertung eures PDCA-basierten Vorgehens

Positiv:

- Täglicher PDCA-Zyklus (Plan-Do-Check-Act) sorgt für schnelle Feedbackschleifen.

- Morgendliches Planning und Check-Meeting im Plenum fördern Transparenz und Selbstorganisation.

- Subgruppen-Erkundung erlaubt parallele Experimente.

- Abschließendes “A” zur Ergebnissicherung ist essenziell, sonst bleibt es bei “busy work”.

Risiken:

- Fehlende Langfriststruktur: Ohne grobe übergreifende Richtung (z. B. Meilensteinplan, Zielbild) kann es leicht in ziellosem Explorieren enden.

- Uneinheitlicher Erkenntnisgewinn: Subgruppen könnten redundant oder inkompatibel arbeiten, wenn kein gemeinsames Verständnis besteht.

- Dokumentation als „Nachsorge“: Wenn die Doku erst am Ende passiert, droht Wissensverlust – lieber „Living Docs“ in Echtzeit pflegen.

- Stakeholder-Abkopplung: Ohne explizite Reviews oder Demo-Rituale kann Feedback von außen fehlen.

Verbesserungsvorschläge

Exploration Backlog oder Hypothesenboard: Auch ohne klassisches Product Backlog könnt ihr mit einem Experiment-/Hypothesenboard (à la Lean Startup) arbeiten. Zum Beispiel:

- Hypothese: „Feature X wird den Use Case Y verbessern.“

- Experiment: „Prototyp bauen und testen mit Nutzergruppe Z.“

- Ergebnis & Learnings dokumentieren.

Wöchentliche Zielsetzungen: Jeden Montag ein Weekly Planning mit klaren Zielen für die Woche (Outcome-fokussiert, nicht Task-fokussiert).

Integration mit Dual Track Agile: Das Vorgehen ähnelt dem Dual-Track-Modell: ein Discovery-Track (Erkunden, Validieren, Prototypisieren) läuft parallel zum späteren Delivery-Track (Releases, Sprints). Ihr seid noch voll im Discovery-Track.

Design Studio oder Spike-Zeitfenster: Strukturiert Zeit für z. B. 2-Tages-Design-Studios oder 1-Tages-Spikes – das erhöht die Qualität der Exploration.

Review/Share-Session freitags: Eine fixe wöchentliche Review-/Demo-Session (auch intern) erhöht die Disziplin und hilft beim „Check“-Teil im PDCA.

Gängige Standard-Methoden in dieser Phase

- Design Thinking (für Nutzerzentrierte Exploration)

- Lean UX / Lean Startup (Hypothesengetrieben)

- Dual Track Agile

- Google Design Sprint (5 Tage) – extrem hilfreich, wenn es um das Testen von Nutzerideen geht

- Shape Up (Basecamp) – für nicht-sprintbasiertes Arbeiten mit “Bet” auf 6-Wochen-Projekte

Fazit:

Euer PDCA-Zyklus ist ein solider, pragmatischer Einstieg in eine unsichere, vorstrukturierte Produktphase. Mit ein paar Ergänzungen (Exploration Backlog, feste Review-Elemente, Hypothesenstruktur) könnt ihr daraus ein effektives Discovery-Vorgehen machen, das den Übergang zu einem klassischen agilen Framework (z. B. Scrum) vorbereitet.

Möchtest du eine konkrete Vorlage für ein Exploration Backlog oder Hypothesenboard für euer Team?

Architektur vorhanden

ok, du findest vor allem Tages-Zyklen zu kurz. Ich möchte noch ergänzen, dass wir eine Architektur haben. Das klingt vielleicht paradox, aber es ist tatsächlich so, dass wir das Software-syewtm bereits designed haben, und die Komponenten auch bereits kennen, aber jetzt vor allem die Wege des automatischen Deployments in eine Cloud und die Konfigurations- und Verwaltungsstrukturen unsrerer Software herausfinden müssen. Das ist da, was sich gerade nicht weiter planen lässt. Kannst du mit diesen Ergänzungen dein Feedbach nochmal schärfen?

Danke für die Ergänzung – das ändert die Ausgangslage entscheidend und erlaubt eine deutlich präzisere Einordnung.

Neue Ausgangslage

- Ihr habt eine Architektur: Die Komponenten und Schnittstellen sind klar.

- Es gibt bereits einen PoC und eine Produktvision.

- Das Backlog fehlt, weil ihr gerade die “nicht-funktionalen” Themen wie Deployment, Cloud-Umgebung, Konfiguration und Betrieb klärt.

- Das System ist technisch grob fertig designt, aber die Operations- und Deployment-Prozesse sind unklar und werden explorativ entwickelt.

Schärferes Feedback

1. Tages-PDCA-Zyklen wirken in diesem Kontext zu granular

Bei Infrastruktur-, Deployment- oder Konfigurationsfragen entstehen oft tiefe „Spikes“ (z. B. CI/CD-Pipeline aufsetzen, Helm-Chart modellieren, Secrets Handling evaluieren). Diese brauchen oft mehr als einen Tag bis zum „Check“, weil Abhängigkeiten (z. B. Rechte, Cloud-Zugänge, Testumgebungen) entstehen. Ein 2- bis 3-Tages-Rhythmus mit klaren Zwischenzielen wäre realistischer, ergänzt um:

- Daily Standup als Taktgeber, aber nicht zwangsläufig als vollständiger PDCA-Zyklus.

- Weekly Planning mit Zielvorgaben und Review-Ritualen, um Fortschritt messbar zu machen.

2. Was ihr macht, ist kein Produkt-Delivery, sondern “System Enablement”

Ihr steckt im Übergang von Architektur zu einem Infrastructure as Code + Plattform Enablement Track. Das bedeutet:

- Die „User Stories“ sind keine klassischen Features, sondern z. B.:

- „Als Team möchte ich unsere Software mit einem Befehl deployen können.“

- „Als Betreiber will ich wissen, wie ich Services konfiguriere.“

Das spricht für ein Infrastructure Kanban Board, keine Sprints.

3. Mögliche Vorgehensmodelle / Best Practices

| Modell / Methode | Warum es jetzt passt |

|---|---|

| Kanban für technische Tasks | Flexible Work-in-Progress-Kontrolle, ideal für Exploration. |

| Spikes (aus XP) | Timeboxed Exploration zu offenen Fragen (z. B. Helm, Secrets). |

| Enabler Epics (SAFe) | Deployment- und Ops-Fragen formal als “Enabler” fassen. |

| Team Topologies – Platform Team Light | Ihr agiert gerade wie ein internes Plattform-Team. |

| Weekly DevOps Review | Alle 5–7 Tage Stand der Automatisierung/Doku/Strukturen. |

| ADRs & Living Docs | Architectural Decision Records für Deployment-/Ops-Entscheidungen. |

4. Konkrete Empfehlungen zur Strukturierung

- Ziele für die 8 Wochen formulieren als “Capabilities”, z. B.:

- „Greenfield-Clouddeployment in AWS/GCP über GitHub Actions.“

- „Parameterisierbares Helm-Chart mit Doku.“

- „Konfigurierbarkeit über ENV vs. Secrets evaluieren.“

- Dazu 1–2 Woche cadence, jede Woche ein Review/Checkpoint.

- Boards mit Task-Kategorien:

- 🧪 Spike

- 🛠️ Tooling/Automation

- 📦 Infrastruktur/Dienst

- 📓 Dokumentation

- Ergebnisse sofort versionieren und dokumentieren, z. B.:

- GitOps Repo-Struktur

- ADRs

- Beispiel-Deployments

Fazit

Ihr seid im “technisch operativen Enablement” eures Systems, nicht im Produkt-Delivery. Das rechtfertigt:

- ein kanban-artiges, flexibles Modell mit timeboxed Reviews,

- tägliche Abstimmungen, aber nicht zwingend tägliche Zyklen,

- Fokus auf Enabler-Ziele statt funktionale Stories.

SCRUM ist hier fehl am Platz, aber euer Ansatz ist sehr nahe an einer modernen DevOps-orientierten Arbeitsweise, die z. B. von Platform Engineering Teams eingesetzt wird.

Möchtest du ein Beispiel für ein solches Kanban-Board mit passenden Task-Typen und Zielstruktur?

Kanban

ja, gerne!

Hier ist ein konkretes Beispiel für ein Kanban-Board, das speziell auf eure Situation als „Platform/Enablement“-Team mit einem vorhandenen Architekturbild, aber offenem Deployment- und Betriebsdesign zugeschnitten ist.

🎯 Zielstruktur (übergeordnet für 8 Wochen)

Diese formuliert ihr am besten als Capabilities – Fähigkeiten, die das System nachher haben soll:

| Capability ID | Zielbeschreibung |

|---|---|

| C1 | Anwendung kann automatisiert in Cloudumgebung X deployed werden (inkl. Secrets Mgmt) |

| C2 | Konfiguration des Systems ist externisiert und dokumentiert (ENV, YAML, o. ä.) |

| C3 | Monitoring- und Logging-Infrastruktur ist einsatzbereit und dokumentiert |

| C4 | Dev- und Test-Umgebungen sind klar getrennt und automatisch provisionierbar |

| C5 | Alle Plattformentscheidungen (z. B. Helm vs. Kustomize) sind versioniert und begründet |

Diese Capabilities bilden Spalten oder Swimlanes im Board (wenn euer Tool das unterstützt, z. B. GitHub Projects, Jira oder Trello mit Labels).

🗂️ Kanban-Board-Spalten (klassisch)

| Spalte | Zweck |

|---|---|

| 🔍 Backlog | Ideen, Hypothesen, Tasks – priorisiert nach Capabilities |

| 🧪 In Exploration | Aktive Spikes, Proofs, technische Evaluierungen |

| 🛠️ In Progress | Umsetzung mit konkretem Ziel |

| ✅ Review / Check | Funktionsprüfung, internes Review |

| 📦 Done | Abgeschlossen, dokumentiert, ggf. in Repo |

🏷️ Task-Typen (Labels oder Emojis zur Kennzeichnung)

| Symbol / Label | Typ | Beispiel |

|---|---|---|

| 🧪 Spike | Technische Untersuchung | „Untersuche ArgoCD vs. Flux für GitOps Deployment“ |

| 📦 Infra | Infrastruktur | „Provisioniere dev/test/stage in GCP mit Terraform“ |

| 🔐 Secrets | Sicherheitsrelevante Aufgabe | „Design für Secret-Handling mit Sealed Secrets“ |

| 📓 Docs | Dokumentation | „README für Developer Setup schreiben“ |

| 🧰 Tooling | CI/CD, Pipelines, Linter | „GitHub Action für Build & Deploy schreiben“ |

| 🔁 Entscheidung | Architekturentscheidung | „ADR: Helm vs. Kustomize für Service Deployment“ |

🧩 Beispielhafte Tasks für Capability „C1 – Deployment automatisieren“

| Task | Typ | Status |

|---|---|---|

| Write GitHub Action for Docker image push | 🧰 Tooling | 🛠️ In Progress |