This section is the project documentation for IPCEI-CIS Developer Framework.

This is the multi-page printable view of this section. Click here to print.

Developer Framework Documentation

- 1: Architecture

- 2: Documentation (v1 - Legacy)

- 2.1: Concepts

- 2.1.1: Code: Software and Workloads

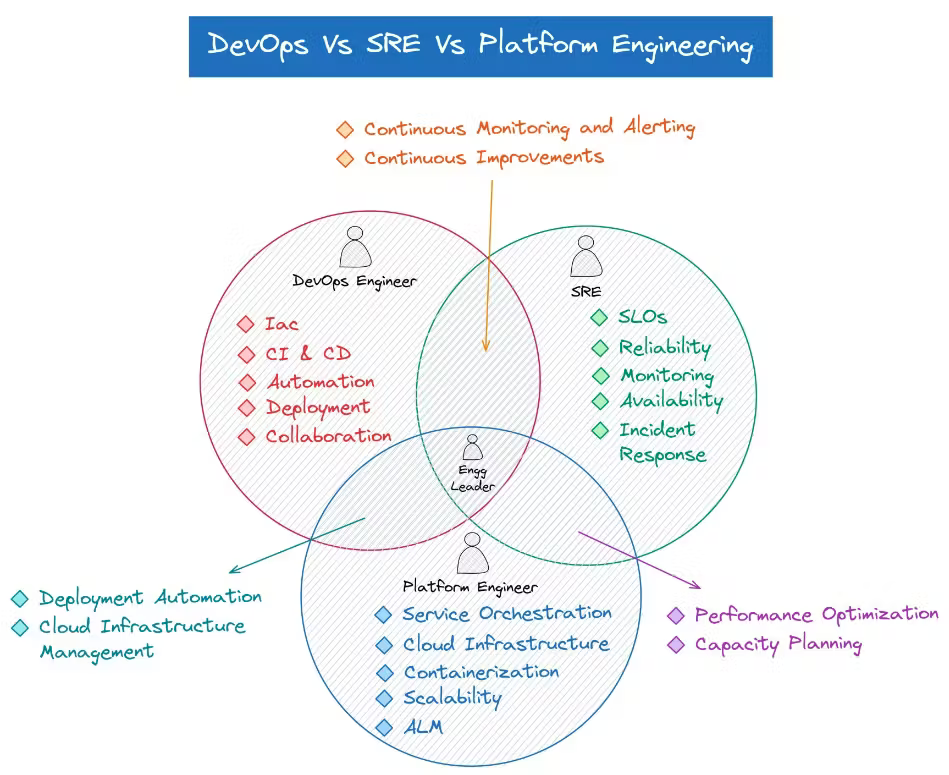

- 2.1.2: Engineers

- 2.1.3: Use Cases

- 2.1.4: (Digital) Platforms

- 2.1.4.1: Platform Engineering

- 2.1.4.1.1: Reference Architecture

- 2.1.4.2: Platform Components

- 2.1.4.2.1: CI/CD Pipeline

- 2.1.4.2.2: Developer Portals

- 2.1.4.2.3: Platform Orchestrator

- 2.1.4.2.4: List of references

- 2.1.5: Platform Orchestrators

- 2.2: Solution

- 2.2.1: Design

- 2.2.1.1: Agnostic EDF Deployment

- 2.2.1.2: Agnostic Stack Definition

- 2.2.1.3: eDF is self-contained and has an own IAM (WiP)

- 2.2.1.4:

- 2.2.1.5:

- 2.2.1.6:

- 2.2.1.7:

- 2.2.2: Scenarios

- 2.2.2.1: Gitops

- 2.2.2.2: Orchestration

- 2.2.3: Tools

- 2.2.3.1: Backstage

- 2.2.3.1.1: Backstage Description

- 2.2.3.1.2: Backstage Local Setup Tutorial

- 2.2.3.1.3: Existing Backstage Plugins

- 2.2.3.1.4: Plugin Creation Tutorial

- 2.2.3.2: CNOE

- 2.2.3.2.1: Analysis of CNOE competitors

- 2.2.3.2.2: Included Backstage Templates

- 2.2.3.2.2.1: Template for basic Argo Workflow

- 2.2.3.2.2.2: Template for basic kubernetes deployment

- 2.2.3.2.3: idpbuilder

- 2.2.3.2.3.1: Installation of idpbuilder

- 2.2.3.2.3.2: Http Routing

- 2.2.3.2.4: ArgoCD

- 2.2.3.2.5: Validation and Verification

- 2.2.3.3: Crossplane

- 2.2.3.3.1: Howto develop a crossplane kind provider

- 2.2.3.4: Kube-prometheus-stack

- 2.2.3.5: Kyverno

- 2.2.3.6: Loki

- 2.2.3.7: Promtail

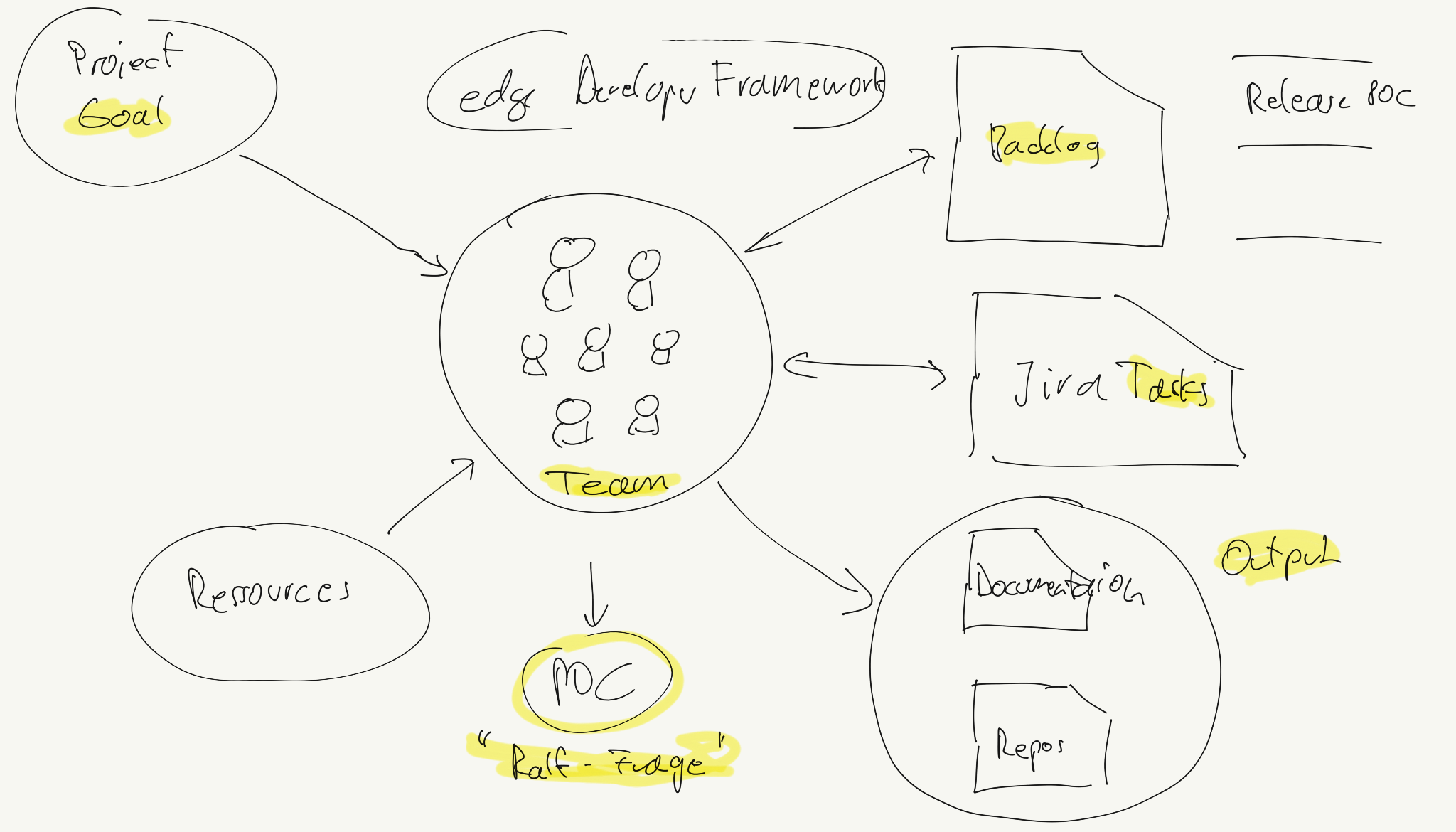

- 2.3: Project

- 2.3.1: Platform 101: Conceptual Onboarding

- 2.3.1.1: Introduction

- 2.3.1.2: Edge Developer Framework

- 2.3.1.3: Platform Engineering aka Platforming

- 2.3.1.4: Orchestrators

- 2.3.1.5: CNOE

- 2.3.1.6: CNOE Showtime

- 2.3.1.7: Conclusio

- 2.3.1.8:

- 2.3.2: Bootstrapping Infrastructure

- 2.3.2.1: Backup of the Bootstrapping Cluster

- 2.3.3: Plan in 2024

- 2.3.3.1: Workstreams

- 2.3.3.1.1: Fundamentals

- 2.3.3.1.1.1: Activity 'Platform Definition'

- 2.3.3.1.1.2: Activity 'CI/CD Definition'

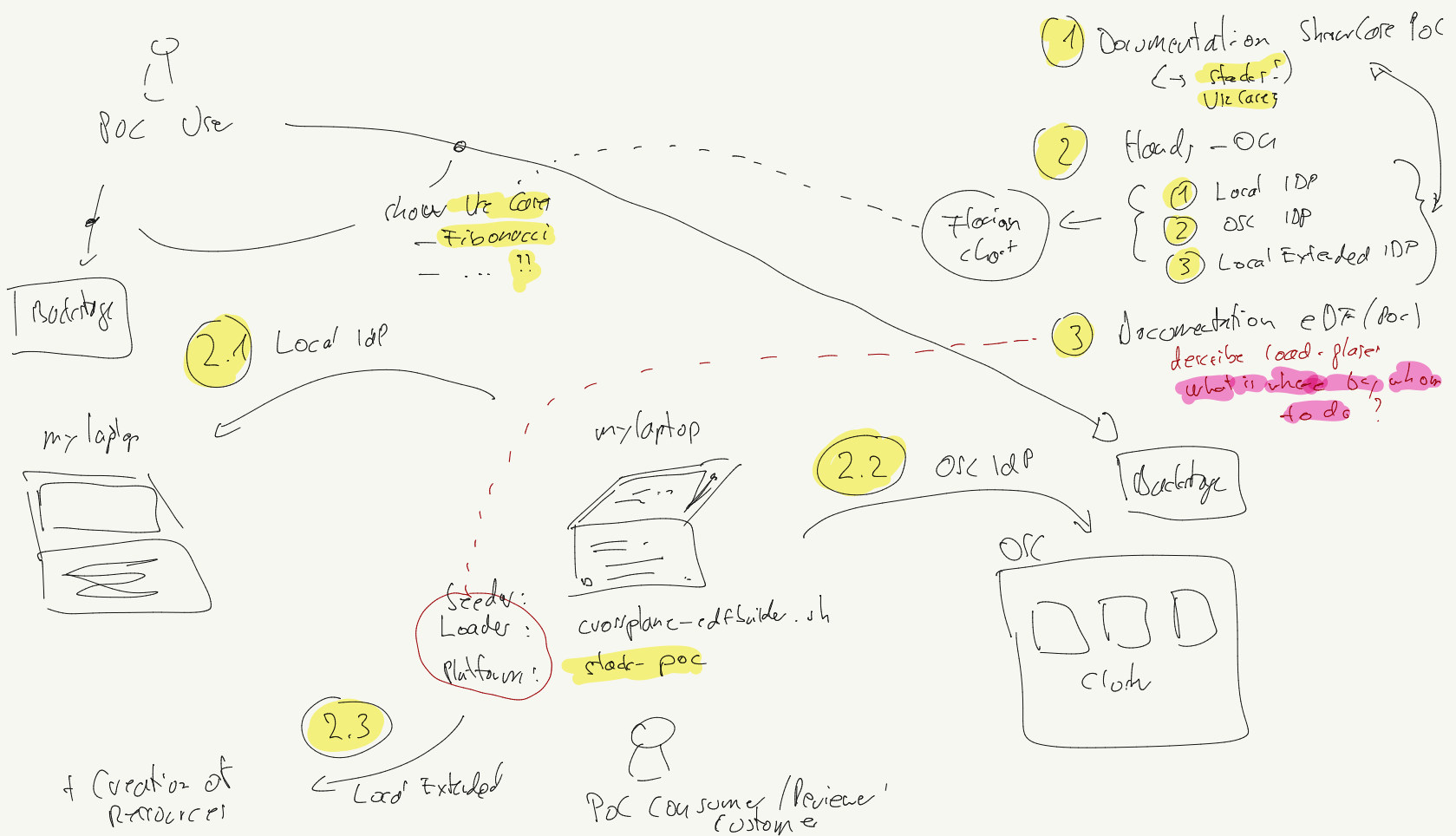

- 2.3.3.1.2: POCs

- 2.3.3.1.2.1: Activity 'CNOE Investigation'

- 2.3.3.1.2.2: Activity 'SIA Asset Golden Path Development'

- 2.3.3.1.2.3: Activity 'Kratix Investigation'

- 2.3.3.1.3: Deployment

- 2.3.3.1.3.1: Activity 'Forgejo'

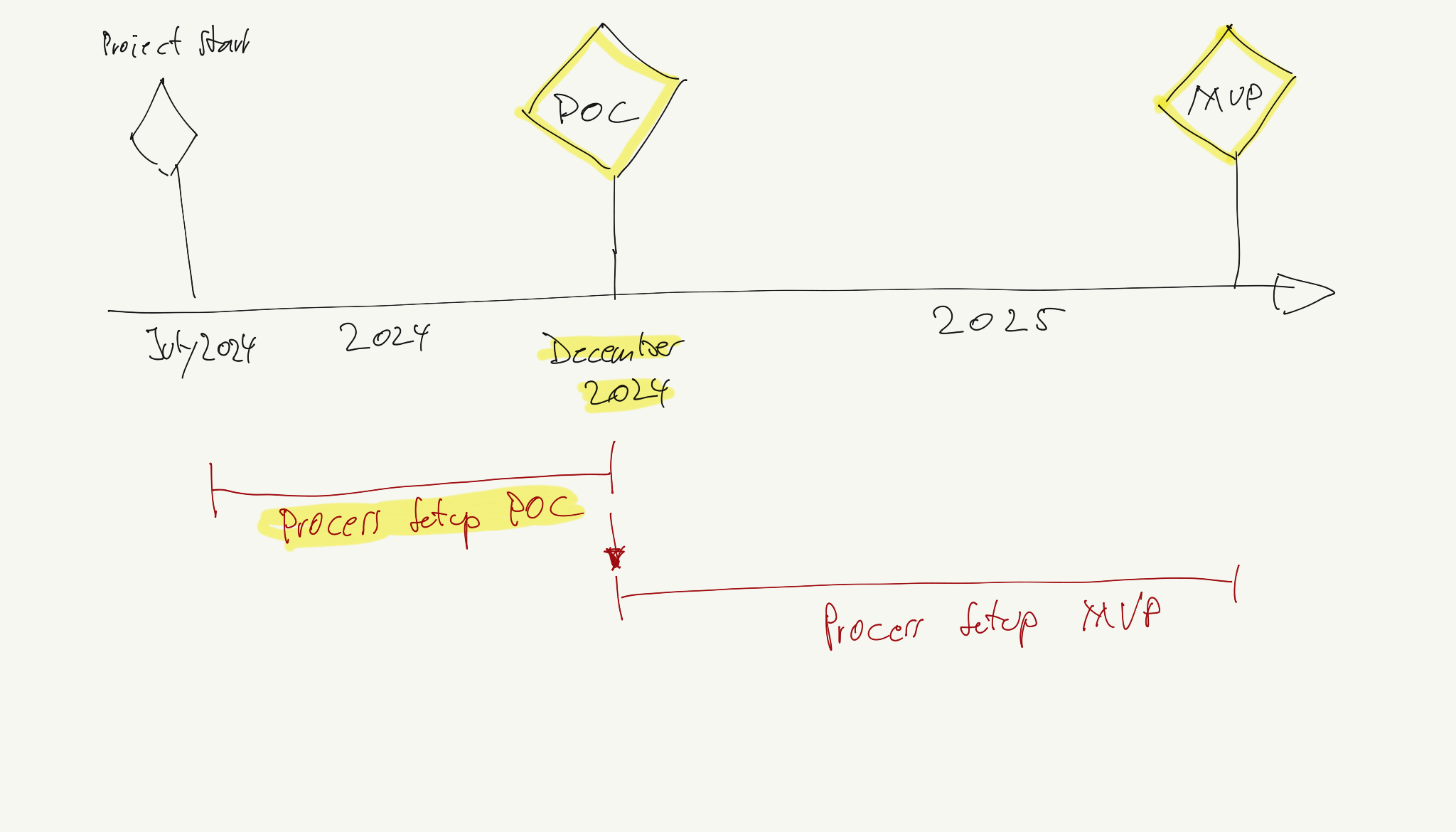

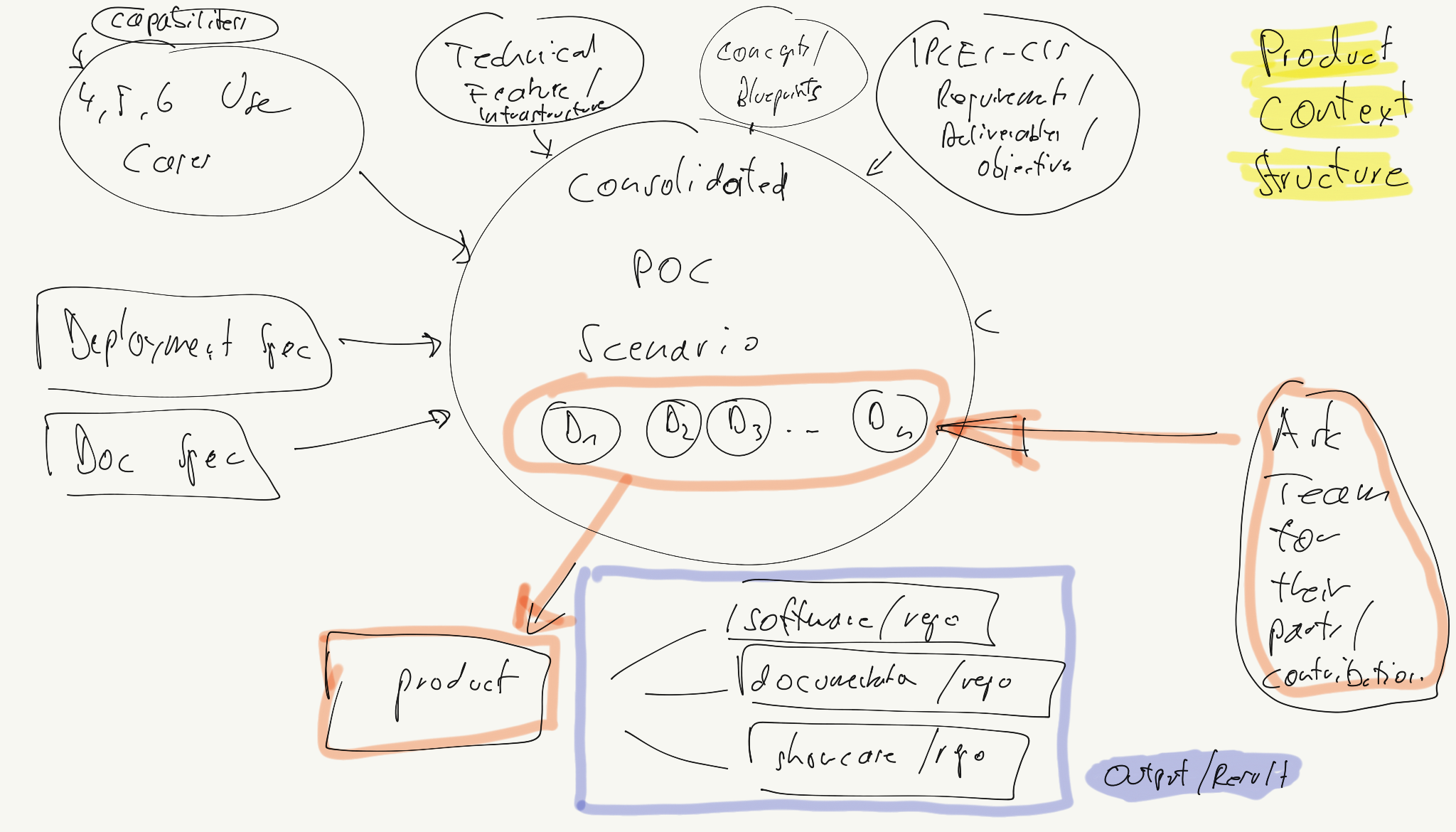

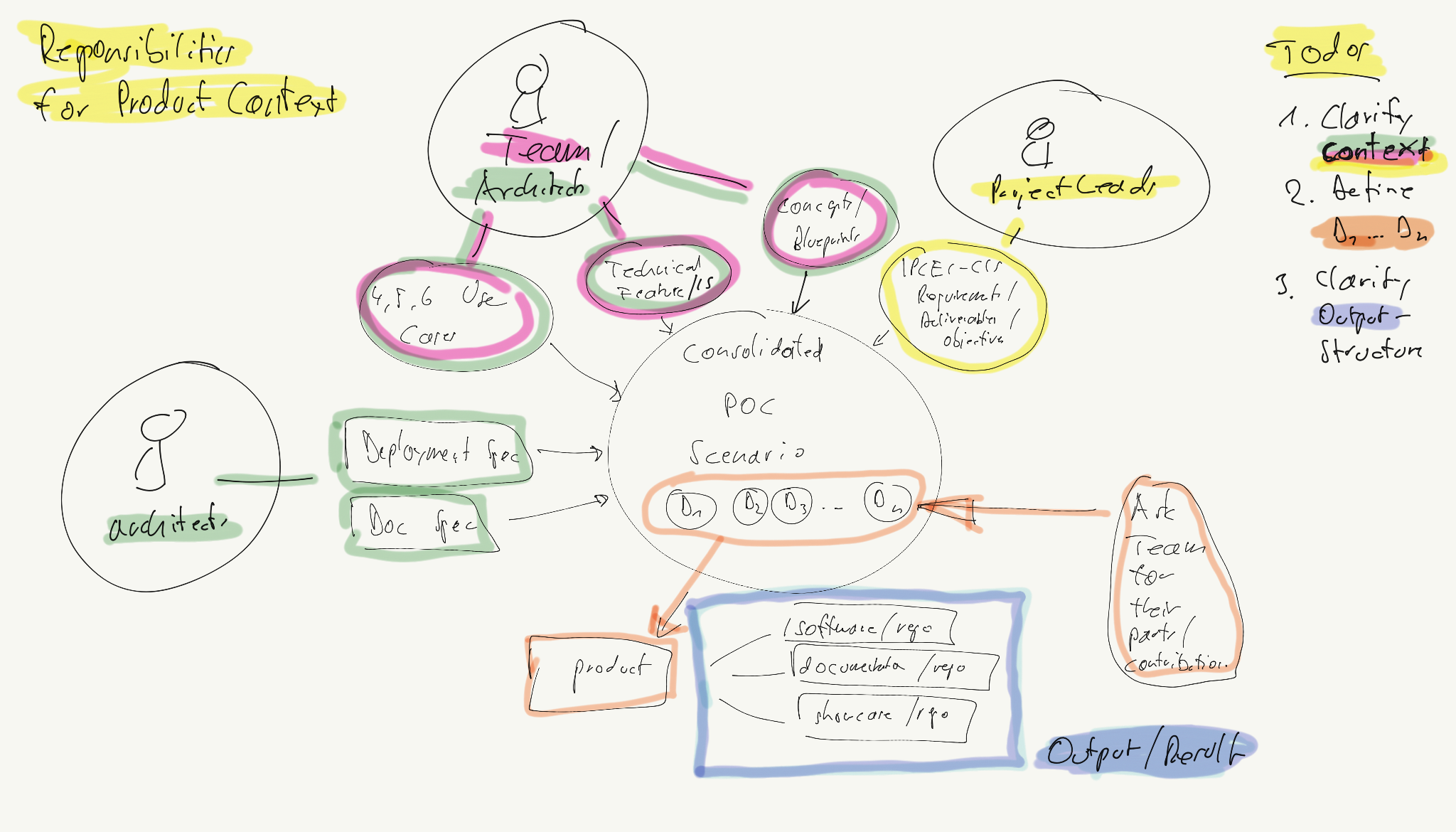

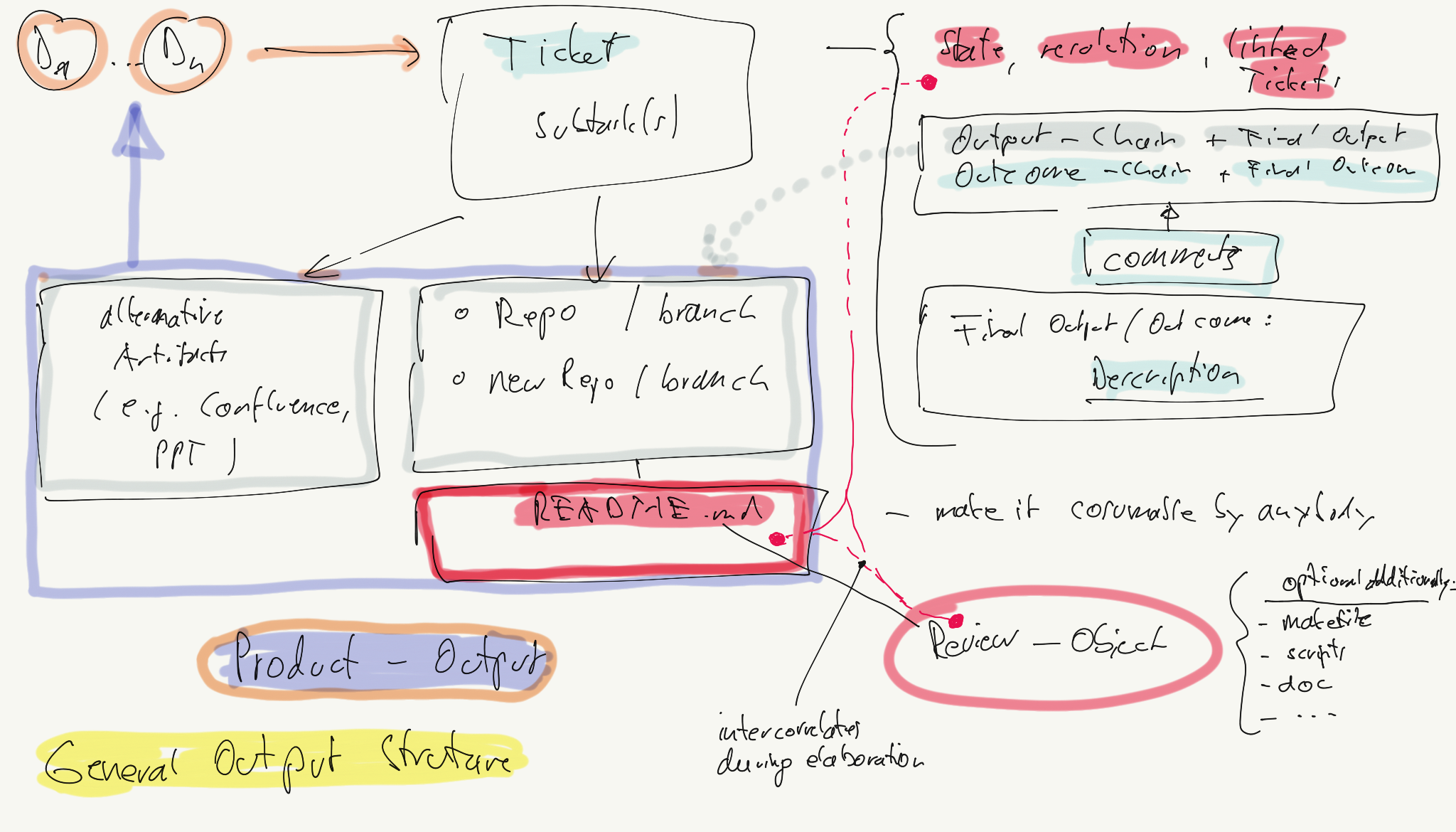

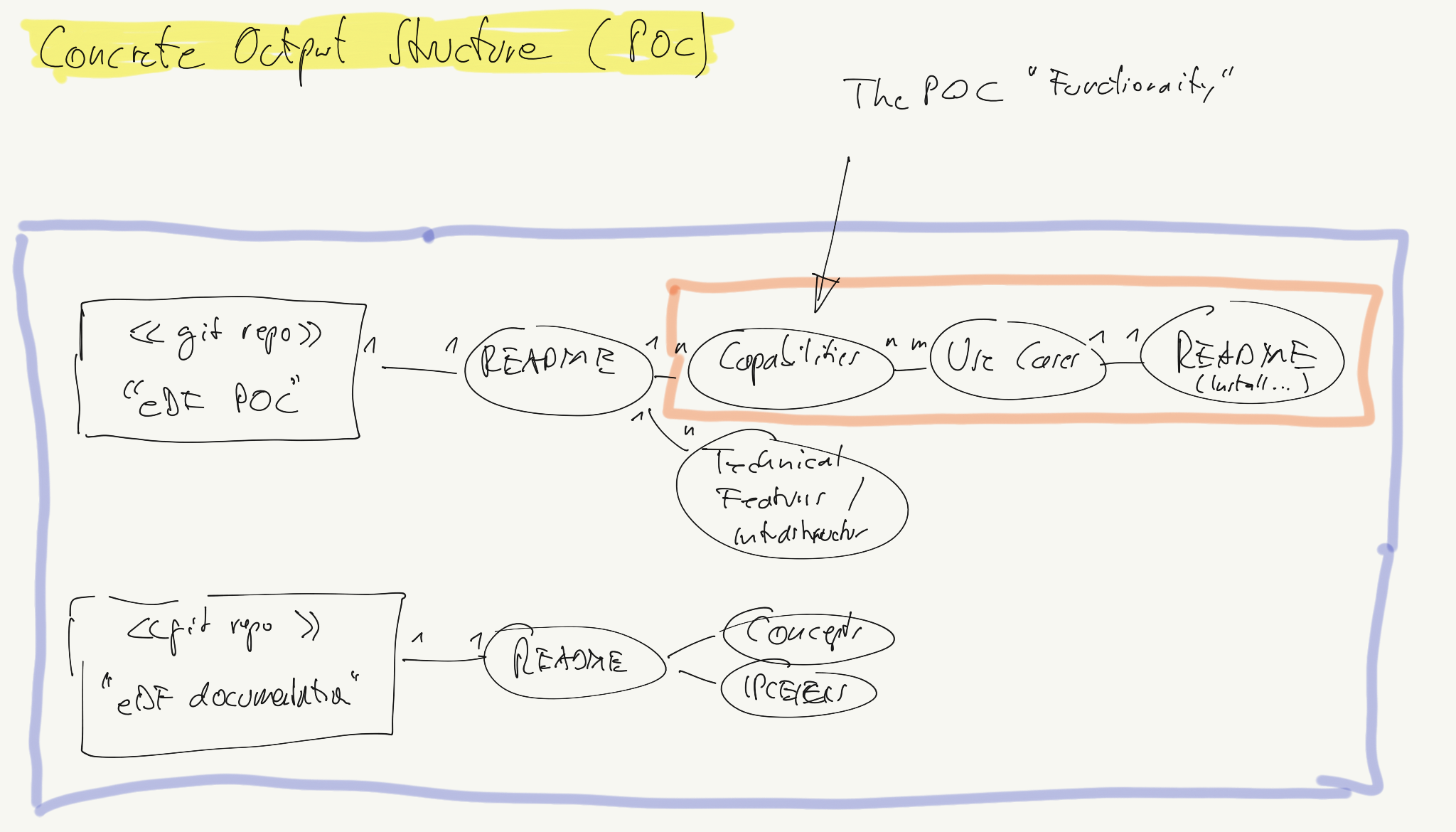

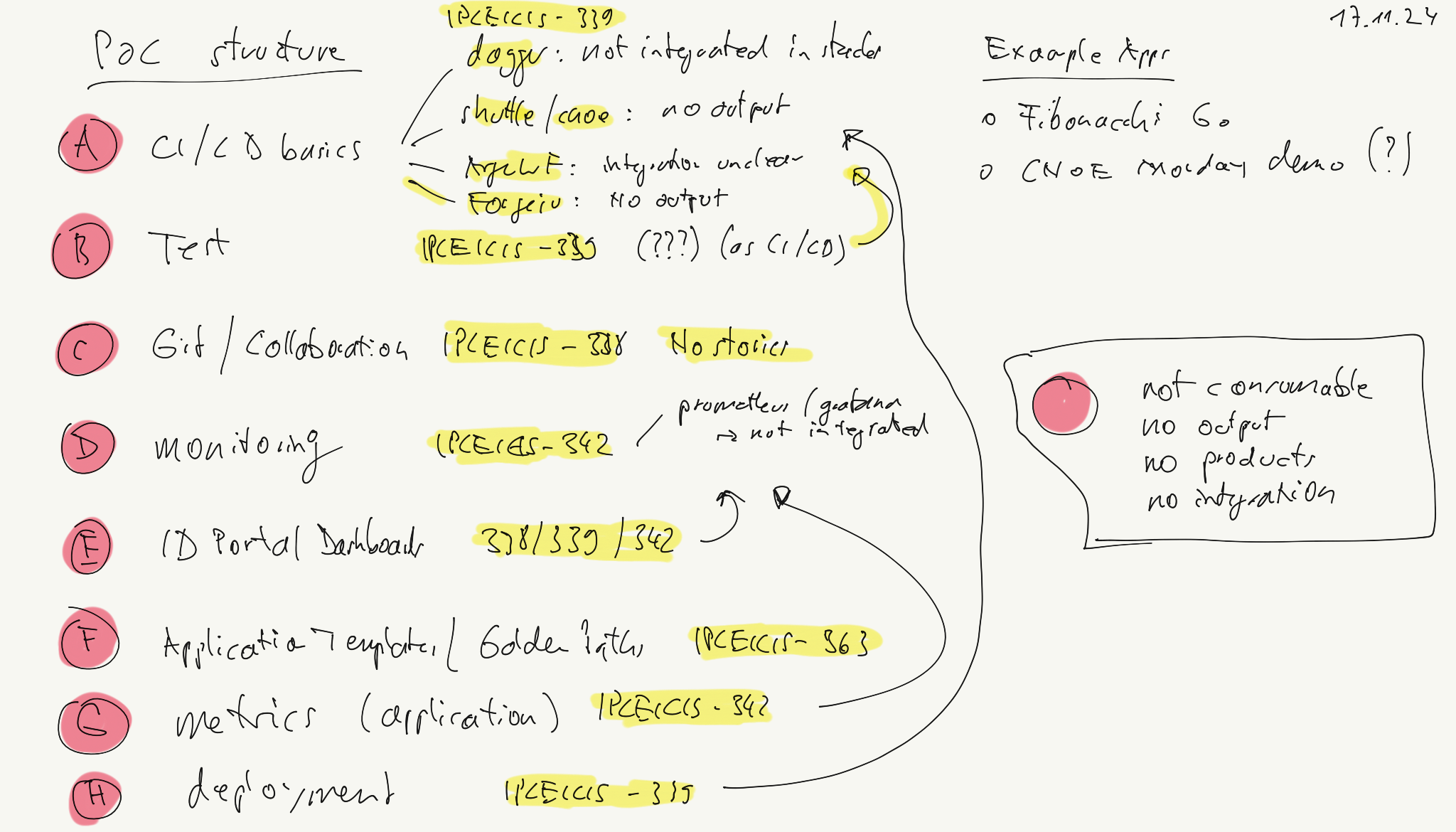

- 2.3.3.2: PoC Structure

- 2.3.4: Stakeholder Workshop Intro

- 2.3.5: Team and Work Structure

- 2.3.6:

- 3:

- 4:

- 5:

1 - Architecture

This section contains architecture documentation for the IPCEI-CIS Developer Framework, including interactive C4 architecture diagrams.

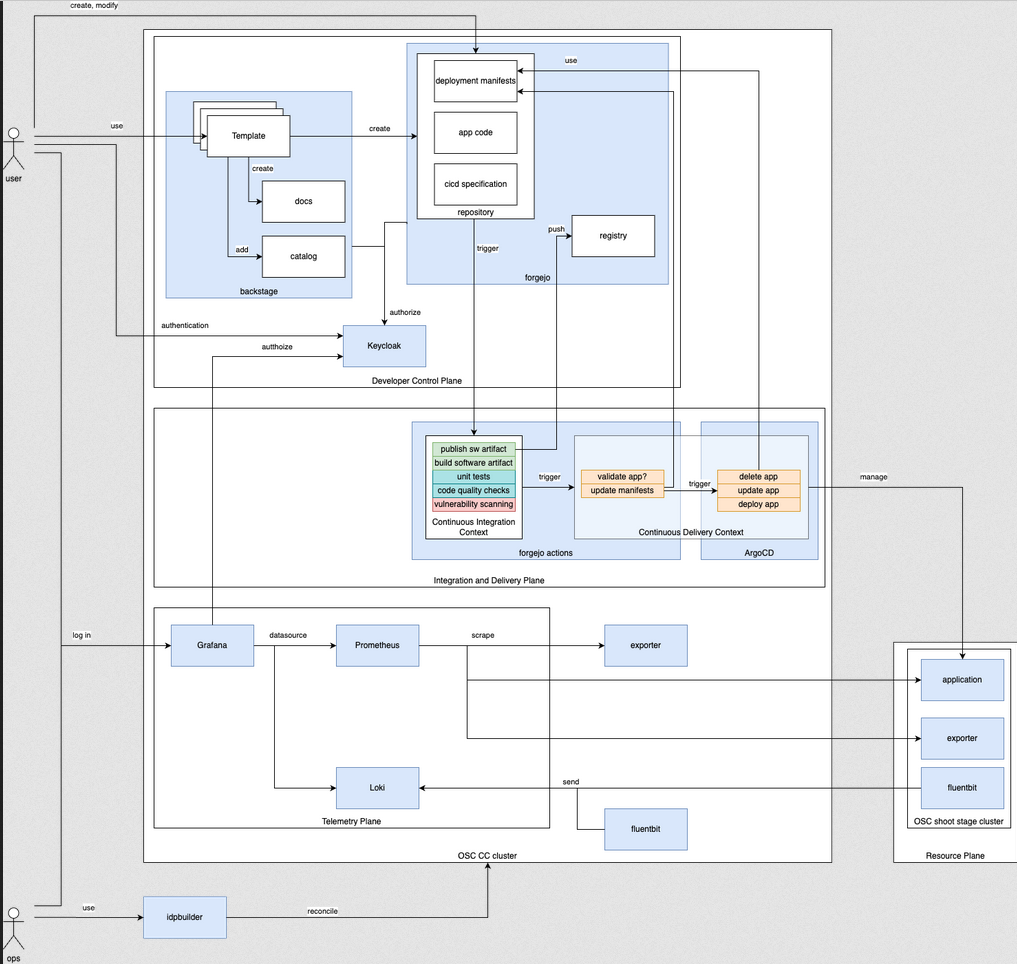

1.1 - High Level Architecture

This document describes the high-level architecture of our Enterprise Development Platform (EDP) system.

Interactive Architecture Diagram

Interactive Diagram

The diagram above is interactive when viewed in a compatible browser. You can click on components to explore the architecture details.

Note: The interactive diagram requires the LikeC4 webcomponent to be generated. See the setup instructions for details.

Architecture Overview

The Enterprise Development Platform consists of several key components working together to provide a comprehensive development and deployment environment.

Key Components

- OTC Foundry - Central management and orchestration layer

- Per-Tenant EDP - Isolated development environments for each tenant

- FaaS Environment - Function-as-a-Service deployment targets on Open Telekom Cloud

- Cloud Services - Managed services including databases, storage, and monitoring

Deployment Environments

- Development Environment (

*.t09.de) - For platform team development and testing - Production Environment (

*.buildth.ing) - For production workloads and tenant services

Component Details

The interactive diagram above shows the relationships between different components and how they interact within the system architecture. You can explore the diagram by clicking on different elements to see more details.

Infrastructure Components

- Kubernetes Clusters - Container orchestration using OTC CCE (Cloud Container Engine)

- ArgoCD - GitOps continuous deployment and application lifecycle management

- Forgejo - Git repository management and CI/CD pipelines

- Observability Stack - Monitoring (Prometheus, Grafana), logging (Loki), and alerting

Security and Management

- Keycloak - Identity and access management (IAM)

- OpenBao - Secrets management (Hashicorp Vault fork)

- External Secrets Operator - Kubernetes secrets integration

- Crossplane - Infrastructure as Code and cloud resource provisioning

Developer Experience

- Backstage - Internal developer portal and service catalog

- Forgejo Actions - CI/CD pipeline execution

- Development Workflows - GitOps-based inner and outer loop workflows

Setup and Maintenance

To update or modify the architecture diagrams:

Edit the

.c4files inresources/likec4/Regenerate the webcomponent:

cd resources/likec4 npx likec4 codegen webcomponent \ --webcomponent-prefix likec4 \ --outfile ../../static/js/likec4-webcomponent.jsCommit both the model changes and the regenerated JavaScript file

For more information, see the LikeC4 Integration Guide.

1.2 - LikeC4 Setup Guide

This guide explains how to set up and use LikeC4 interactive architecture diagrams in this documentation.

Overview

LikeC4 enables you to create interactive C4 architecture diagrams as code. The diagrams are defined in .c4 files and compiled into a web component that can be embedded in any HTML page.

Prerequisites

- Node.js (v18 or later)

- npm or yarn

Initial Setup

1. Install Dependencies

Navigate to the LikeC4 directory and install dependencies:

cd resources/likec4

npm install

2. Generate the Web Component

Create the web component that Hugo will load:

npx likec4 codegen webcomponent \

--webcomponent-prefix likec4 \

--outfile ../../static/js/likec4-webcomponent.js

This command:

- Reads all

.c4files frommodels/andviews/ - Generates a single JavaScript file with all architecture views

- Outputs to

static/js/likec4-webcomponent.js

3. Verify Integration

The integration should already be configured in:

hugo.toml- Containsparams.likec4.enable = truelayouts/partials/hooks/head-end.html- Loads CSS and loader scriptstatic/css/likec4-styles.css- Diagram stylingstatic/js/likec4-loader.js- Dynamic module loader

Directory Structure

resources/likec4/

├── models/ # C4 model definitions

│ ├── components/ # Component models

│ ├── containers/ # Container models

│ ├── context/ # System context

│ └── code/ # Code-level workflows

├── views/ # View definitions

│ ├── deployment/ # Deployment views

│ ├── edp/ # EDP views

│ ├── high-level-concept/ # Conceptual views

│ └── dynamic/ # Process flows

├── package.json # Dependencies

└── INTEGRATION.md # Integration docs

Using in Documentation

Basic Usage

Add this to any Markdown file:

<div class="likec4-container">

<div class="likec4-header">

Your Diagram Title

</div>

<likec4-view view-id="YOUR-VIEW-ID" browser="true"></likec4-view>

<div class="likec4-loading" id="likec4-loading">

Loading architecture diagram...

</div>

</div>

Available View IDs

To find available view IDs, search the .c4 files:

cd resources/likec4

grep -r "view\s\+\w" views/ models/ --include="*.c4"

Common views:

otc-faas- OTC FaaS deploymentedp- EDP overviewlandscape- Developer landscapeedpbuilderworkflow- Builder workflowkeycloak- Keycloak component

With Hugo Alert

Combine with Docsy alerts for better UX:

<div class="likec4-container">

<div class="likec4-header">

System Architecture

</div>

<likec4-view view-id="otc-faas" browser="true"></likec4-view>

<div class="likec4-loading" id="likec4-loading">

Loading...

</div>

</div>

{{< alert title="Note" >}}

Click on components in the diagram to explore the architecture.

{{< /alert >}}

Workflow for Changes

1. Modify Architecture Models

Edit the .c4 files in resources/likec4/:

# Edit a model

vi resources/likec4/models/containers/argocd.c4

# Or edit a view

vi resources/likec4/views/deployment/otc/otc-faas.c4

2. Preview Changes Locally

Use the LikeC4 CLI to preview:

cd resources/likec4

# Start preview server

npx likec4 start

# Opens browser at http://localhost:5173

3. Regenerate Web Component

After making changes:

cd resources/likec4

npx likec4 codegen webcomponent \

--webcomponent-prefix likec4 \

--outfile ../../static/js/likec4-webcomponent.js

4. Test in Hugo

Start the Hugo development server:

# From repository root

hugo server -D

# Open http://localhost:1313

5. Commit Changes

Commit both the model files and the regenerated web component:

git add resources/likec4/

git add static/js/likec4-webcomponent.js

git commit -m "feat: update architecture diagrams"

Advanced Configuration

Custom Styling

Modify static/css/likec4-styles.css to customize appearance:

.likec4-container {

height: 800px; /* Adjust height */

border-radius: 8px; /* Rounder corners */

}

Multiple Diagrams Per Page

You can include multiple diagrams on a single page:

<!-- First diagram -->

<div class="likec4-container">

<div class="likec4-header">Deployment View</div>

<likec4-view view-id="otc-faas" browser="true"></likec4-view>

<div class="likec4-loading">Loading...</div>

</div>

<!-- Second diagram -->

<div class="likec4-container">

<div class="likec4-header">Component View</div>

<likec4-view view-id="edp" browser="true"></likec4-view>

<div class="likec4-loading">Loading...</div>

</div>

Disable for Specific Pages

Add to page front matter:

---

title: "My Page"

params:

disable_likec4: true

---

Then update layouts/partials/hooks/head-end.html:

{{ if and .Site.Params.likec4.enable (not .Params.disable_likec4) }}

<!-- LikeC4 scripts -->

{{ end }}

Troubleshooting

Diagram Not Loading

- Check browser console (F12 → Console)

- Verify webcomponent exists:

ls -lh static/js/likec4-webcomponent.js - Regenerate if missing:

cd resources/likec4 npm install npx likec4 codegen webcomponent \ --webcomponent-prefix likec4 \ --outfile ../../static/js/likec4-webcomponent.js

View Not Found

- Check view ID matches exactly (case-sensitive)

- Search for the view in

.c4files:grep -r "view otc-faas" resources/likec4/

Styling Issues

- Clear browser cache (Ctrl+Shift+R)

- Check

static/css/likec4-styles.cssis loaded in browser DevTools → Network

Build Errors

If LikeC4 codegen fails:

cd resources/likec4

rm -rf node_modules package-lock.json

npm install

Resources

Migration Notes

This LikeC4 integration was migrated from the edp-doc repository. This repository (ipceicis-developerframework) is now the primary source for architecture models.

The edp-doc repository can reference these models via git submodule if needed.

2 - Documentation (v1 - Legacy)

Note

This is the legacy documentation (v1). For the latest version, please visit the current documentation.This section contains the original documentation that is being migrated to a new structure.

2.1 - Concepts

2.1.1 - Code: Software and Workloads

2.1.2 - Engineers

2.1.3 - Use Cases

Rationale

The challenge of IPCEI-CIS Developer Framework is to provide value for DTAG customers, and more specifically: for Developers of DTAG customers.

That’s why we need verifications - or test use cases - for the Developer Framework to develop.

(source: https://tag-app-delivery.cncf.io/whitepapers/platforms/)

(source: https://tag-app-delivery.cncf.io/whitepapers/platforms/)

Golden Paths as Use Cases

- https://platformengineering.org/blog/how-to-pave-golden-paths-that-actually-go-somewhere

- https://thenewstack.io/using-an-internal-developer-portal-for-golden-paths/

- https://nl.devoteam.com/expert-view/building-golden-paths-with-internal-developer-platforms/

- https://www.redhat.com/en/blog/designing-golden-paths

List of Use Cases

Here we have a collection of possible usage scenarios.

Socksshop

Deploy and develop the famous socks shops:

See also mkdev fork: https://github.com/mkdev-me/microservices-demo

Humanitec Demos

Github Examples

Telemetry Use Case with respect to the Fibonacci workload

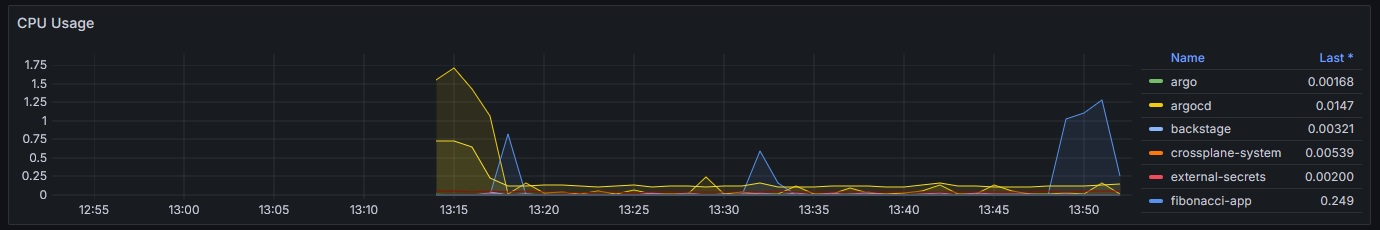

The Fibonacci App on the cluster can be accessed on the path https://cnoe.localtest.me/fibonacci. It can be called for example by using the URL https://cnoe.localtest.me/fibonacci?number=5000000.

The resulting ressource spike can be observed one the Grafana dashboard “Kubernetes / Compute Resources / Cluster”. The resulting visualization should look similar like this:

When and how to use the developer framework?

e.g. an example

…. taken from https://cloud.google.com/blog/products/application-development/common-myths-about-platform-engineering?hl=en

2.1.4 - (Digital) Platforms

Surveys

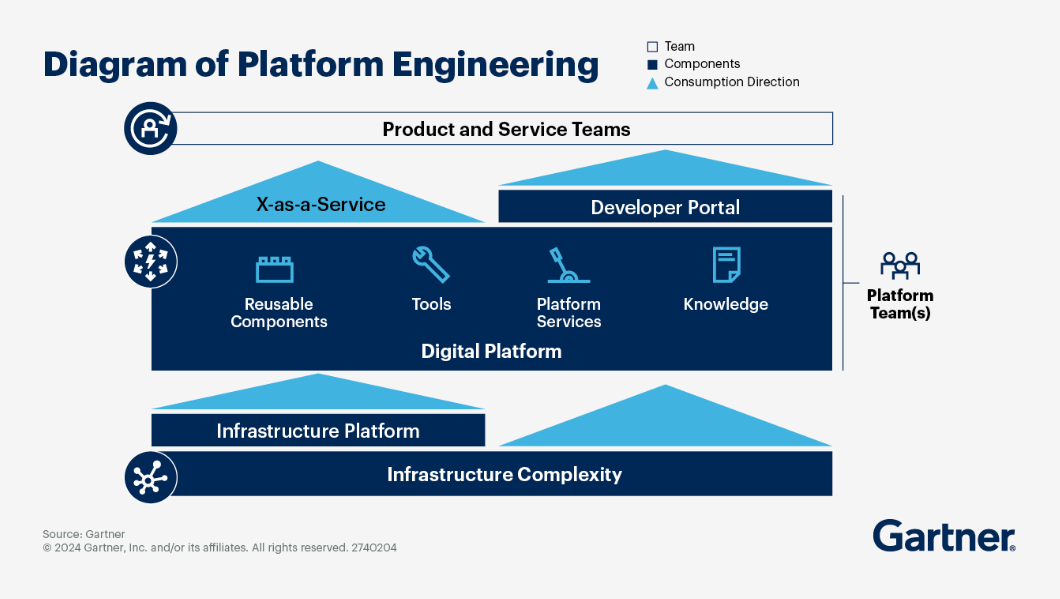

2.1.4.1 - Platform Engineering

Rationale

IPCEI-CIS Developer Framework is part of a cloud native technology stack. To design the capabilities and architecture of the Developer Framework we need to define the surounding context and internal building blocks, both aligned with cutting edge cloud native methodologies and research results.

In CNCF the discipline of building stacks to enhance the developer experience is called ‘Platform Engineering’

CNCF Platforms White Paper

CNCF first asks why we need platform engineering:

The desire to refocus delivery teams on their core focus and reduce duplication of effort across the organisation has motivated enterprises to implement platforms for cloud-native computing. By investing in platforms, enterprises can:

- Reduce the cognitive load on product teams and thereby accelerate product development and delivery

- Improve reliability and resiliency of products relying on platform capabilities by dedicating experts to configure and manage them

- Accelerate product development and delivery by reusing and sharing platform tools and knowledge across many teams in an enterprise

- Reduce risk of security, regulatory and functional issues in products and services by governing platform capabilities and the users, tools and processes surrounding them

- Enable cost-effective and productive use of services from public clouds and other managed offerings by enabling delegation of implementations to those providers while maintaining control over user experience

platformengineering.org’s Definition of Platform Engineering

Platform engineering is the discipline of designing and building toolchains and workflows that enable self-service capabilities for software engineering organizations in the cloud-native era. Platform engineers provide an integrated product most often referred to as an “Internal Developer Platform” covering the operational necessities of the entire lifecycle of an application.

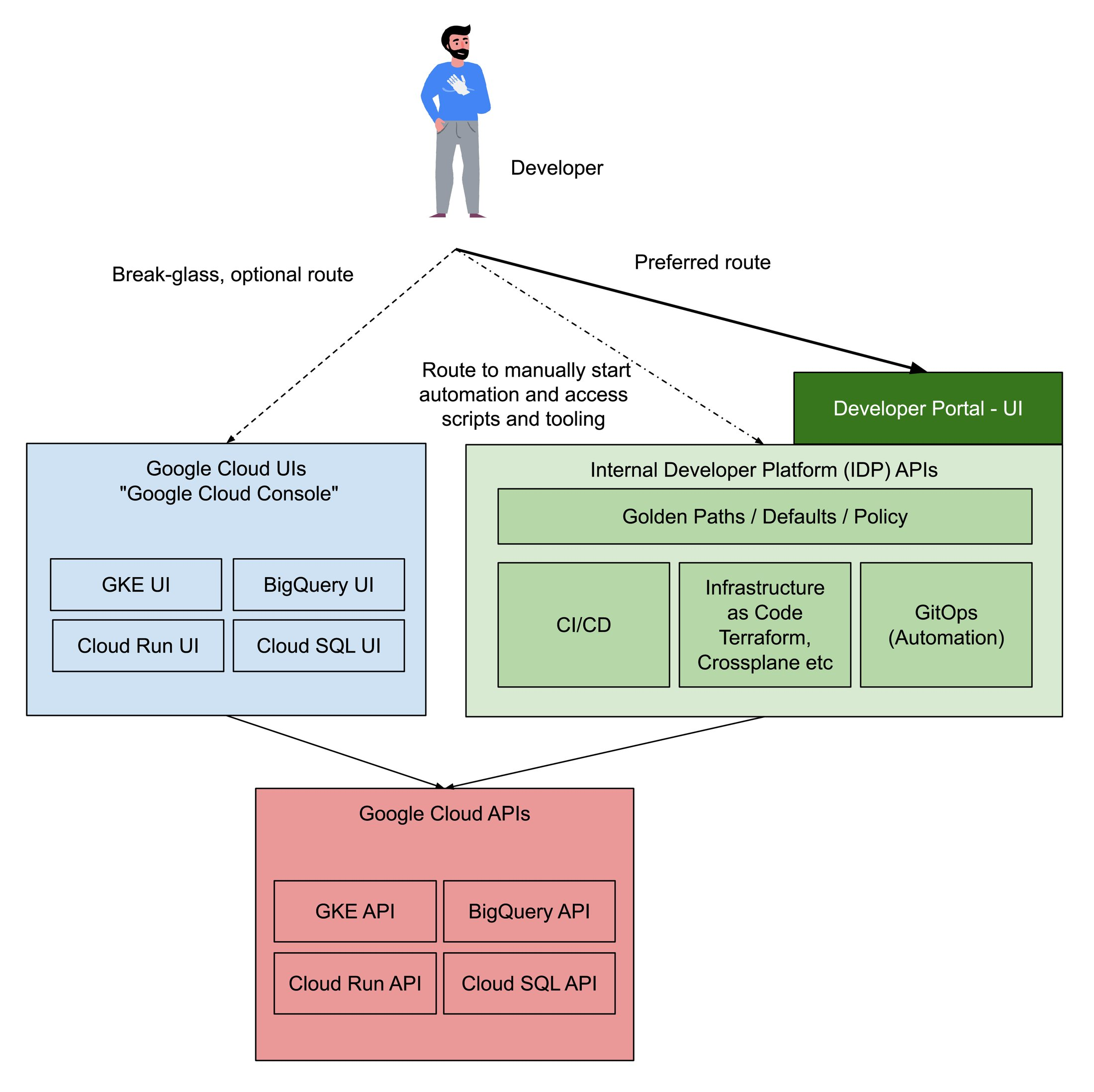

Reference Architecture aka ‘Even more wording’: Internal Developer Platform vs. Developer Portal vs. Platform

https://humanitec.com/blog/wtf-internal-developer-platform-vs-internal-developer-portal-vs-paas

Platform Engineering as running a restaurant

Internal Developer Platform

In IPCEI-CIS right now (July 2024) we are primarily interested in understanding how IDPs are built as one option to implement an IDP is to build it ourselves.

The outcome of the Platform Engineering discipline is - created by the platform engineering team - a so called ‘Internal Developer Platform’.

One of the first sites focusing on this discipline was internaldeveloperplatform.org

Examples of existing IDPs

The amount of available IDPs as product is rapidly growing.

[TODO] LIST OF IDPs

- internaldeveloperplatform.org - ‘Ecosystem’

- Typical market overview: https://medium.com/@rphilogene/10-best-internal-developer-portals-to-consider-in-2023-c780fbf8ab12

- Another one: https://www.qovery.com/blog/10-best-internal-developer-platforms-to-consider-in-2023/

- Just found as another example: platformplane

Additional links

- how-to-fail-at-platform-engineering

- 8-real-world-reasons-to-adopt-platform-engineering

- 7-core-elements-of-an-internal-developer-platform

- internal-developer-platform-vs-internal-developer-portal

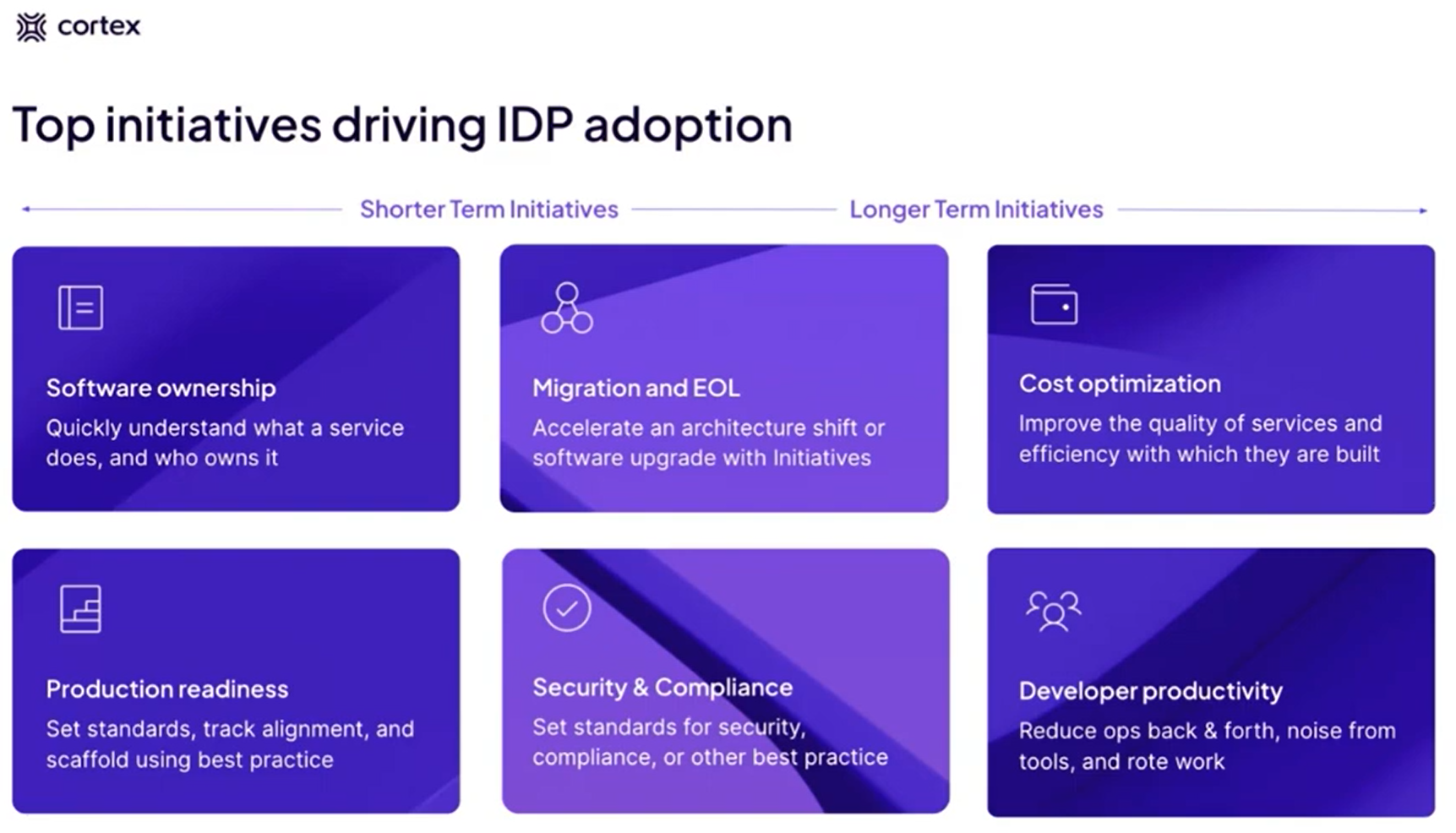

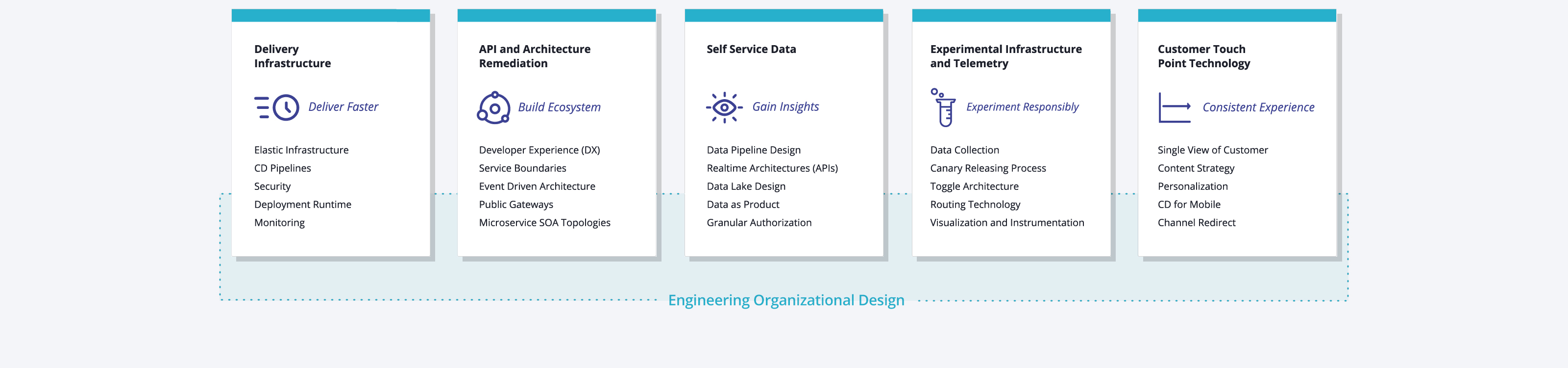

Platform ‘Initiatives’ aka Use Cases

Cortex is talking about Use Cases (aka Initiatives): (or https://www.brighttalk.com/webcast/20257/601901)

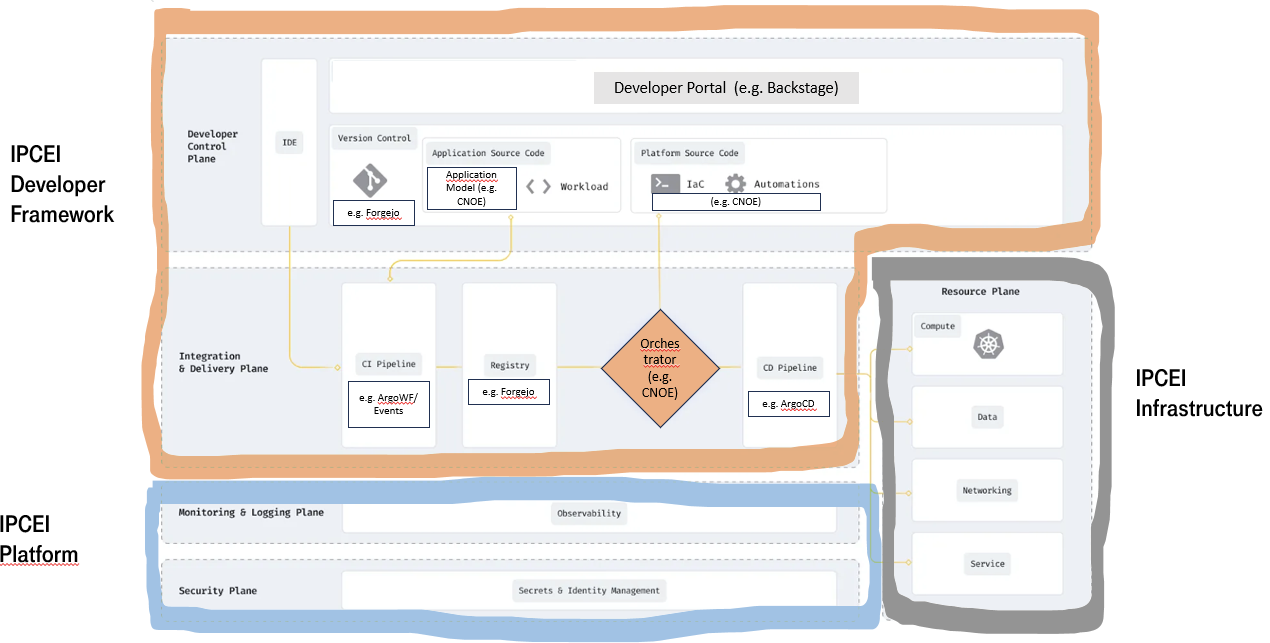

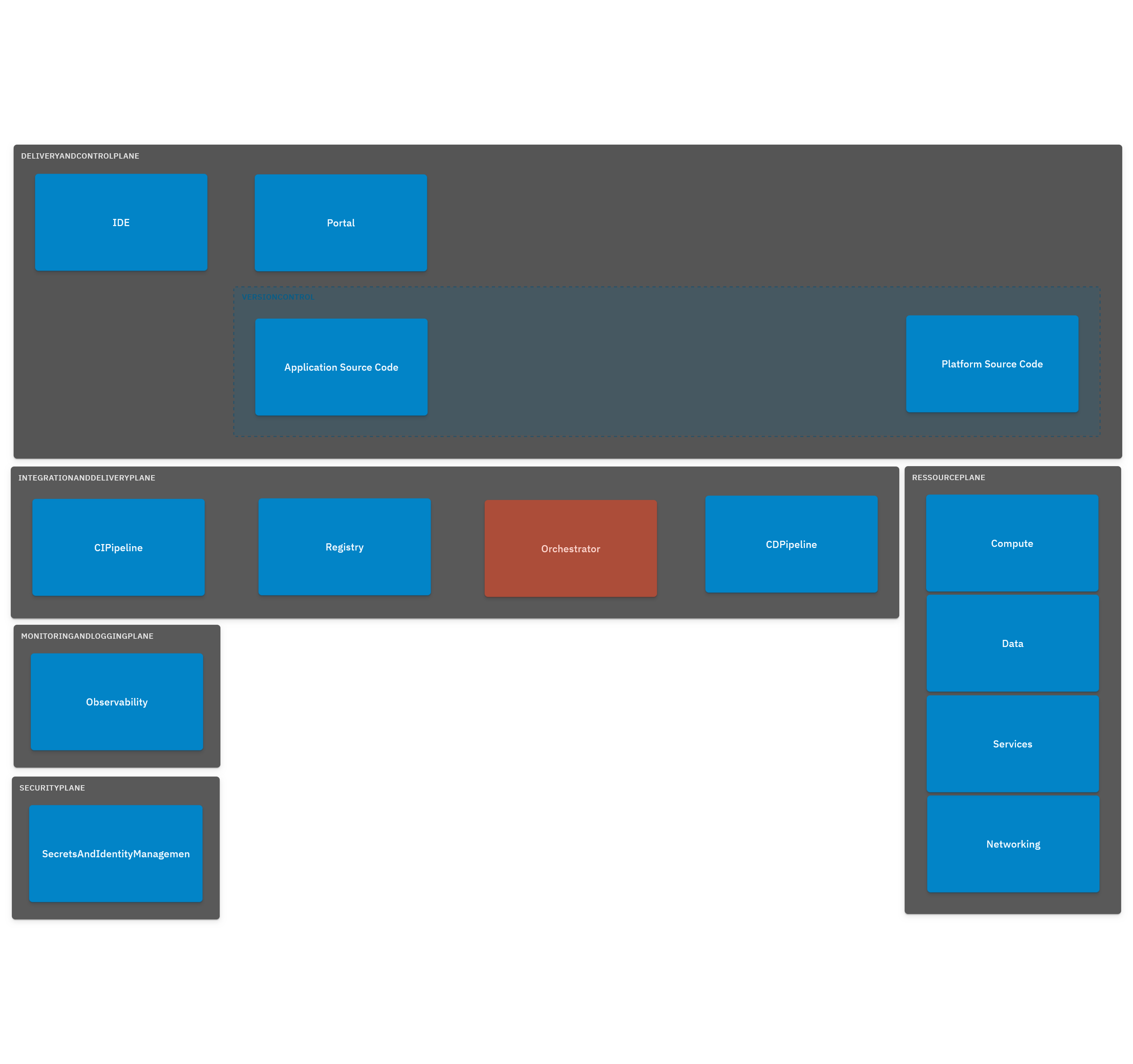

2.1.4.1.1 - Reference Architecture

The Structure of a Successful Internal Developer Platform

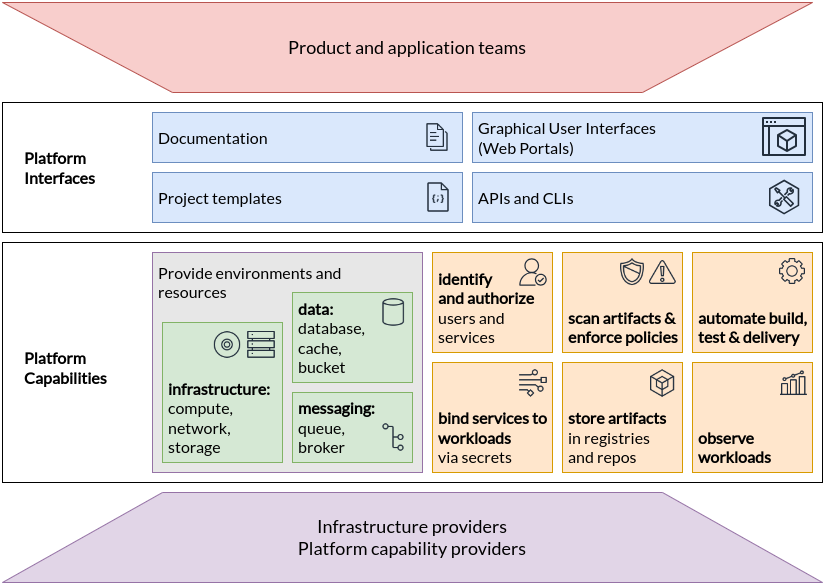

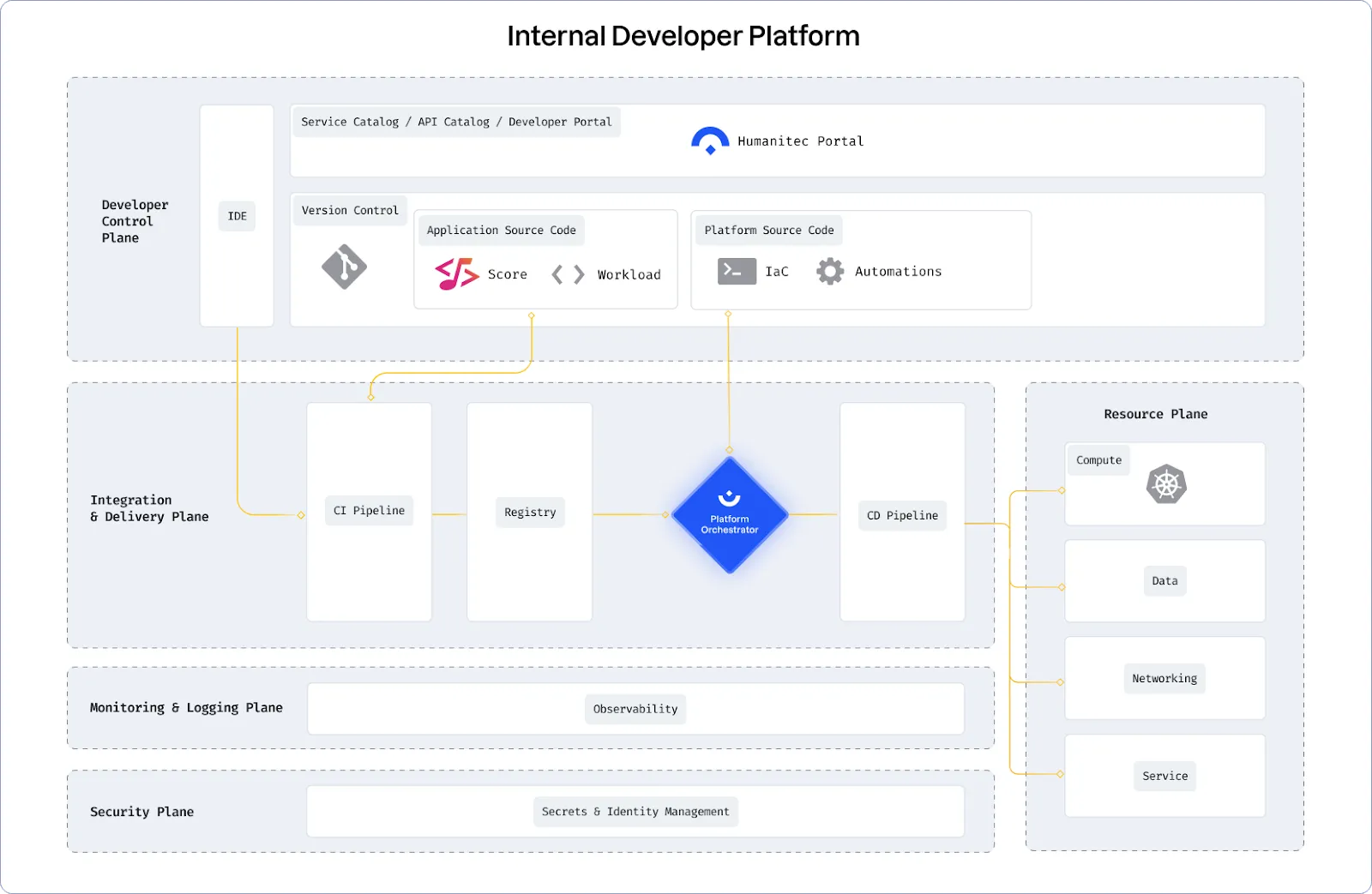

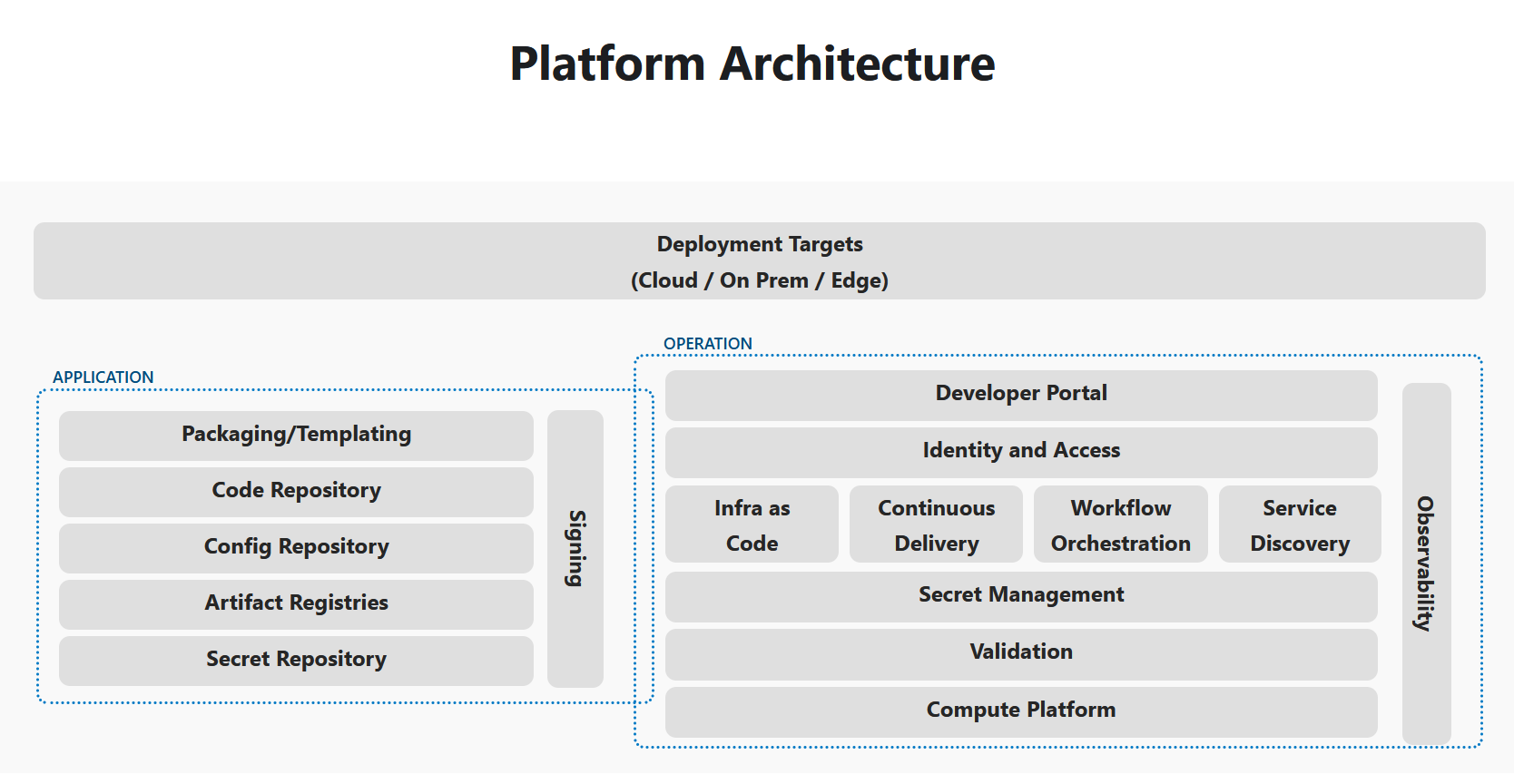

In a platform reference architecture there are five main planes that make up an IDP:

- Developer Control Plane – this is the primary configuration layer and interaction point for the platform users. Components include Workload specifications such as Score and a portal for developers to interact with.

- Integration and Delivery Plane – this plane is about building and storing the image, creating app and infra configs, and deploying the final state. It usually contains a CI pipeline, an image registry, a Platform Orchestrator, and the CD system.

- Resource Plane – this is where the actual infrastructure exists including clusters, databases, storage or DNS services. 4, Monitoring and Logging Plane – provides real-time metrics and logs for apps and infrastructure.

- Security Plane – manages secrets and identity to protect sensitive information, e.g., storing, managing, and security retrieving API keys and credentials/secrets.

(source: https://humanitec.com/blog/wtf-internal-developer-platform-vs-internal-developer-portal-vs-paas)

Humanitec

https://github.com/humanitec-architecture

https://humanitec.com/reference-architectures

Create a reference architecture

2.1.4.2 - Platform Components

This page is in work. Right now we have in the index a collection of links describing and listing typical components and building blocks of platforms. Also we have a growing number of subsections regarding special types of components.

See also:

- https://thenewstack.io/build-an-open-source-kubernetes-gitops-platform-part-1/

- https://thenewstack.io/build-an-open-source-kubernetes-gitops-platform-part-2/

2.1.4.2.1 - CI/CD Pipeline

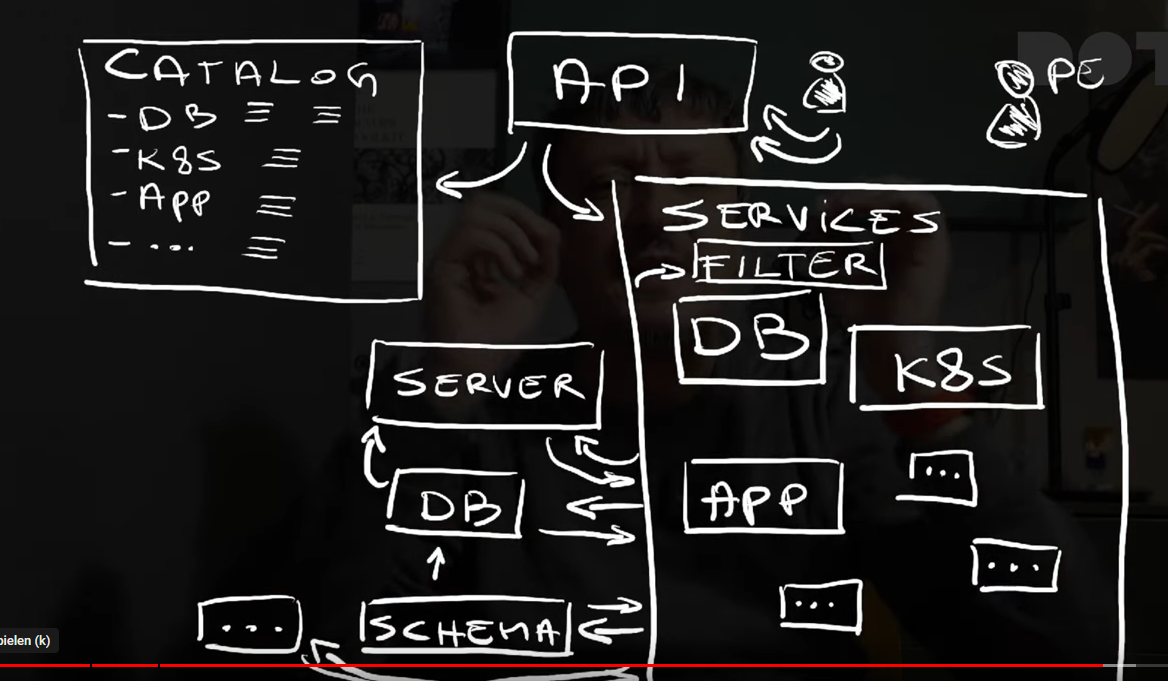

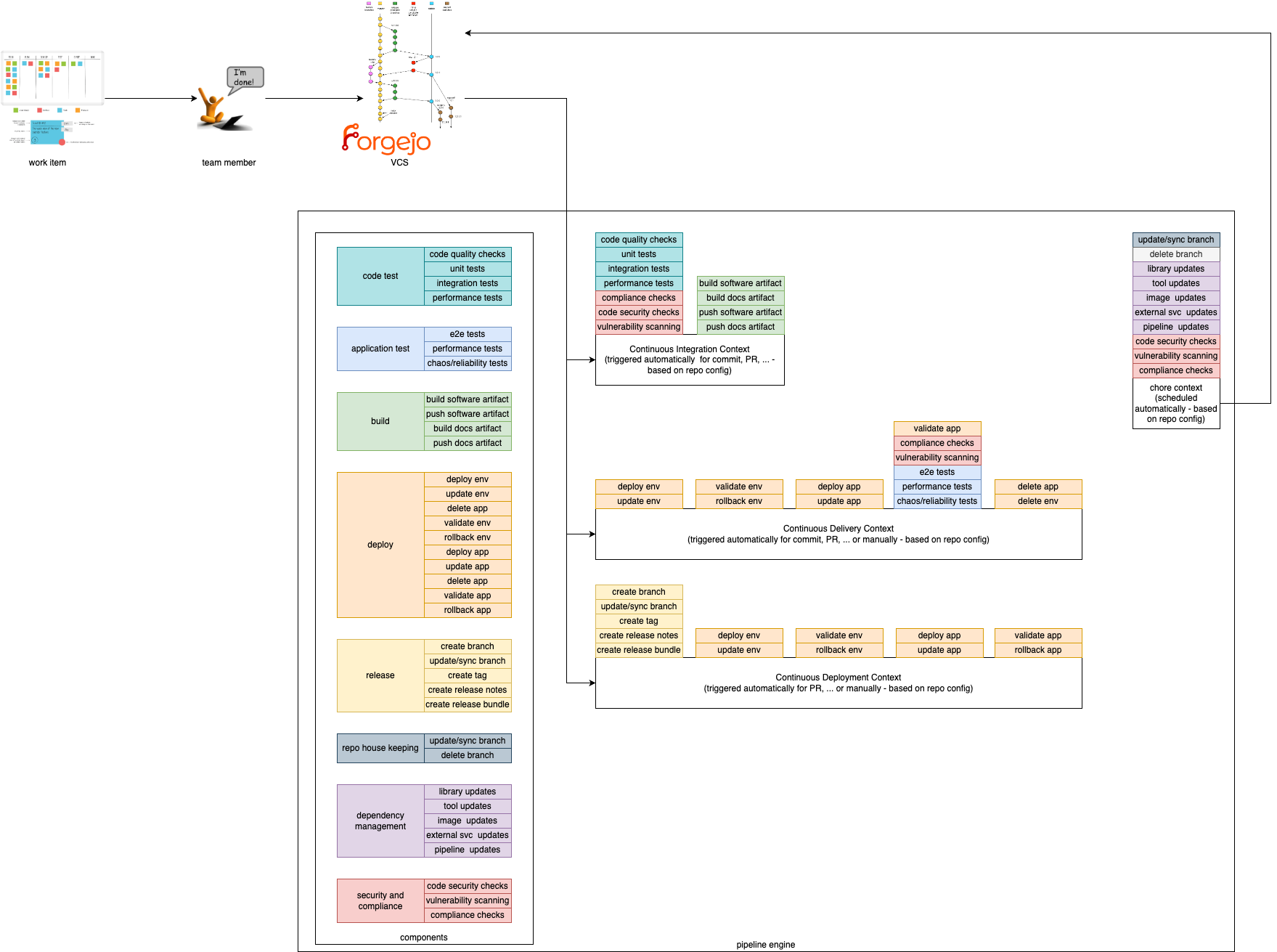

This document describes the concept of pipelining in the context of the Edge Developer Framework.

Overview

In order to provide a composable pipeline as part of the Edge Developer Framework (EDF), we have defined a set of concepts that can be used to create pipelines for different usage scenarios. These concepts are:

Pipeline Contexts define the context in which a pipeline execution is run. Typically, a context corresponds to a specific step within the software development lifecycle, such as building and testing code, deploying and testing code in staging environments, or releasing code. Contexts define which components are used, in which order, and the environment in which they are executed.

Components are the building blocks, which are used in the pipeline. They define specific steps that are executed in a pipeline such as compiling code, running tests, or deploying an application.

Pipeline Contexts

We provide 4 Pipeline Contexts that can be used to create pipelines for different usage scenarios. The contexts can be described as the golden path, which is fully configurable and extenable by the users.

Pipeline runs with a given context can be triggered by different actions. For example, a pipeline run with the Continuous Integration context can be triggered by a commit to a repository, while a pipeline run with the Continuous Delivery context could be triggered by merging a pull request to a specific branch.

Continuous Integration

This context is focused on running tests and checks on every commit to a repository. It is used to ensure that the codebase is always in a working state and that new changes do not break existing functionality. Tests within this context are typically fast and lightweight, and are used to catch simple errors such as syntax errors, typos, and basic logic errors. Static vulnerability and compliance checks can also be performed in this context.

Continuous Delivery

This context is focused on deploying code to a (ephermal) staging environment after its static checks have been performed. It is used to ensure that the codebase is always deployable and that new changes can be easily reviewed by stakeholders. Tests within this context are typically more comprehensive than those in the Continuous Integration context, and handle more complex scenarios such as integration tests and end-to-end tests. Additionally, live security and compliance checks can be performed in this context.

Continuous Deployment

This context is focused on deploying code to a production environment and/or publishing artefacts after static checks have been performed.

Chore

This context focuses on measures that need to be carried out regularly (e.g. security or compliance scans). They are used to ensure the robustness, security and efficiency of software projects. They enable teams to maintain high standards of quality and reliability while minimizing risks and allowing developers to focus on more critical and creative aspects of development, increasing overall productivity and satisfaction.

Components

Components are the composable and self-contained building blocks for the contexts described above. The aim is to cover most (common) use cases for application teams and make them particularly easy to use by following our golden paths. This way, application teams only have to include and configure the functionalities they actually need. An additional benefit is that this allows for easy extensibility. If a desired functionality has not been implemented as a component, application teams can simply add their own.

Components must be as small as possible and follow the same concepts of software development and deployment as any other software product. In particular, they must have the following characteristics:

- designed for a single task

- provide a clear and intuitive output

- easy to compose

- easily customizable or interchangeable

- automatically testable

In the EDF components are divided into different categories. Each category contains components that perform similar actions. For example, the build category contains components that compile code, while the deploy category contains components that automate the management of the artefacts created in a production-like system.

Note: Components are comparable to interfaces in programming. Each component defines a certain behaviour, but the actual implementation of these actions depends on the specific codebase and environment.

For example, the

buildcomponent defines the action of compiling code, but the actual build process depends on the programming language and build tools used in the project. Thevulnerability scanningcomponent will likely execute different tools and interact with different APIs depending on the context in which it is executed.

Build

Build components are used to compile code. They can be used to compile code written in different programming languages, and can be used to compile code for different platforms.

Code Test

These components define tests that are run on the codebase. They are used to ensure that the codebase is always in a working state and that new changes do not break existing functionality. Tests within this category are typically fast and lightweight, and are used to catch simple errors such as syntax errors, typos, and basic logic errors. Tests must be executable in isolation, and do not require external dependencies such as databases or network connections.

Application Test

Application tests are tests, which run the code in a real execution environment, and provide external dependencies. These tests are typically more comprehensive than those in the Code Test category, and handle more complex scenarios such as integration tests and end-to-end tests.

Deploy

Deploy components are used to deploy code to different environments, but can also be used to publish artifacts. They are typically used in the Continuous Delivery and Continuous Deployment contexts.

Release

Release components are used to create releases of the codebase. They can be used to create tags in the repository, create release notes, or perform other tasks related to releasing code. They are typically used in the Continuous Deployment context.

Repo House Keeping

Repo house keeping components are used to manage the repository. They can be used to clean up old branches, update the repository’s README file, or perform other maintenance tasks. They can also be used to handle issues, such as automatically closing stale issues.

Dependency Management

Dependency management is used to automate the process of managing dependencies in a codebase. It can be used to create pull requests with updated dependencies, or to automatically update dependencies in a codebase.

Security and Compliance

Security and compliance components are used to ensure that the codebase meets security and compliance requirements. They can be used to scan the codebase for vulnerabilities, check for compliance with coding standards, or perform other security and compliance checks. Depending on the context, different tools can be used to accomplish scanning. In the Continuous Integration context, static code analysis can be used to scan the codebase for vulnerabilities, while in the Continuous Delivery context, live security and compliance checks can be performed.

2.1.4.2.1.1 -

Gitops changes the definition of ‘Delivery’ or ‘Deployment’

We have Gitops these days …. so there is a desired state of an environment in a repo and a reconciling mechanism done by Gitops to enforce this state on the environemnt.

There is no continuous whatever step inbetween … Gitops is just ‘overwriting’ (to avoid saying ‘delivering’ or ‘deploying’) the environment with the new state.

This means whatever quality ensuring steps have to take part before ‘overwriting’ have to be defined as state changer in the repos, not in the environments.

Conclusio: I think we only have three contexts, or let’s say we don’t have the contect ‘continuous delivery’

2.1.4.2.2 - Developer Portals

This page is in work. Right now we have in the index a collection of links describing developer portals.

- Backstage (siehe auch https://nl.devoteam.com/expert-view/project-unox/)

- Port

- Cortex

- Humanitec

- OpsLevel

- https://www.configure8.io/

- … tbc …

Port’s Comparison vs. Backstage

https://www.getport.io/compare/backstage-vs-port

Cortex’s Comparison vs. Backstage, Port, OpsLevel

Service Catalogue

- https://humanitec.com/blog/service-catalogs-and-internal-developer-platforms

- https://dzone.com/articles/the-differences-between-a-service-catalog-internal

Links

2.1.4.2.3 - Platform Orchestrator

‘Platform Orchestration’ is first mentionned by Thoughtworks in Sept 2023

Links

- portals-vs-platform-orchestrator

- kratix.io

- https://internaldeveloperplatform.org/platform-orchestrators/

- backstack.dev

CNOE

- cnoe.io

Resources

2.1.4.2.4 - List of references

CNCF

Here are capability domains to consider when building platforms for cloud-native computing:

- Web portals for observing and provisioning products and capabilities

- APIs (and CLIs) for automatically provisioning products and capabilities

- “Golden path” templates and docs enabling optimal use of capabilities in products

- Automation for building and testing services and products

- Automation for delivering and verifying services and products

- Development environments such as hosted IDEs and remote connection tools

- Observability for services and products using instrumentation and dashboards, including observation of functionality, performance and costs

- Infrastructure services including compute runtimes, programmable networks, and block and volume storage

- Data services including databases, caches, and object stores

- Messaging and event services including brokers, queues, and event fabrics

- Identity and secret management services such as service and user identity and authorization, certificate and key issuance, and static secret storage

- Security services including static analysis of code and artifacts, runtime analysis, and policy enforcement

- Artifact storage including storage of container image and language-specific packages, custom binaries and libraries, and source code

IDP

An Internal Developer Platform (IDP) should be built to cover 5 Core Components:

| Core Component | Short Description |

|---|---|

| Application Configuration Management | Manage application configuration in a dynamic, scalable and reliable way. |

| Infrastructure Orchestration | Orchestrate your infrastructure in a dynamic and intelligent way depending on the context. |

| Environment Management | Enable developers to create new and fully provisioned environments whenever needed. |

| Deployment Management | Implement a delivery pipeline for Continuous Delivery or even Continuous Deployment (CD). |

| Role-Based Access Control | Manage who can do what in a scalable way. |

2.1.5 - Platform Orchestrators

2.1.5.1 - CNOE

The goal for the CNOE framework is to bring together a cohort of enterprises operating at the same scale so that they can navigate their operational technology decisions together, de-risk their tooling bets, coordinate contribution, and offer guidance to large enterprises on which CNCF technologies to use together to achieve the best cloud efficiencies.

Aussprache

- Englisch Kuh.noo,

- also ‘Kanu’ im Deutschen

Architecture

Run the CNOEs reference implementation

See https://cnoe.io/docs/reference-implementation/integrations/reference-impl:

# in a local terminal with docker and kind

idpbuilder create --use-path-routing --log-level debug --package-dir https://github.com/cnoe-io/stacks//ref-implementation

Output

time=2024-08-05T14:48:33.348+02:00 level=INFO msg="Creating kind cluster" logger=setup

time=2024-08-05T14:48:33.371+02:00 level=INFO msg="Runtime detected" logger=setup provider=docker

########################### Our kind config ############################

# Kind kubernetes release images https://github.com/kubernetes-sigs/kind/releases

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

image: "kindest/node:v1.29.2"

kubeadmConfigPatches:

- |

kind: InitConfiguration

nodeRegistration:

kubeletExtraArgs:

node-labels: "ingress-ready=true"

extraPortMappings:

- containerPort: 443

hostPort: 8443

protocol: TCP

containerdConfigPatches:

- |-

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."gitea.cnoe.localtest.me:8443"]

endpoint = ["https://gitea.cnoe.localtest.me"]

[plugins."io.containerd.grpc.v1.cri".registry.configs."gitea.cnoe.localtest.me".tls]

insecure_skip_verify = true

######################### config end ############################

time=2024-08-05T14:48:33.394+02:00 level=INFO msg="Creating kind cluster" logger=setup cluster=localdev

time=2024-08-05T14:48:53.680+02:00 level=INFO msg="Done creating cluster" logger=setup cluster=localdev

time=2024-08-05T14:48:53.905+02:00 level=DEBUG+3 msg="Getting Kube config" logger=setup

time=2024-08-05T14:48:53.908+02:00 level=DEBUG+3 msg="Getting Kube client" logger=setup

time=2024-08-05T14:48:53.908+02:00 level=INFO msg="Adding CRDs to the cluster" logger=setup

time=2024-08-05T14:48:53.948+02:00 level=DEBUG+3 msg="crd not yet established, waiting." "crd name"=custompackages.idpbuilder.cnoe.io

time=2024-08-05T14:48:53.954+02:00 level=DEBUG+3 msg="crd not yet established, waiting." "crd name"=custompackages.idpbuilder.cnoe.io

time=2024-08-05T14:48:53.957+02:00 level=DEBUG+3 msg="crd not yet established, waiting." "crd name"=custompackages.idpbuilder.cnoe.io

time=2024-08-05T14:48:53.981+02:00 level=DEBUG+3 msg="crd not yet established, waiting." "crd name"=gitrepositories.idpbuilder.cnoe.io

time=2024-08-05T14:48:53.985+02:00 level=DEBUG+3 msg="crd not yet established, waiting." "crd name"=gitrepositories.idpbuilder.cnoe.io

time=2024-08-05T14:48:54.734+02:00 level=DEBUG+3 msg="Creating controller manager" logger=setup

time=2024-08-05T14:48:54.737+02:00 level=DEBUG+3 msg="Created temp directory for cloning repositories" logger=setup dir=/tmp/idpbuilder-localdev-2865684949

time=2024-08-05T14:48:54.737+02:00 level=INFO msg="Setting up CoreDNS" logger=setup

time=2024-08-05T14:48:54.798+02:00 level=INFO msg="Setting up TLS certificate" logger=setup

time=2024-08-05T14:48:54.811+02:00 level=DEBUG+3 msg="Creating/getting certificate" logger=setup host=cnoe.localtest.me sans="[cnoe.localtest.me *.cnoe.localtest.me]"

time=2024-08-05T14:48:54.825+02:00 level=DEBUG+3 msg="Creating secret for certificate" logger=setup host=cnoe.localtest.me

time=2024-08-05T14:48:54.832+02:00 level=DEBUG+3 msg="Running controllers" logger=setup

time=2024-08-05T14:48:54.833+02:00 level=DEBUG+3 msg="starting manager"

time=2024-08-05T14:48:54.833+02:00 level=INFO msg="Creating localbuild resource" logger=setup

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting EventSource" controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage source="kind source: *v1alpha1.CustomPackage"

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting EventSource" controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository source="kind source: *v1alpha1.GitRepository"

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting Controller" controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting Controller" controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting EventSource" controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild source="kind source: *v1alpha1.Localbuild"

time=2024-08-05T14:48:54.834+02:00 level=INFO msg="Starting Controller" controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild

time=2024-08-05T14:48:54.937+02:00 level=INFO msg="Starting workers" controller=gitrepository controllerGroup=idpbuilder.cnoe.io controllerKind=GitRepository "worker count"=1

time=2024-08-05T14:48:54.937+02:00 level=INFO msg="Starting workers" controller=custompackage controllerGroup=idpbuilder.cnoe.io controllerKind=CustomPackage "worker count"=1

time=2024-08-05T14:48:54.937+02:00 level=INFO msg="Starting workers" controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild "worker count"=1

time=2024-08-05T14:48:56.863+02:00 level=DEBUG+3 msg=Reconciling controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild Localbuild.name=localdev namespace="" name=localdev reconcileID=cc0e5b9d-4952-4fd1-9d62-6d9821f180be resource=/localdev

time=2024-08-05T14:48:56.863+02:00 level=DEBUG+3 msg="Create or update namespace" controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild Localbuild.name=localdev namespace="" name=localdev reconcileID=cc0e5b9d-4952-4fd1-9d62-6d9821f180be resource="&Namespace{ObjectMeta:{idpbuilder-localdev 0 0001-01-01 00:00:00 +0000 UTC <nil> <nil> map[] map[] [] [] []},Spec:NamespaceSpec{Finalizers:[],},Status:NamespaceStatus{Phase:,Conditions:[]NamespaceCondition{},},}"

time=2024-08-05T14:48:56.983+02:00 level=DEBUG+3 msg="installing core packages" controller=localbuild controllerGroup=idpbuilder.cnoe.io controllerKind=Localbuild Localbuild.name=localdev namespace="" name=localdev reconcileID=cc0e5b9d-4952-4fd1-9d62-6d9821f180be

time=2024-08-05T14:

...

time=2024-08-05T14:51:04.166+02:00 level=INFO msg="Stopping and waiting for webhooks"

time=2024-08-05T14:51:04.166+02:00 level=INFO msg="Stopping and waiting for HTTP servers"

time=2024-08-05T14:51:04.166+02:00 level=INFO msg="Wait completed, proceeding to shutdown the manager"

########################### Finished Creating IDP Successfully! ############################

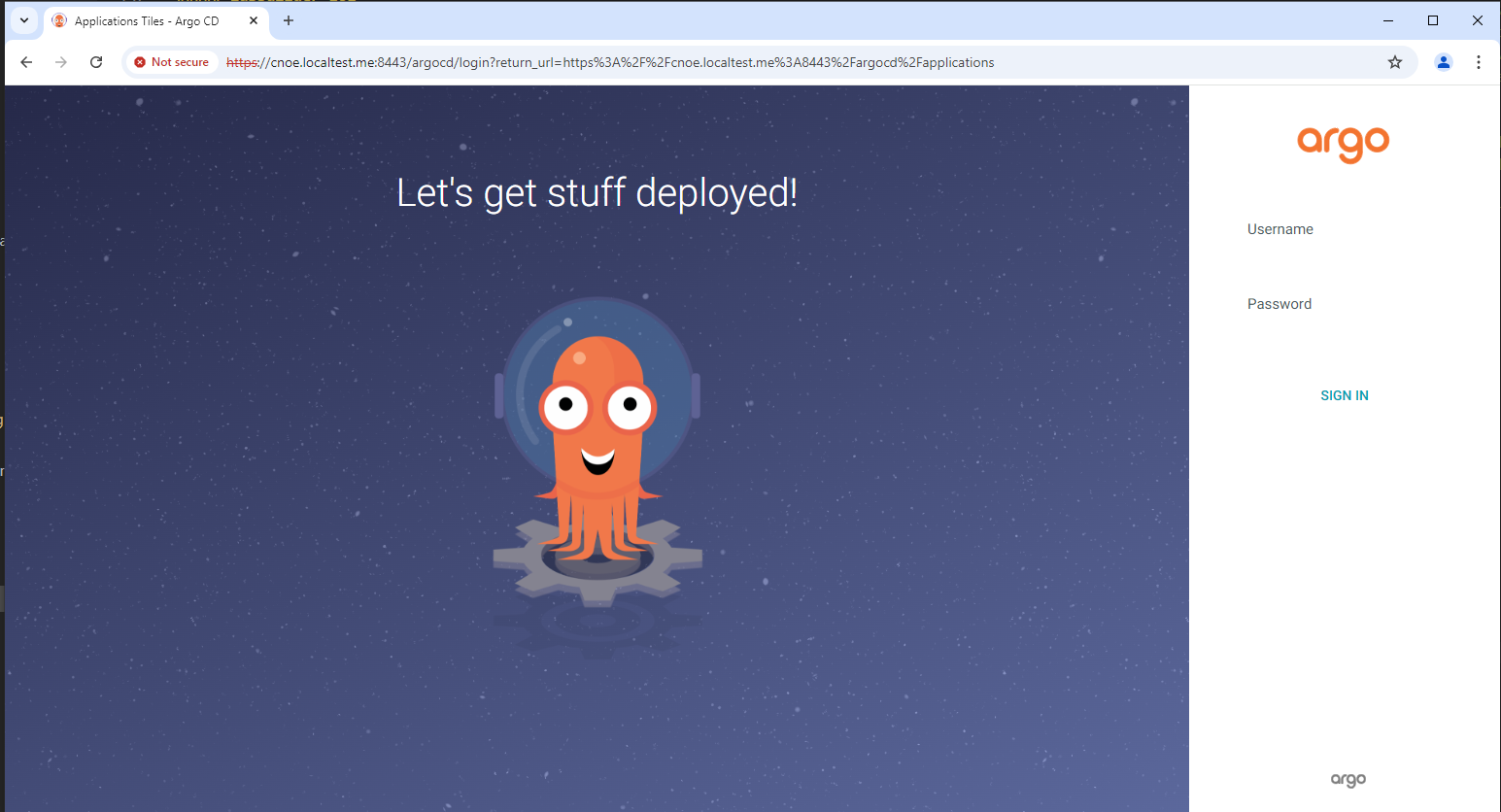

Can Access ArgoCD at https://cnoe.localtest.me:8443/argocd

Username: admin

Password can be retrieved by running: idpbuilder get secrets -p argocd

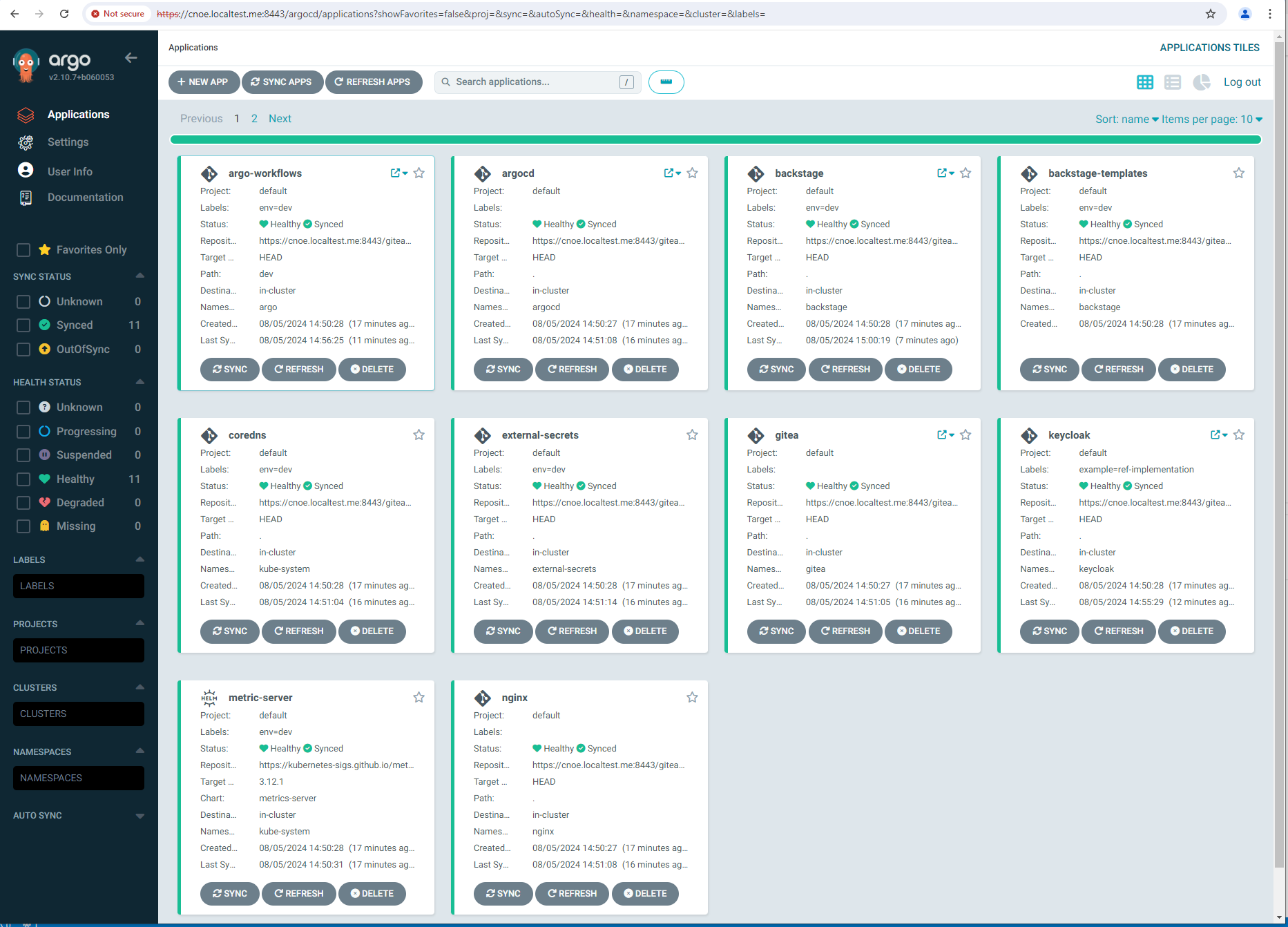

Outcome

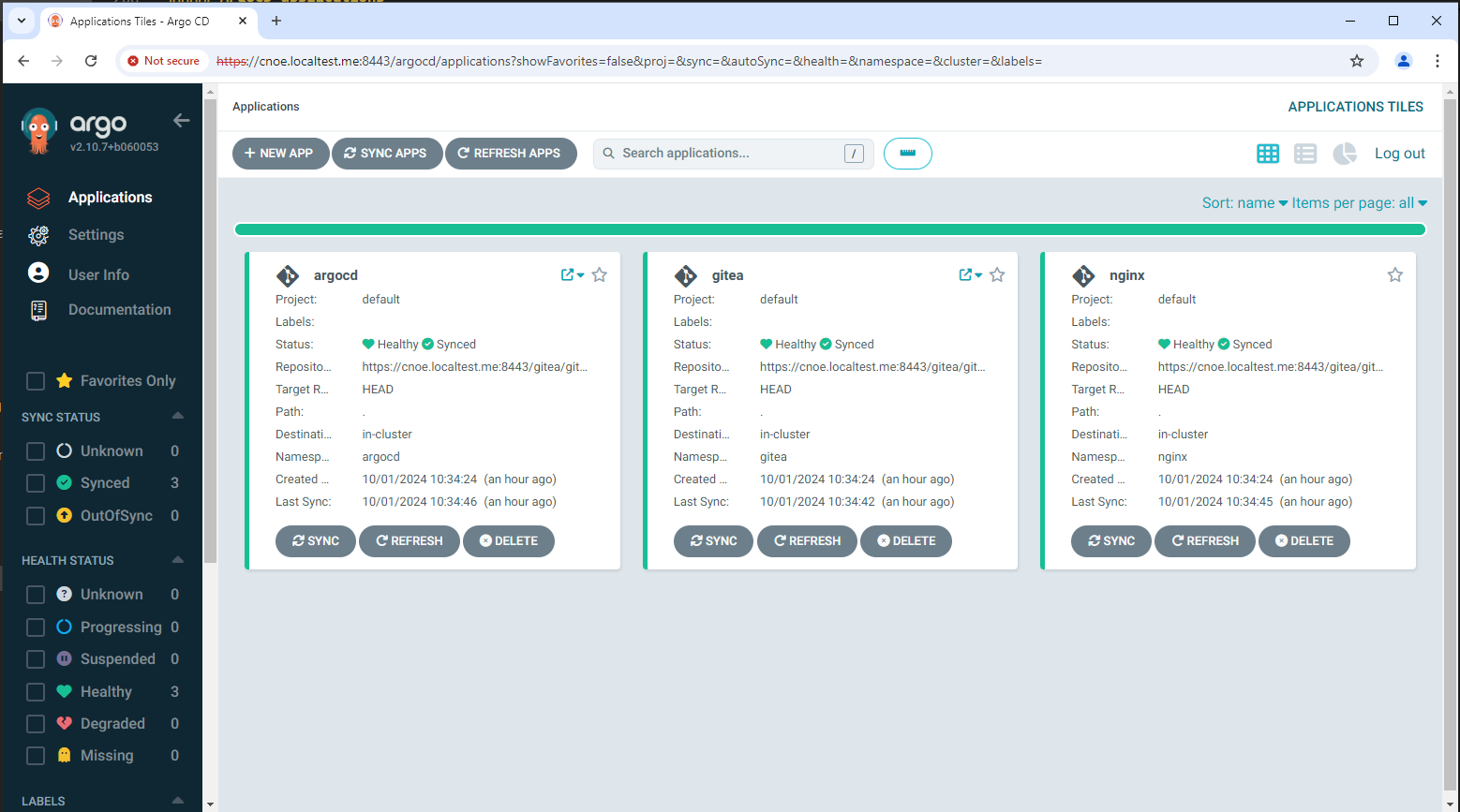

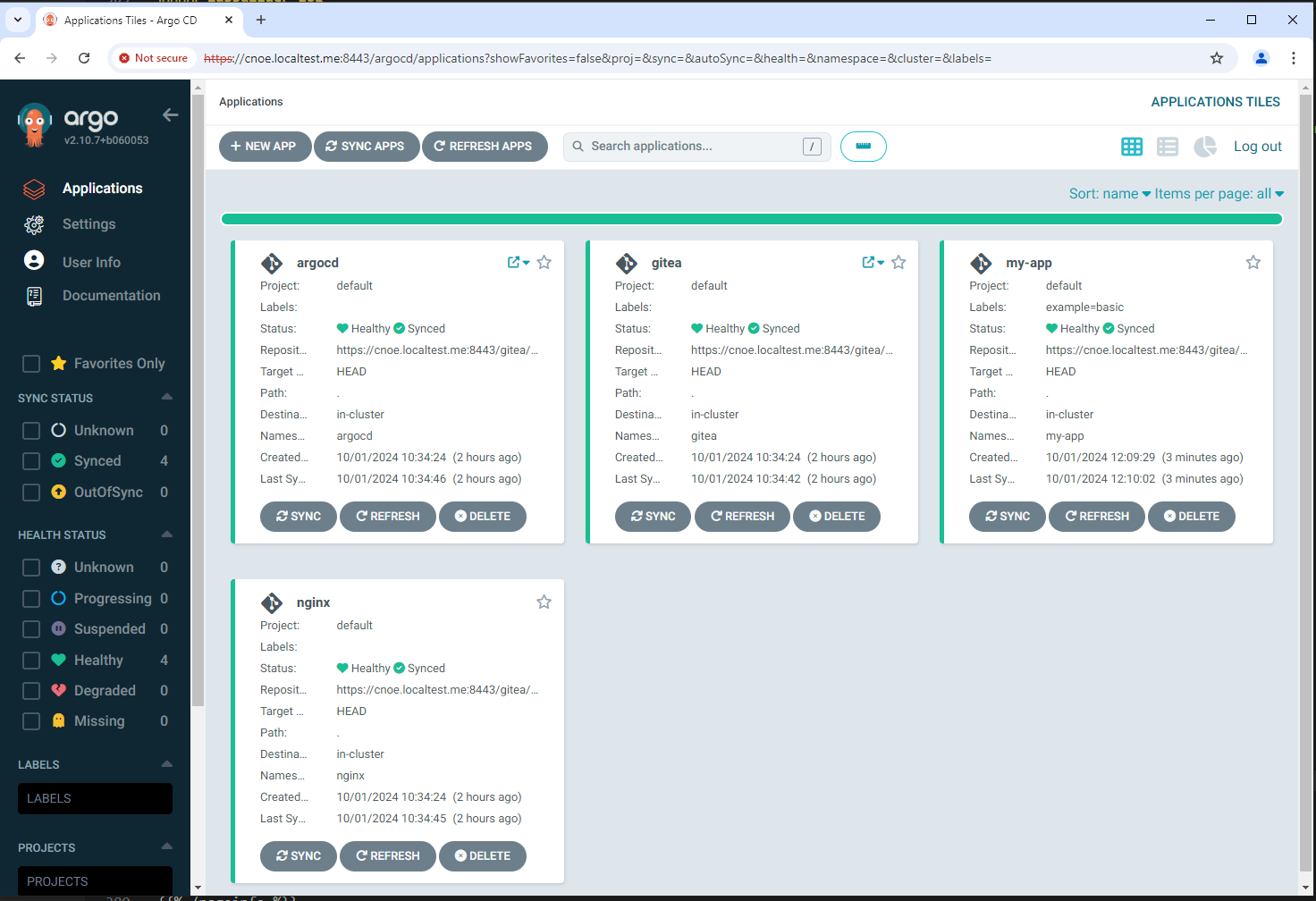

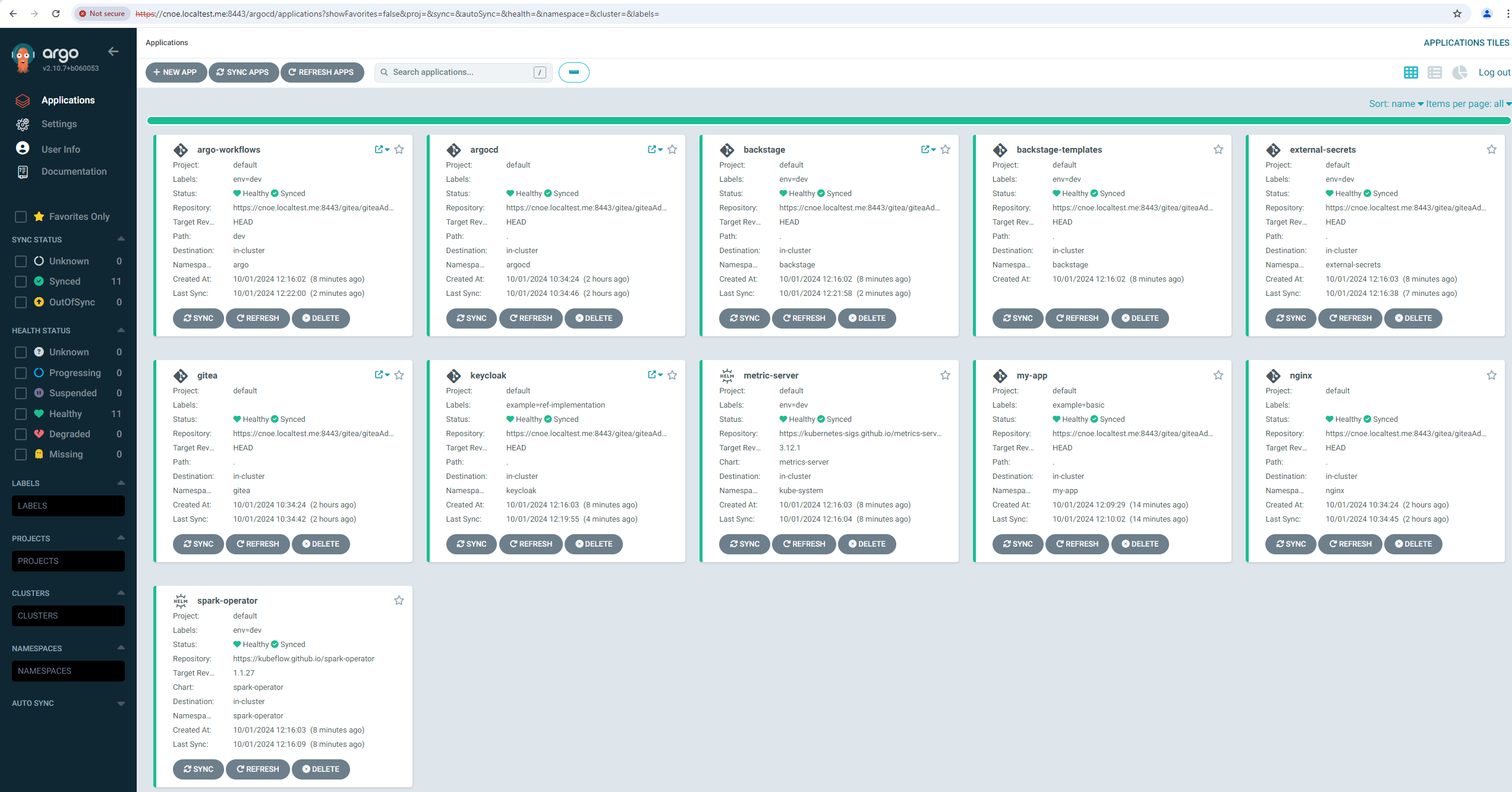

Nach ca. 10 minuten sind alle applications ausgerollt (am längsten dauert Backstage):

stl@ubuntu-vpn:~$ kubectl get applications -A

NAMESPACE NAME SYNC STATUS HEALTH STATUS

argocd argo-workflows Synced Healthy

argocd argocd Synced Healthy

argocd backstage Synced Healthy

argocd included-backstage-templates Synced Healthy

argocd coredns Synced Healthy

argocd external-secrets Synced Healthy

argocd gitea Synced Healthy

argocd keycloak Synced Healthy

argocd metric-server Synced Healthy

argocd nginx Synced Healthy

argocd spark-operator Synced Healthy

stl@ubuntu-vpn:~$ idpbuilder get secrets

---------------------------

Name: argocd-initial-admin-secret

Namespace: argocd

Data:

password : sPMdWiy0y0jhhveW

username : admin

---------------------------

Name: gitea-credential

Namespace: gitea

Data:

password : |iJ+8gG,(Jj?cc*G>%(i'OA7@(9ya3xTNLB{9k'G

username : giteaAdmin

---------------------------

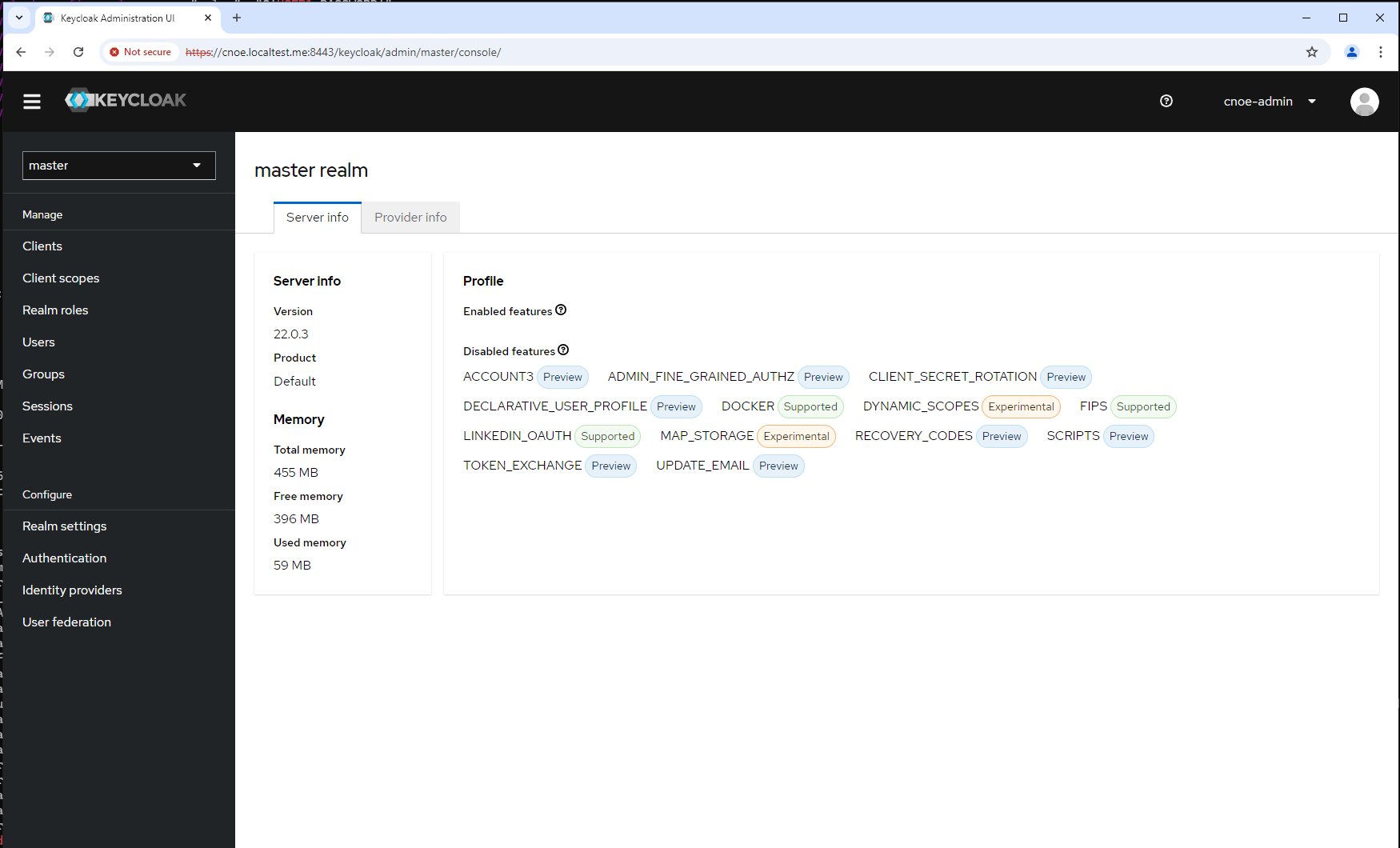

Name: keycloak-config

Namespace: keycloak

Data:

KC_DB_PASSWORD : ES-rOE6MXs09r+fAdXJOvaZJ5I-+nZ+hj7zF

KC_DB_USERNAME : keycloak

KEYCLOAK_ADMIN_PASSWORD : BBeMUUK1CdmhKWxZxDDa1c5A+/Z-dE/7UD4/

POSTGRES_DB : keycloak

POSTGRES_PASSWORD : ES-rOE6MXs09r+fAdXJOvaZJ5I-+nZ+hj7zF

POSTGRES_USER : keycloak

USER_PASSWORD : RwCHPvPVMu+fQM4L6W/q-Wq79MMP+3CN-Jeo

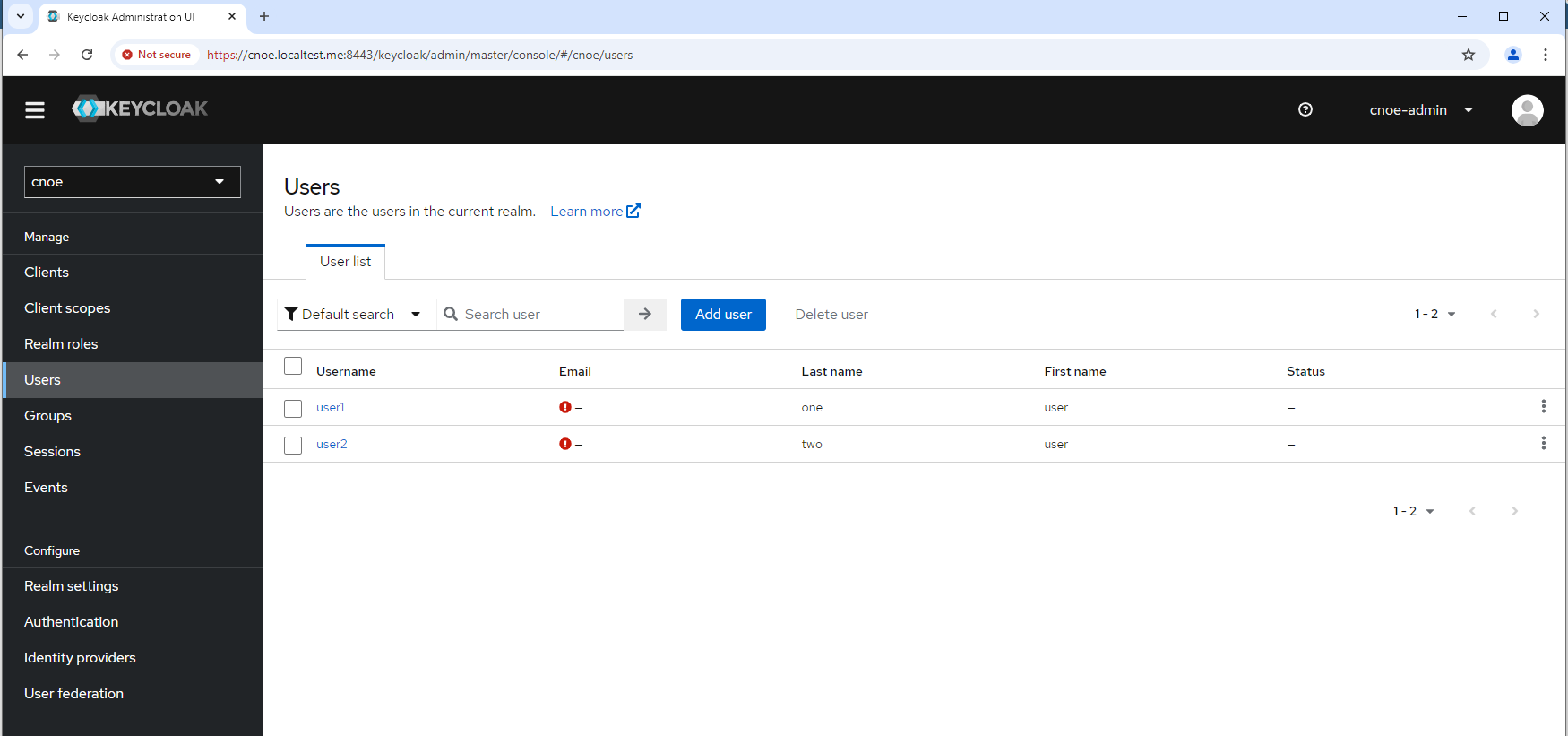

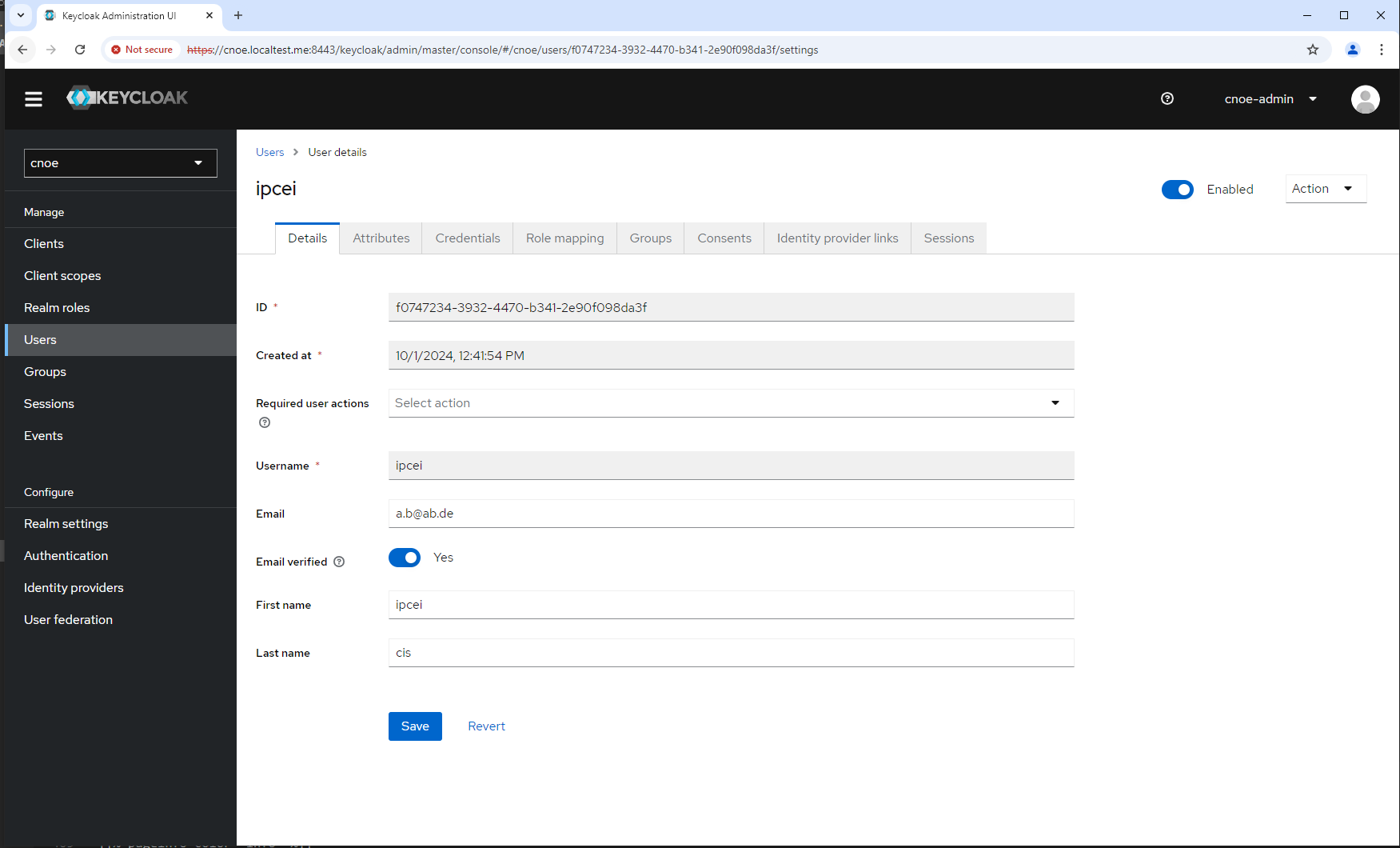

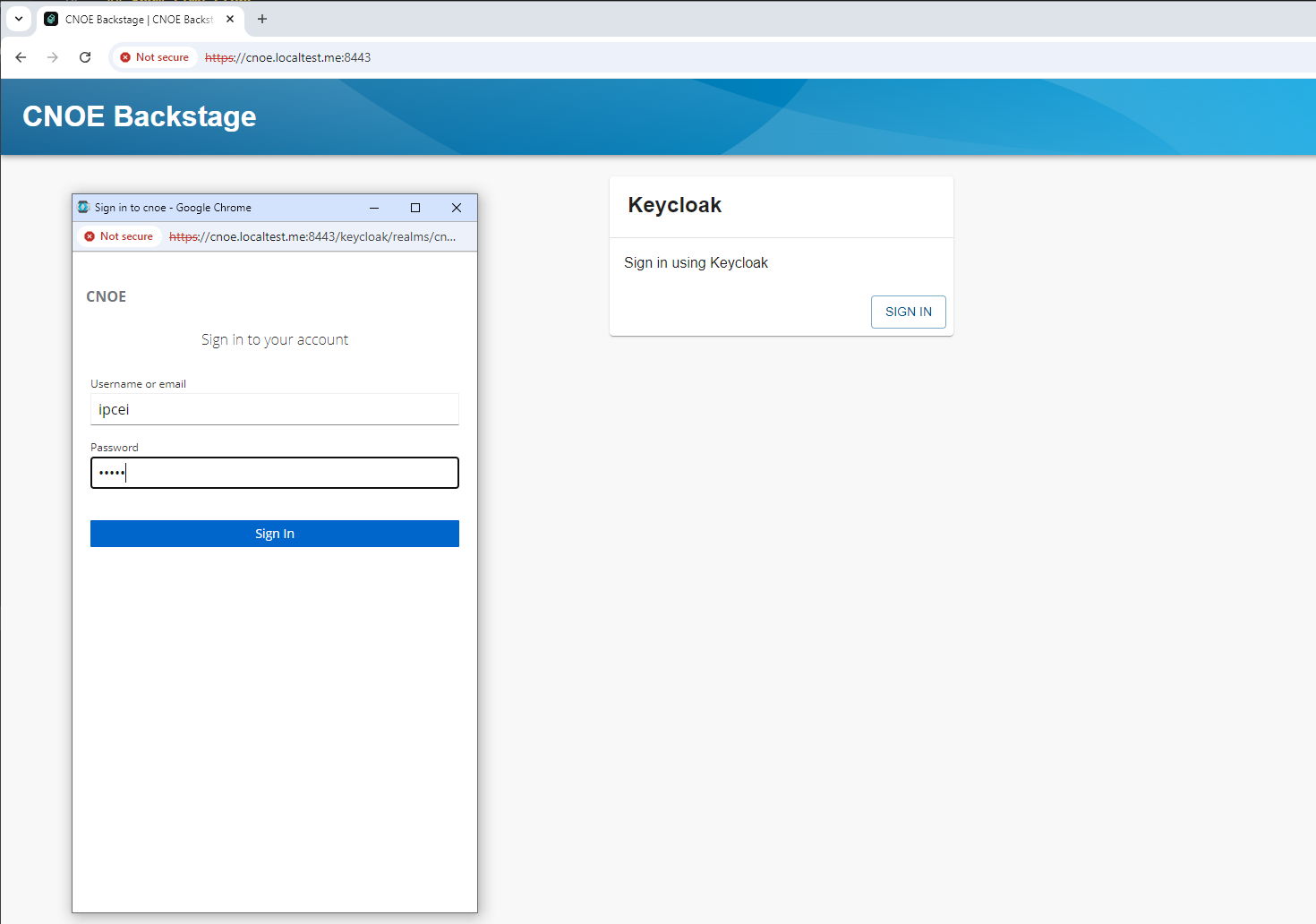

login to backstage

login geht mit den Creds, siehe oben:

2.1.5.2 - Humanitec

tbd

2.2 - Solution

2.2.1 - Design

This design documentation structure is inspired by the design of crossplane.

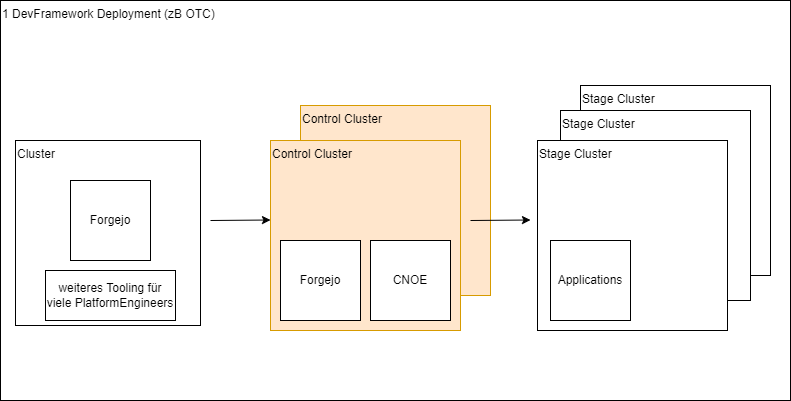

2.2.1.1 - Agnostic EDF Deployment

- Type: Proposal

- Owner: Stephan Lo (stephan.lo@telekom.de)

- Reviewers: EDF Architects

- Status: Speculative, revision 0.1

Background

EDF is running as a controlplane - or let’s say an orchestration plane, correct wording is still to be defined - in a kubernetes cluster. Right now we have at least ArgoCD as controller of manifests which we provide as CNOE stacks of packages and standalone packages.

Proposal

The implementation of EDF must be kubernetes provider agnostic. Thus each provider specific deployment dependency must be factored out into provider specific definitions or deployment procedures.

Local deployment

This implies that EDF must always be deployable into a local cluster, whereby by ’local’ we mean a cluster which is under the full control of the platform engineer, e.g. a kind cluster on their laptop.

2.2.1.2 - Agnostic Stack Definition

- Type: Proposal

- Owner: Stephan Lo (stephan.lo@telekom.de)

- Reviewers: EDF Architects

- Status: Speculative, revision 0.1

Background

When booting and reconciling the ‘final’ stack exectuting orchestrator (here: ArgoCD) needs to get rendered (or hydrated) presentations of the manifests.

It is not possible or unwanted that the orchestrator itself resolves dependencies or configuration values.

Proposal

The hydration takes place for all target clouds/kubernetes providers. There is no ‘default’ or ‘special’ setup, like the Kind version.

Local development

This implies that in a development process there needs to be a build step hydrating the ArgoCD manifests for the targeted cloud.

Reference

Discussion from Robert and Stephan-Pierre in the context of stack development - there should be an easy way to have locally changed stacks propagated into the local running platform.

2.2.1.3 - eDF is self-contained and has an own IAM (WiP)

- Type: Proposal

- Owner: Stephan Lo (stephan.lo@telekom.de)

- Reviewers: EDF Architects

- Status: Speculative, revision 0.1

Background

tbd

Proposal

==== 1 =====

There is a core eDF which is self-contained and does not have any impelmented dependency to external platforms. eDF depends on abstractions. Each embdding into customer infrastructure works with adapters which implement the abstraction.

==== 2 =====

eDF has an own IAM. This may either hold the principals and permissions itself when there is no other IAM or proxy and map them when integrated into external enterprise IAMs.

Reference

Arch call from 4.12.24, Florian, Stefan, Stephan-Pierre

2.2.1.4 -

why we have architectural documentation

TN: Robert, Patrick, Stefan, Stephan 25.2.25, 13-14h

referring Tickets / Links

- https://jira.telekom-mms.com/browse/IPCEICIS-2424

- https://jira.telekom-mms.com/browse/IPCEICIS-478

- Confluence: https://confluence.telekom-mms.com/display/IPCEICIS/Architecture

charts

we need charts, because:

- external stakeholders (especially architects) want to understand our product and component structure(*)

- our team needs visualization in technical discussions(**)

- we need to have discussions during creating the documentation

(*): marker: “jetzt hab’ ich das erste mal so halbwegs verstanden was ihr da überhaupt macht” (**) marker: ????

typed of charts

- schichtenmodell (frontend, middleware, backend)

- bebauungsplan mit abhängigkeiten, domänen

- kontext von außen

- komponentendiagramm,

decisions

- openbao is backend-system, wird über apis erreicht

further topics / new requirements

- runbook (compare to openbao discussions)

- persistenz der EDP konfiguartion (zb postgres)

- OIDC vs. SSI

2.2.1.5 -

arbeitsteilung arcihtekur, nach innen und nach aussen

Sebastiano, Stefan, Robert, Patrick, Stephan 25.2.25, 14-15h

links

montags-call

- Sebasriano im montags-call, inklusive florian, mindestens interim, solange wir keinen architektur-aussenminister haben

workshops

- nach abstimmung mit hasan zu platform workshops

- weitere beteiligung in weiteren workshop-serien to be defined

programm-alignment

- sponsoren finden

- erledigt sich durch die workshop-serien

interne architekten

- robert und patrick steigen ein

- themen-teilung

produkt struktur

edp standalone ipcei edp

architektur themen

stl

produktstruktur application model (cnoe, oam, score, xrd, …) api backstage (usage scenarios) pipelining ’everything as code’, deklaratives deployment, crossplane (bzw. orchestrator)

ggf: identity mgmt

nicht: security monitoring kubernetes internals

robert

pipelining kubernetes-inetrnals api crossplane platforming - erzeugen von ressourcen in ‘clouds’ (e.g. gcp, und hetzner :-) )

patrick

security identity-mgmt (SSI) EaC und alles andere macht mir auch total spass!

einschätzungen

- ipceicis-pltaform ist wichtigstes teilprojekt (hasan + patrick)

- offener punkt: workload-steuerung, application model (kompatibility mit EDP)

- thema security, siehe ssi vs. oidc

- wir brauchen eigene workshops zum definieren der zusammenarbiets-modi

committements

- patrick und robert nehmen teil an architektur

offen

- sebastian schwaar onboarding? (>=50%) — robert fragt

- alternative: consulting/support anfallsweise

- hält eine kubernetes einführungs-schulung –> termine sind zu vereinbaren (liegt bei sophie)

2.2.1.6 -

crossplane dawn?

- Monday, March 31, 2025

Issue

Robert worked on the kindserver reconciling.

He got aware that crossplane is able to delete clusters when drift is detected. This mustnt happen for sure in productive clusters.

Even worse, if crossplane did delete the cluster and then set it up again correctly, argocd would be out of sync and had no idea by default how to relate the old and new cluster.

Decisions

- quick solution: crosspllane doesn’t delete clusters.

- If it detects drift with a kind cluster, it shall create an alert (like email) but not act in any way

- analyze how crossplane orchestration logic calls ‘business logic’ to decide what to do.

- In this logic we could decide whether to delete resources like clusters and if so then how. Secondly an ‘orchestration’ or let’s workflow how to correctly set the old state with respect to argocd could be implemented there.

- keep terraform in mind

- we probably will need it in adapters anyway

- if the crossplane design does not fir, or the benefit is too small, or we definetly ahve more ressources in developing terraform, the we could completley switch

- focus on EDP domain and application logic

- for the momen (in MVP1) we need to focus on EDP higher level functionality

2.2.1.7 -

platform-team austausch

stefan

- initiale fragen:

- vor 2 wochen workshop tapeten-termin

- wer nimmt an den workshops teil?

- was bietet platform an?

- EDP: könnte 5mio/a kosten

- -> produkt pitch mit marko

- -> edp ist unabhängig von ipceicis cloud continuum*

- generalisierte quality of services ( <-> platform schnittstelle)

Hasan

- martin macht: agent based iac generation

- platform-workshops mitgestalten

- mms-fokus

- connectivity enabled cloud offering, e2e von infrastruktur bis endgerät

- sdk für latenzarme systeme, beraten und integrieren

- monitoring in EDP?

- beispiel ‘unity’

- vorstellung im arch call

- wie können unterschieldiche applikationsebenen auf unterschiedliche infrastruktur(compute ebenen) verteit werden

- zero touch application deployment model

- ich werde gerade ‘abgebremst’

- workshop beteiligung, TPM application model

martin

* edgeXR erlaubt keine persistenz

* openai, llm als abstarktion nicht vorhanden

* momentan nur compute vorhanden

* roaming von applikationen --> EDP muss das unterstützen

* anwendungsfall: sprachmodell übersetzt design-artifakte in architektur, dann wird provisionierung ermöglicht

? Applikations-modelle ? zusammenhang mit golden paths * zB für reines compute faas

2.2.2 - Scenarios

2.2.2.1 - Gitops

WiP - this is in work.

What kind of Gitops do we have with idpbuilder/CNOE ?

References

https://github.com/gitops-bridge-dev/gitops-bridge

2.2.2.2 - Orchestration

WiP - this is in work.

What deployment scenarios do we have with idpbuilder/CNOE ?

References

- Base Url of CNOE presentations: https://github.com/cnoe-io/presentations/tree/main

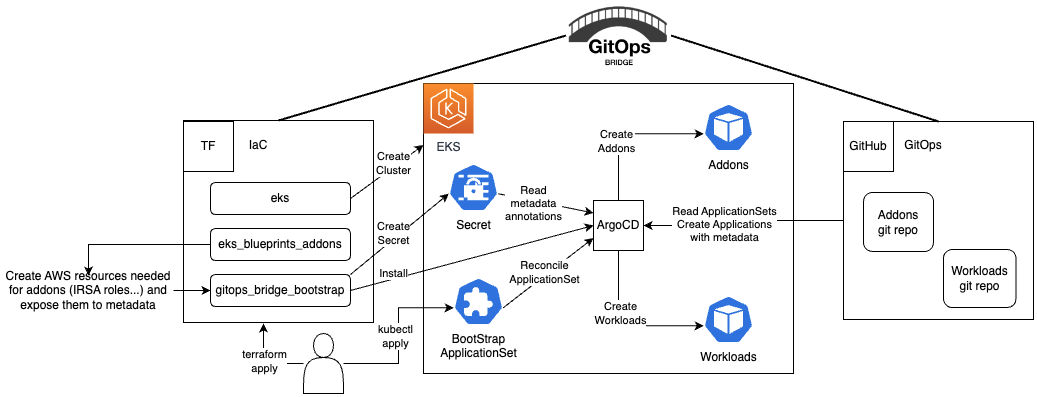

CNOE in EKS

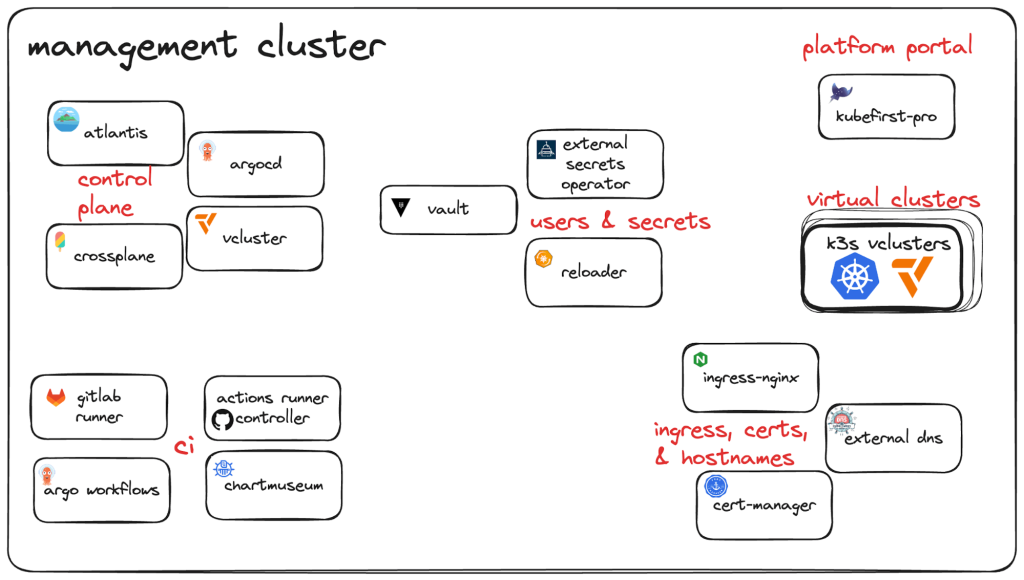

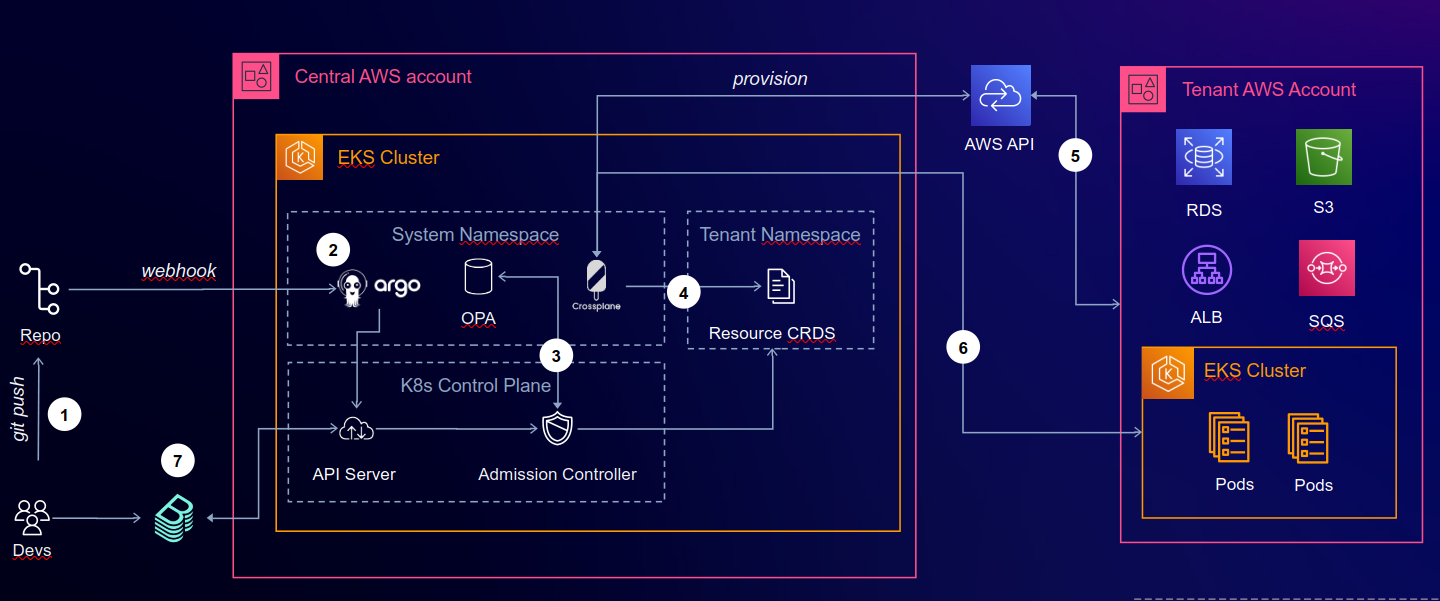

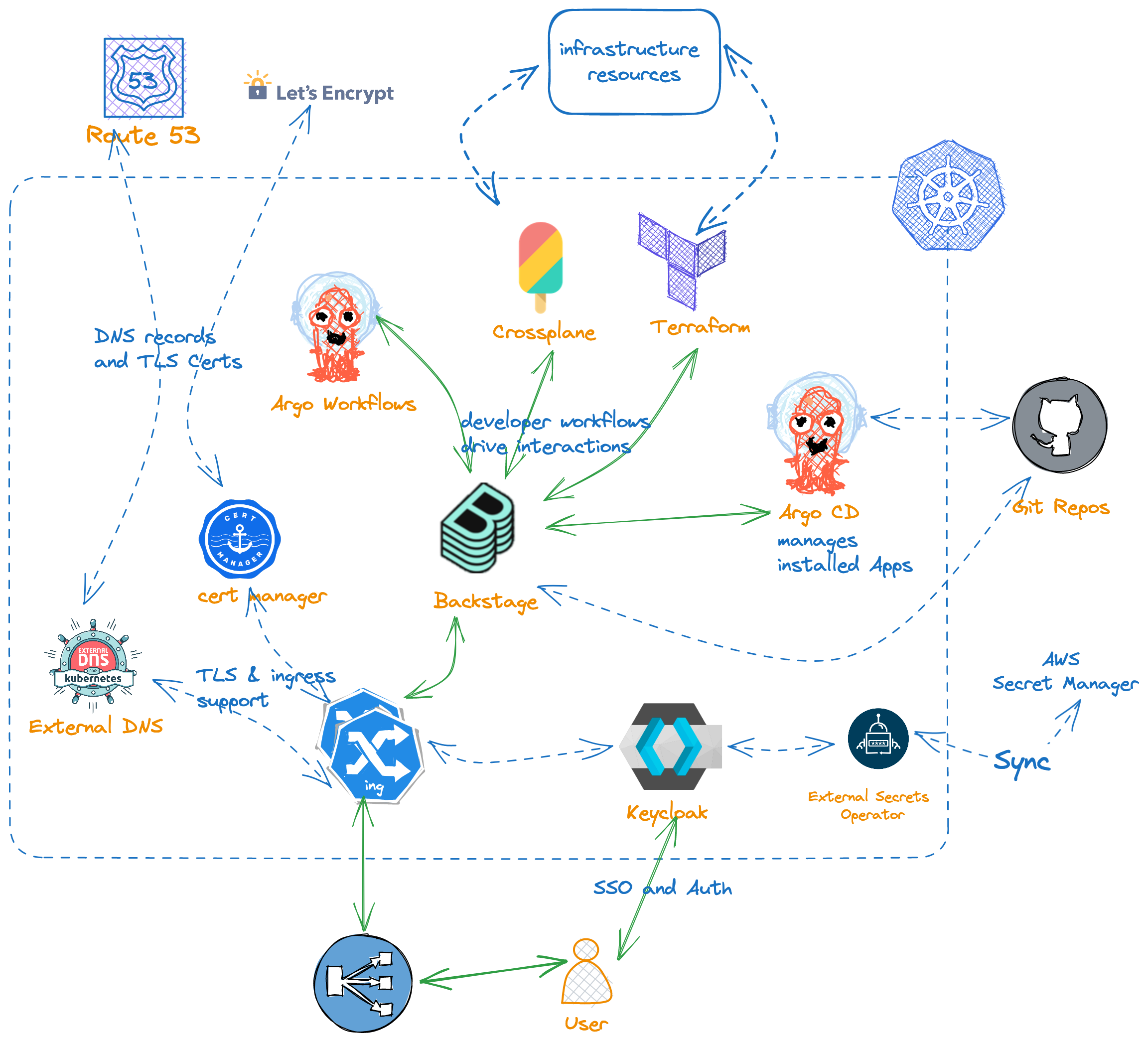

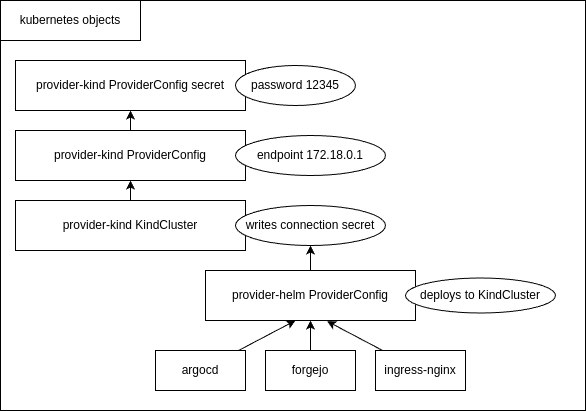

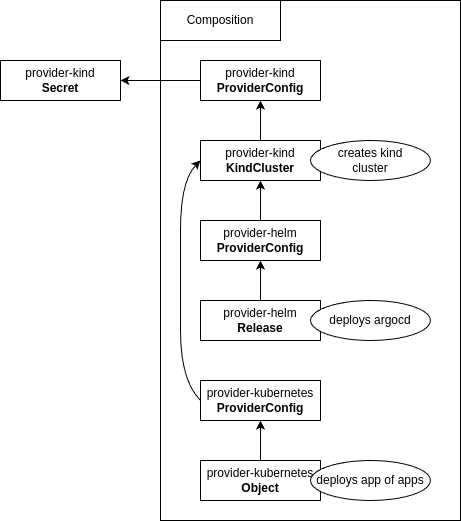

The next chart shows a system landscape of CNOE orchestration.

2024-04-PlatformEngineering-DevOpsDayRaleigh.pdf

Questions: What are the degrees of freedom in this chart? What variations with respect to environments and environmnent types exist?

CNOE in AWS

The next chart shows a context chart of CNOE orchestration.

Questions: What are the degrees of freedom in this chart? What variations with respect to environments and environmnent types exist?

2.2.3 - Tools

2.2.3.1 - Backstage

2.2.3.1.1 - Backstage Description

Backstage by Spotify can be seen as a Platform Portal. It is an open platform for building and managing internal developer tools, providing a unified interface for accessing various tools and resources within an organization.

Key Features of Backstage as a Platform Portal: Tool Integration:

Backstage allows for the integration of various tools used in the development process, such as CI/CD, version control systems, monitoring, and others, into a single interface. Service Management:

It offers the ability to register and manage services and microservices, as well as monitor their status and performance. Documentation and Learning Materials:

Backstage includes capabilities for storing and organizing documentation, making it easier for developers to access information. Golden Paths:

Backstage supports the concept of “Golden Paths,” enabling teams to follow recommended practices for development and tool usage. Modularity and Extensibility:

The platform allows for the creation of plugins, enabling users to customize and extend Backstage’s functionality to fit their organization’s needs. Backstage provides developers with centralized and convenient access to essential tools and resources, making it an effective solution for supporting Platform Engineering and developing an internal platform portal.

2.2.3.1.2 - Backstage Local Setup Tutorial

This document provides a comprehensive guide on the prerequisites and the process to set up and run Backstage locally on your machine.

Table of Contents

Prerequisites

Before you start, make sure you have the following installed on your machine:

Node.js: Backstage requires Node.js. You can download it from the Node.js website. It is recommended to use the LTS version.

Yarn: Backstage uses Yarn as its package manager. You can install it globally using npm:

npm install --global yarnGit

Docker

Setting Up Backstage

To install the Backstage Standalone app, you can use npx. npx is a tool that comes preinstalled with Node.js and lets you run commands straight from npm or other registries.

npx @backstage/create-app@latest

This command will create a new directory with a Backstage app inside. The wizard will ask you for the name of the app. This name will be created as sub directory in your current working directory.

Below is a simplified layout of the files and folders generated when creating an app.

app

├── app-config.yaml

├── catalog-info.yaml

├── package.json

└── packages

├── app

└── backend

- app-config.yaml: Main configuration file for the app. See Configuration for more information.

- catalog-info.yaml: Catalog Entities descriptors. See Descriptor Format of Catalog Entities to get started.

- package.json: Root package.json for the project. Note: Be sure that you don’t add any npm dependencies here as they probably should be installed in the intended workspace rather than in the root.

- packages/: Lerna leaf packages or “workspaces”. Everything here is going to be a separate package, managed by lerna.

- packages/app/: A fully functioning Backstage frontend app that acts as a good starting point for you to get to know Backstage.

- packages/backend/: We include a backend that helps power features such as Authentication, Software Catalog, Software Templates, and TechDocs, amongst other things.

Run the Backstage Application

You can run it in Backstage root directory by executing this command:

yarn dev

2.2.3.1.3 - Existing Backstage Plugins

Catalog:

- Used for managing services and microservices, including registration, visualization, and the ability to track dependencies and relationships between services. It serves as a central directory for all services in an organization.

Docs:

- Designed for creating and managing documentation, supporting formats such as Markdown. It helps teams organize and access technical and non-technical documentation in a unified interface.

API Docs:

- Automatically generates API documentation based on OpenAPI specifications or other API definitions, ensuring that your API information is always up to date and accessible for developers.

TechDocs:

- A tool for creating and publishing technical documentation. It is integrated directly into Backstage, allowing developers to host and maintain documentation alongside their projects.

Scaffolder:

- Allows the rapid creation of new projects based on predefined templates, making it easier to deploy services or infrastructure with consistent best practices.

CI/CD:

- Provides integration with CI/CD systems such as GitHub Actions and Jenkins, allowing developers to view build status, logs, and pipelines directly in Backstage.

Metrics:

- Offers the ability to monitor and visualize performance metrics for applications, helping teams to keep track of key indicators like response times and error rates.

Snyk:

- Used for dependency security analysis, scanning your codebase for vulnerabilities and helping to manage any potential security risks in third-party libraries.

SonarQube:

- Integrates with SonarQube to analyze code quality, providing insights into code health, including issues like technical debt, bugs, and security vulnerabilities.

GitHub:

- Enables integration with GitHub repositories, displaying information such as commits, pull requests, and other repository activity, making collaboration more transparent and efficient.

- CircleCI:

- Allows seamless integration with CircleCI for managing CI/CD workflows, giving developers insight into build pipelines, test results, and deployment statuses.

- Kubernetes:

- Provides tools to manage Kubernetes clusters, including visualizing pod status, logs, and cluster health, helping teams maintain and troubleshoot their cloud-native applications.

- Cloud:

- Includes plugins for integration with cloud providers like AWS and Azure, allowing teams to manage cloud infrastructure, services, and billing directly from Backstage.

- OpenTelemetry:

- Helps with monitoring distributed applications by integrating OpenTelemetry, offering powerful tools to trace requests, detect performance bottlenecks, and ensure application health.

- Lighthouse:

- Integrates Google Lighthouse to analyze web application performance, helping teams identify areas for improvement in metrics like load times, accessibility, and SEO.

2.2.3.1.4 - Plugin Creation Tutorial

Backstage plugins and functionality extensions should be writen in TypeScript/Node.js because backstage is written in those languages

General Algorithm for Adding a Plugin in Backstage

Create the Plugin

To create a plugin in the project structure, you need to run the following command at the root of Backstage:yarn new --select pluginThe wizard will ask you for the plugin ID, which will be its name. After that, a template for the plugin will be automatically created in the directory

plugins/{plugin id}. After this install all needed dependencies. After this install required dependencies. In example case this is"axios"for API requests

Emaple:yarn add axiosDefine the Plugin’s Functionality

In the newly created plugin directory, focus on defining the plugin’s core functionality. This is where you will create components that handle the logic and user interface (UI) of the plugin. Place these components in theplugins/{plugin_id}/src/components/folder, and if your plugin interacts with external data or APIs, manage those interactions within these components.Set Up Routes

In the main configuration file of your plugin (typicallyplugins/{plugin_id}/src/routs.ts), set up the routes. UsecreateRouteRef()to define route references, and link them to the appropriate components in yourplugins/{plugin_id}/src/components/folder. Each route will determine which component renders for specific parts of the plugin.Register the Plugin

Navigate to thepackages/appfolder and import your plugin into the main application. Register your plugin in theroutsarray withinpackages/app/src/App.tsxto integrate it into the Backstage system. It will create a rout for your’s plugin pageAdd Plugin to the Sidebar Menu

To make the plugin accessible through the Backstage sidebar, modify the sidebar component inpackages/app/src/components/Root.tsx. Add a new sidebar item linked to your plugin’s route reference, allowing users to easily access the plugin through the menu.Test the Plugin

Run the Backstage development server usingyarn devand navigate to your plugin’s route via the sidebar or directly through its URL. Ensure that the plugin’s functionality works as expected.

Example

All steps will be demonstrated using a simple example plugin, which will request JSON files from the API of jsonplaceholder.typicode.com and display them on a page.

Creating test-plugin:

yarn new --select pluginAdding required dependencies. In this case only “axios” is needed for API requests

yarn add axiosImplement code of the plugin component in

plugins/{plugin-id}/src/{Component name}/{filename}.tsximport React, { useState } from 'react'; import axios from 'axios'; import { Typography, Grid } from '@material-ui/core'; import { InfoCard, Header, Page, Content, ContentHeader, SupportButton, } from '@backstage/core-components'; export const TestComponent = () => { const [posts, setPosts] = useState<any[]>([]); const [loading, setLoading] = useState(false); const [error, setError] = useState<string | null>(null); const fetchPosts = async () => { setLoading(true); setError(null); try { const response = await axios.get('https://jsonplaceholder.typicode.com/posts'); setPosts(response.data); } catch (err) { setError('Ошибка при получении постов'); } finally { setLoading(false); } }; return ( <Page themeId="tool"> <Header title="Welcome to the Test Plugin!" subtitle="This is a subtitle"> <SupportButton>A description of your plugin goes here.</SupportButton> </Header> <Content> <ContentHeader title="Posts Section"> <SupportButton> Click to load posts from the API. </SupportButton> </ContentHeader> <Grid container spacing={3} direction="column"> <Grid item> <InfoCard title="Information Card"> <Typography variant="body1"> This card contains information about the posts fetched from the API. </Typography> {loading && <Typography>Загрузка...</Typography>} {error && <Typography color="error">{error}</Typography>} {!loading && !posts.length && ( <button onClick={fetchPosts}>Request Posts</button> )} </InfoCard> </Grid> <Grid item> {posts.length > 0 && ( <InfoCard title="Fetched Posts"> <ul> {posts.map(post => ( <li key={post.id}> <Typography variant="h6">{post.title}</Typography> <Typography>{post.body}</Typography> </li> ))} </ul> </InfoCard> )} </Grid> </Grid> </Content> </Page> ); };Setup routs in plugins/{plugin_id}/src/routs.ts

import { createRouteRef } from '@backstage/core-plugin-api';

export const rootRouteRef = createRouteRef({

id: 'test-plugin',

});

- Register the plugin in

packages/app/src/App.tsxin routes Import of the plugin:

import { TestPluginPage } from '@internal/backstage-plugin-test-plugin';

Adding route:

const routes = (

<FlatRoutes>

... //{Other Routs}

<Route path="/test-plugin" element={<TestPluginPage />} />

</FlatRoutes>

)

- Add Item to sidebar menu of the backstage in

packages/app/src/components/Root/Root.tsx. This should be added in to Root object as another SidebarItem

export const Root = ({ children }: PropsWithChildren<{}>) => (

<SidebarPage>

<Sidebar>

... //{Other sidebar items}

<SidebarItem icon={ExtensionIcon} to="/test-plugin" text="Test Plugin" />

</Sidebar>

{children}

</SidebarPage>

);

- Plugin is ready. Run the application

yarn dev

2.2.3.2 - CNOE

2.2.3.2.1 - Analysis of CNOE competitors

Kratix

Kratix is a Kubernetes-native framework that helps platform engineering teams automate the provisioning and management of infrastructure and services through custom-defined abstractions called Promises. It allows teams to extend Kubernetes functionality and provide resources in a self-service manner to developers, streamlining the delivery and management of workloads across environments.

Concepts

Key concepts of Kratix:

- Workload: This is an abstraction representing any application or service that needs to be deployed within the infrastructure. It defines the requirements and dependent resources necessary to execute this task.

- Promise: A “Promise” is a ready-to-use infrastructure or service package. Promises allow developers to request specific resources (such as databases, storage, or computing power) through the standard Kubernetes interface. It’s similar to an operator in Kubernetes but more universal and flexible. Kratix simplifies the development and delivery of applications by automating the provisioning and management of infrastructure and resources through simple Kubernetes APIs.

Pros of Kratix

Resource provisioning automation. Kratix simplifies infrastructure creation for developers through the abstraction of “Promises.” This means developers can simply request the necessary resources (like databases, message queues) without dealing with the intricacies of infrastructure management.

Flexibility and adaptability. Platform teams can customize and adapt Kratix to specific needs by creating custom Promises for various services, allowing the infrastructure to meet the specific requirements of the organization.

Unified resource request interface. Developers can use a single API (Kubernetes) to request resources, simplifying interaction with infrastructure and reducing complexity when working with different tools and systems.

Cons of Kratix

Although Kratix offers great flexibility, it can also lead to more complex setup and platform management processes. Creating custom Promises and configuring their behavior requires time and effort.

Kubernetes dependency. Kratix relies on Kubernetes, which makes it less applicable in environments that don’t use Kubernetes or containerization technologies. It might also lead to integration challenges if an organization uses other solutions.

Limited ecosystem. Kratix doesn’t have as mature an ecosystem as some other infrastructure management solutions (e.g., Terraform, Pulumi). This may limit the availability of ready-made solutions and tools, increasing the amount of manual work when implementing Kratix.

Humanitec

Humanitec is an Internal Developer Platform (IDP) that helps platform engineering teams automate the provisioning and management of infrastructure and services through dynamic configuration and environment management.

It allows teams to extend their infrastructure capabilities and provide resources in a self-service manner to developers, streamlining the deployment and management of workloads across various environments.

Concepts

Key concepts of Humanitec:

Application Definition:

This is an abstraction where developers define their application, including its services, environments, a dependencies. It abstracts away infrastructure details, allowing developers to focus on building and deploying their applications.Dynamic Configuration Management:

Humanitec automatically manages the configuration of applications and services across multiple environments (e.g., development, staging, production). It ensures consistency and alignment of configurations as applications move through different stages of deployment.

Humanitec simplifies the development and delivery process by providing self-service deployment options while maintaining centralized governance and control for platform teams.

Pros of Humanitec

Resource provisioning automation. Humanitec automates infrastructure and environment provisioning, allowing developers to focus on building and deploying applications without worrying about manual configuration.

Dynamic environment management. Humanitec manages application configurations across different environments, ensuring consistency and reducing manual configuration errors.

Golden Paths. best-practice workflows and processes that guide developers through infrastructure provisioning and application deployment. This ensures consistency and reduces cognitive load by providing a set of recommended practices.

Unified resource management interface. Developers can use Humanitec’s interface to request resources and deploy applications, reducing complexity and improving the development workflow.

Cons of Humanitec

Humanitec is commercially licensed software

Integration challenges. Humanitec’s dependency on specific cloud-native environments can create challenges for organizations with diverse infrastructures or those using legacy systems.

Cost. Depending on usage, Humanitec might introduce additional costs related to the implementation of an Internal Developer Platform, especially for smaller teams.

Harder to customise

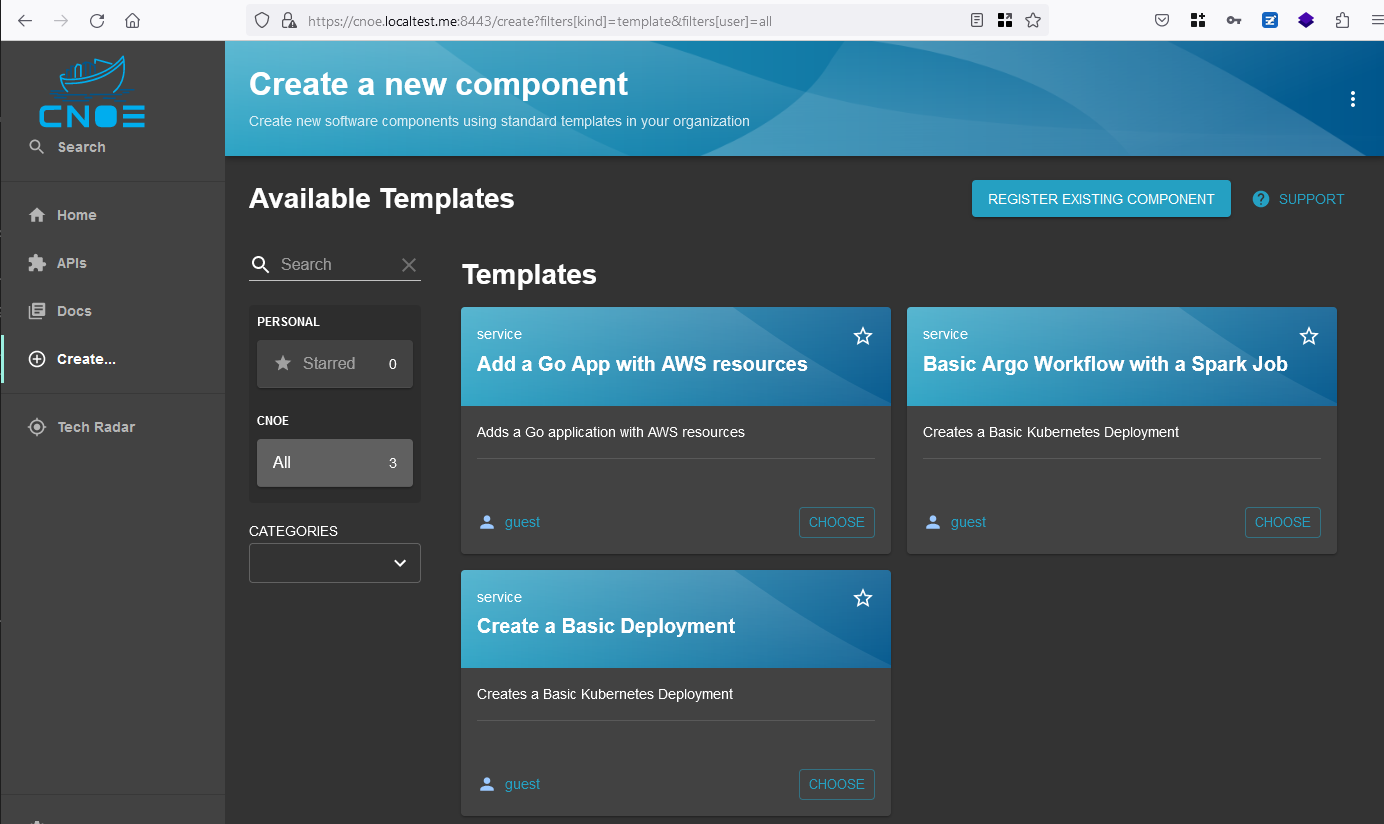

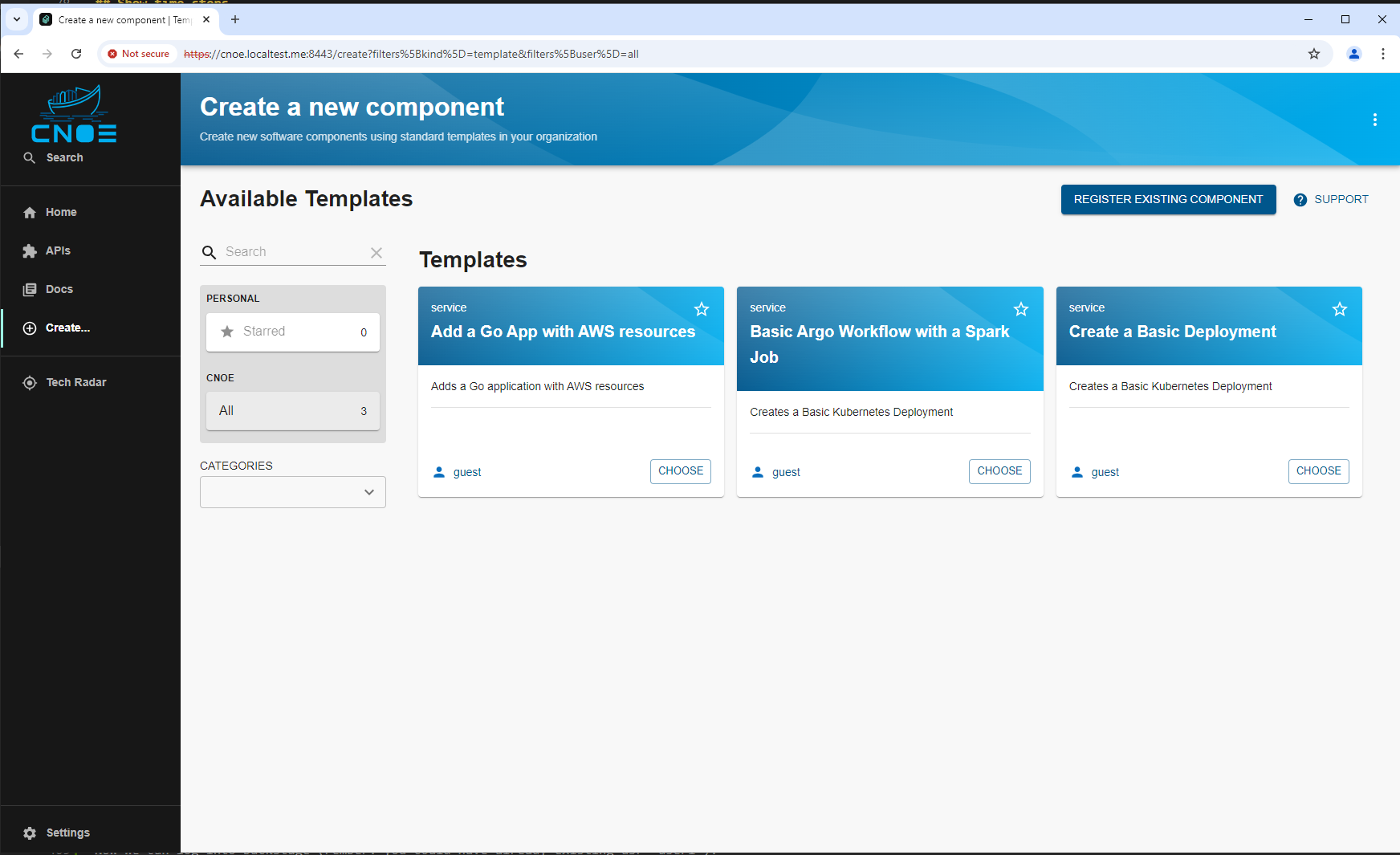

2.2.3.2.2 - Included Backstage Templates

2.2.3.2.2.1 - Template for basic Argo Workflow

Backstage Template for Basic Argo Workflow with Spark Job

This Backstage template YAML automates the creation of an Argo Workflow for Kubernetes that includes a basic Spark job, providing a convenient way to configure and deploy workflows involving data processing or machine learning jobs. Users can define key parameters, such as the application name and the path to the main Spark application file. The template creates necessary Kubernetes resources, publishes the application code to a Gitea Git repository, registers the application in the Backstage catalog, and deploys it via ArgoCD for easy CI/CD management.

Use Case

This template is designed for teams that need a streamlined approach to deploy and manage data processing or machine learning jobs using Spark within an Argo Workflow environment. It simplifies the deployment process and integrates the application with a CI/CD pipeline. The template performs the following:

- Workflow and Spark Job Setup: Defines a basic Argo Workflow and configures a Spark job using the provided application file path, ideal for data processing tasks.

- Repository Setup: Publishes the workflow configuration to a Gitea repository, enabling version control and easy updates to the job configuration.

- ArgoCD Integration: Creates an ArgoCD application to manage the Spark job deployment, ensuring continuous delivery and synchronization with Kubernetes.

- Backstage Registration: Registers the application in Backstage, making it easily discoverable and manageable through the Backstage catalog.

This template boosts productivity by automating steps required for setting up Argo Workflows and Spark jobs, integrating version control, and enabling centralized management and visibility, making it ideal for projects requiring efficient deployment and scalable data processing solutions.

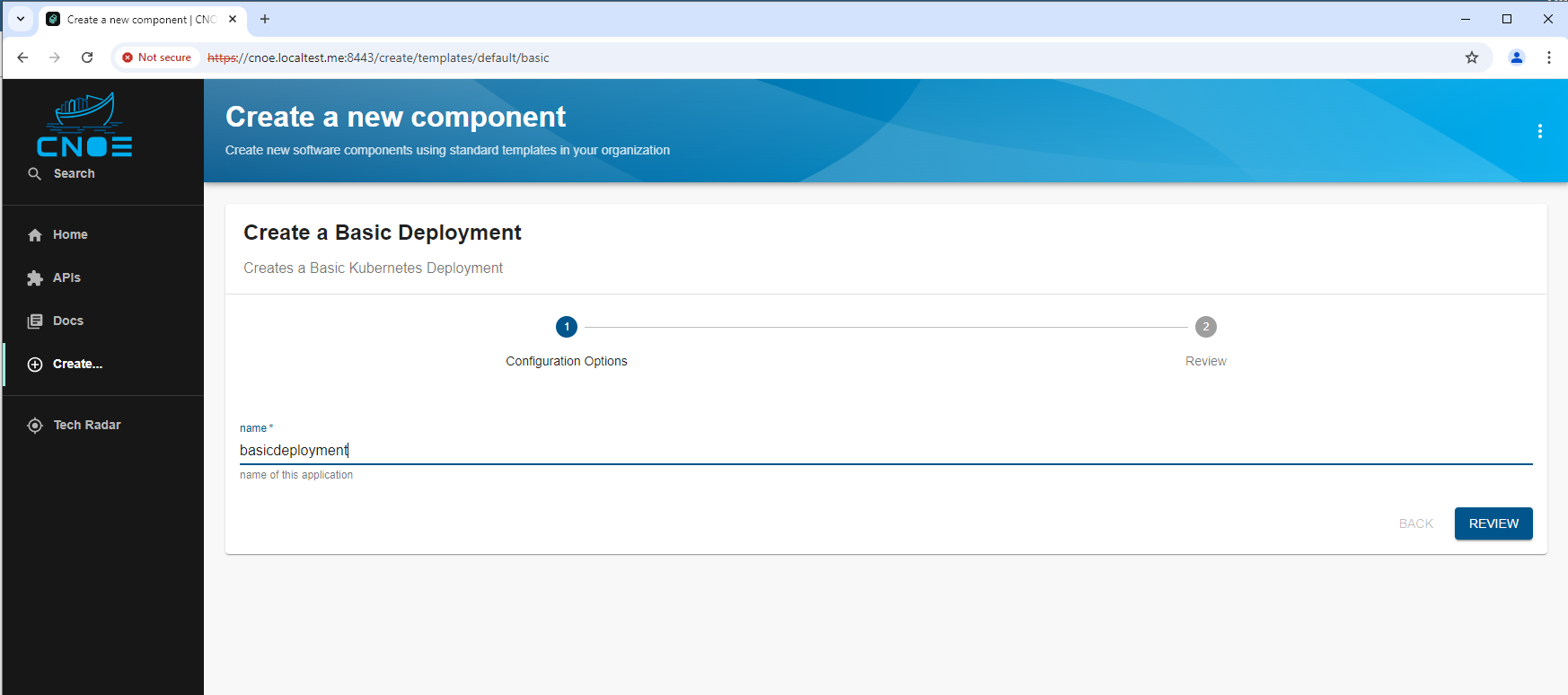

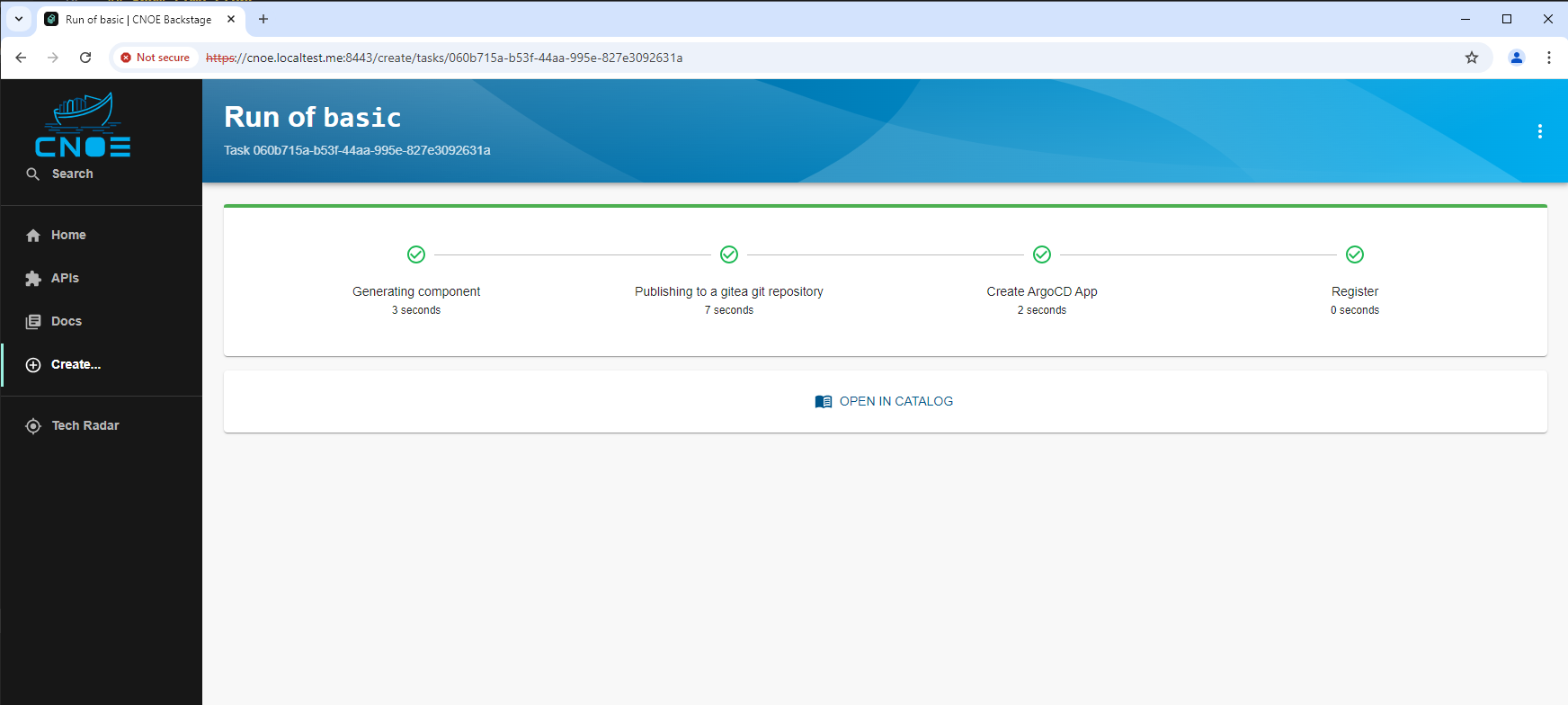

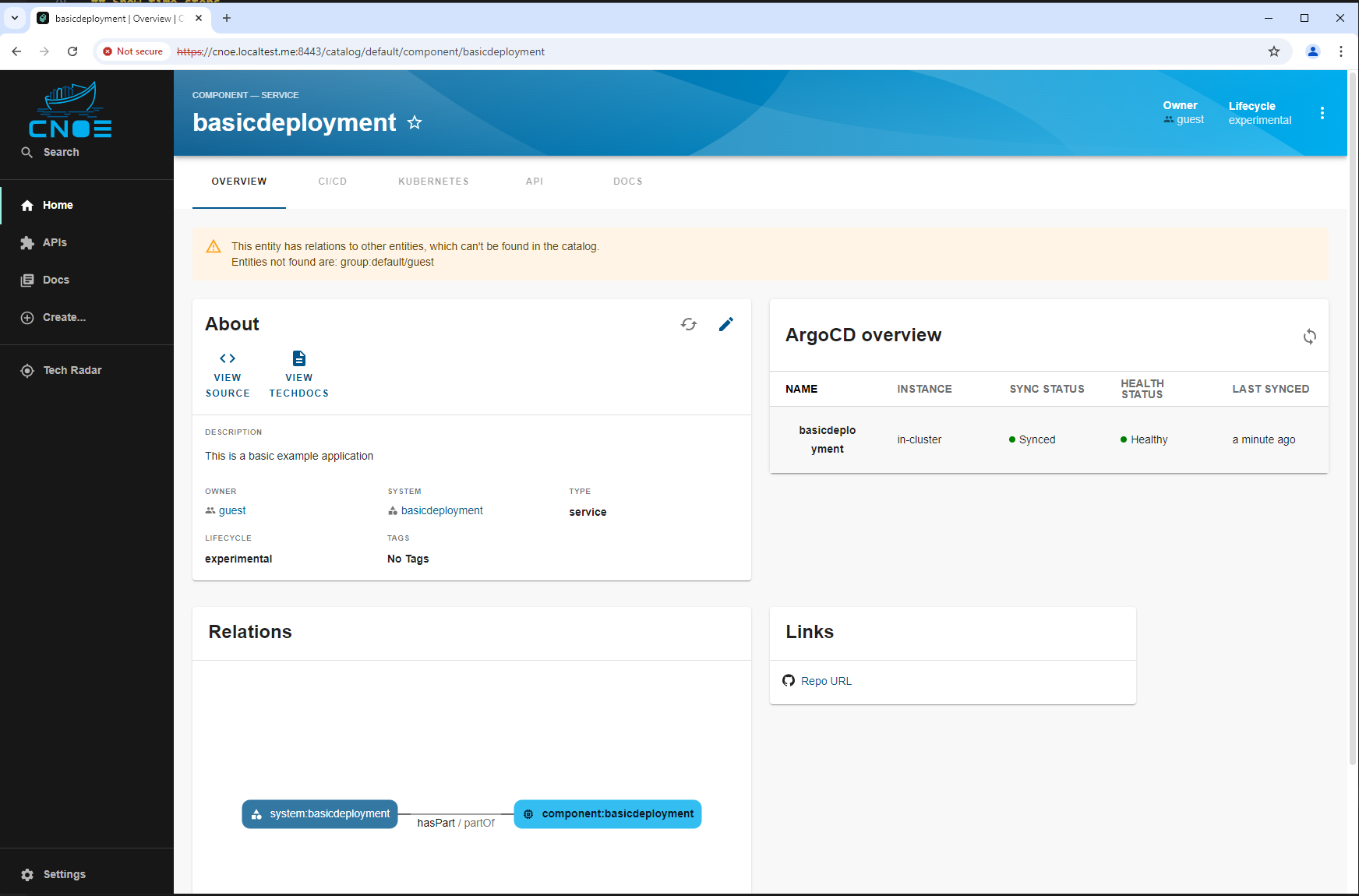

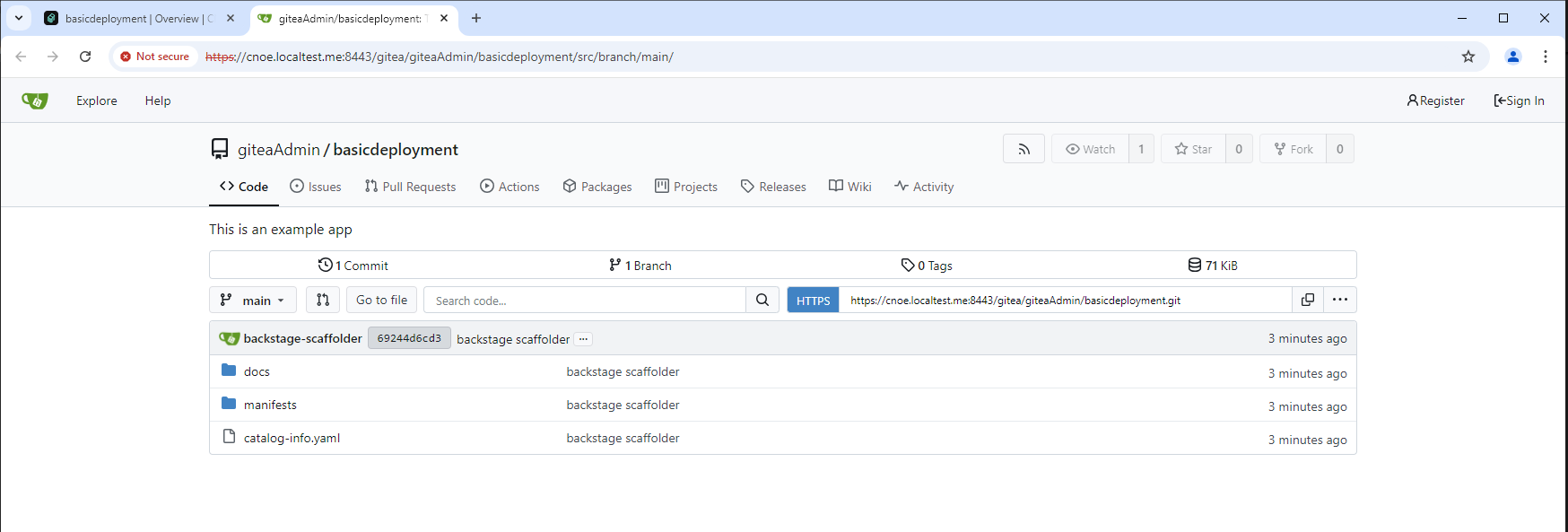

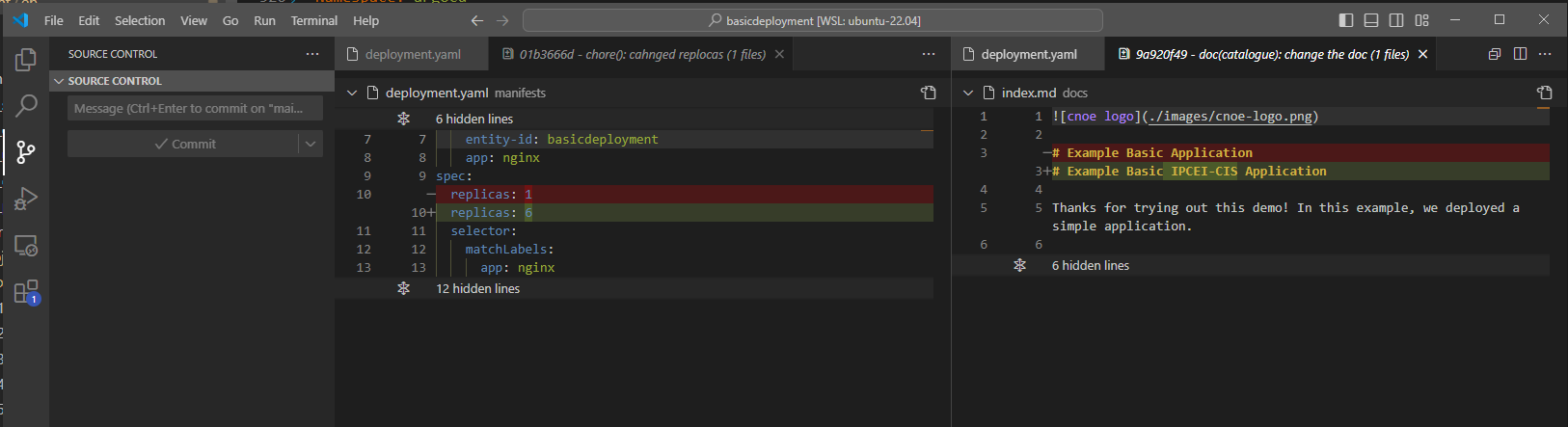

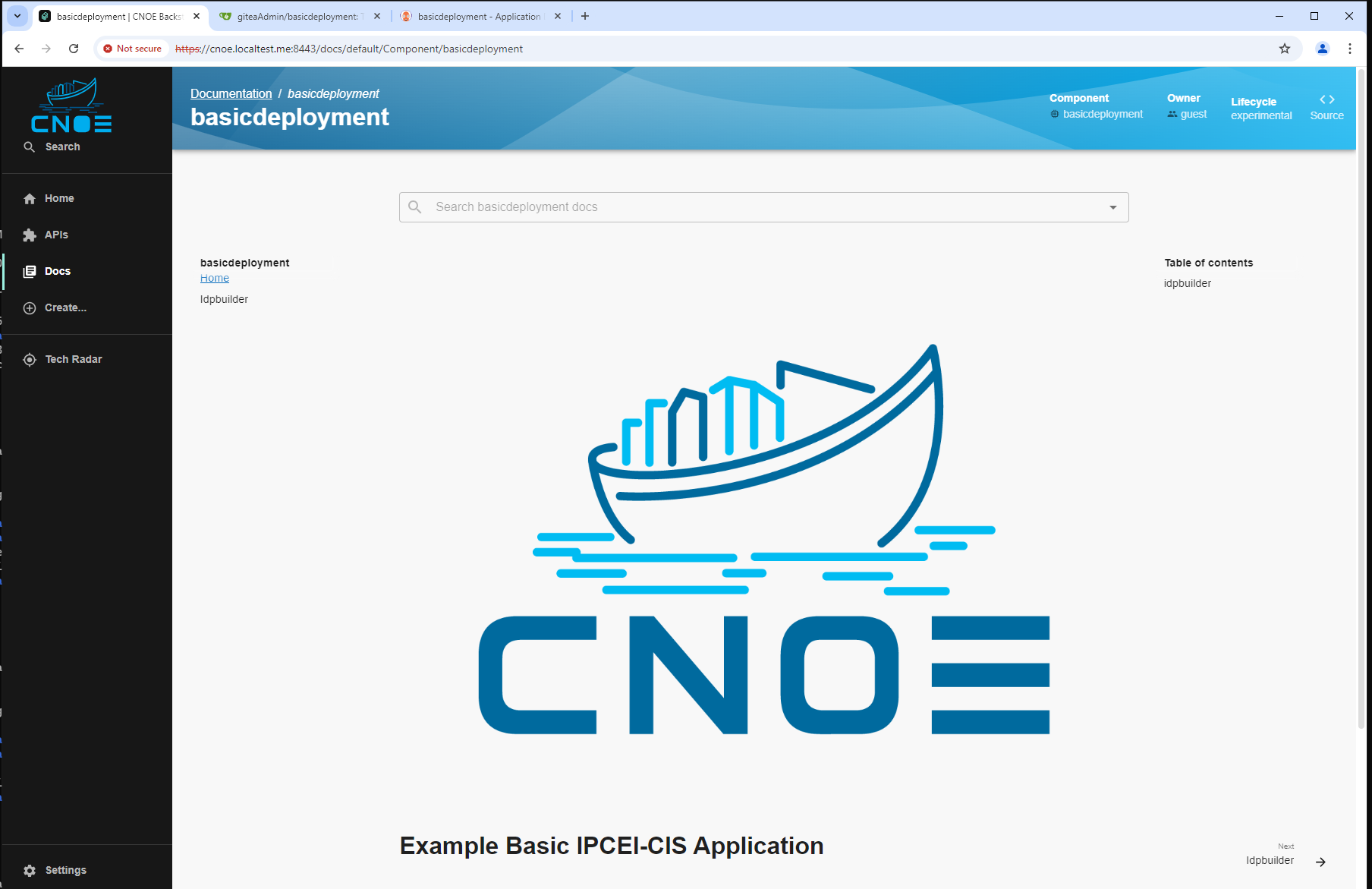

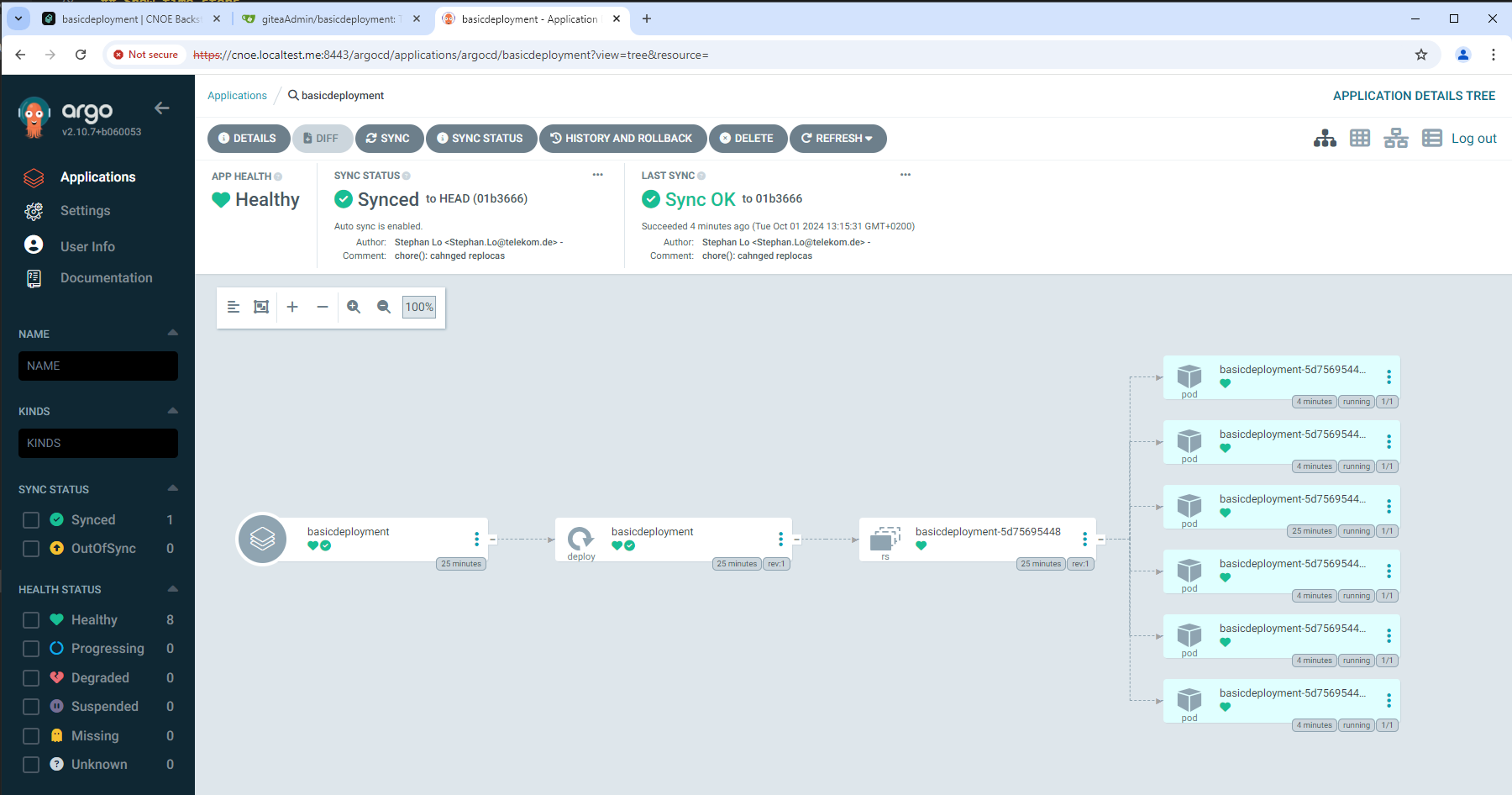

2.2.3.2.2.2 - Template for basic kubernetes deployment

Backstage Template for Kubernetes Deployment

This Backstage template YAML automates the creation of a basic Kubernetes Deployment, aimed at simplifying the deployment and management of applications in Kubernetes for the user. The template allows users to define essential parameters, such as the application’s name, and then creates and configures the Kubernetes resources, publishes the application code to a Gitea Git repository, and registers the application in the Backstage catalog for tracking and management.

Use Case

The template is designed for teams needing a streamlined approach to deploy applications in Kubernetes while automatically configuring their CI/CD pipelines. It performs the following:

- Deployment Creation: A Kubernetes Deployment YAML is generated based on the provided application name, specifying a basic setup with an Nginx container.

- Repository Setup: Publishes the deployment code in a Gitea repository, allowing for version control and future updates.

- ArgoCD Integration: Automatically creates an ArgoCD application for the deployment, facilitating continuous delivery and synchronization with Kubernetes.

- Backstage Registration: Registers the application in Backstage to make it discoverable and manageable via the Backstage catalog.

This template enhances productivity by automating several steps required for deployment, version control, and registration, making it ideal for projects where fast, consistent deployment and centralized management are required.

2.2.3.2.3 - idpbuilder

2.2.3.2.3.1 - Installation of idpbuilder

Local installation with KIND Kubernetes

The idpbuilder uses KIND as Kubernetes cluster. It is suggested to use a virtual machine for the installation. MMS Linux clients are unable to execute KIND natively on the local machine because of network problems. Pods for example can’t connect to the internet.

Windows and Mac users already utilize a virtual machine for the Docker Linux environment.

Prerequisites

- Docker Engine

- Go

- kubectl

- kind

Build process

For building idpbuilder the source code needs to be downloaded and compiled:

git clone https://github.com/cnoe-io/idpbuilder.git

cd idpbuilder

go build

The idpbuilder binary will be created in the current directory.

Start idpbuilder

To start the idpbuilder binary execute the following command:

./idpbuilder create --use-path-routing --log-level debug --package https://github.com/cnoe-io/stacks//ref-implementation

Logging into ArgoCD

At the end of the idpbuilder execution a link of the installed ArgoCD is shown. The credentianls for access can be obtained by executing:

./idpbuilder get secrets

Logging into KIND

A Kubernetes config is created in the default location $HOME/.kube/config. A management of the Kubernetes config is recommended to not unintentionally delete acces to other clusters like the OSC.

To show all running KIND nodes execute:

kubectl get nodes -o wide

To see all running pods:

kubectl get pods -o wide

Next steps

Follow this documentation: https://github.com/cnoe-io/stacks/tree/main/ref-implementation

Delete the idpbuilder KIND cluster

The cluster can be deleted by executing:

idpbuilder delete cluster

Remote installation into a bare metal Kubernetes instance

CNOE provides two implementations of an IDP:

- Amazon AWS implementation

- KIND implementation

Both are not useable to run on bare metal or an OSC instance. The Amazon implementation is complex and makes use of Terraform which is currently not supported by either base metal or OSC. Therefore the KIND implementation is used and customized to support the idpbuilder installation. The idpbuilder is also doing some network magic which needs to be replicated.

Several prerequisites have to be provided to support the idpbuilder on bare metal or the OSC:

- Kubernetes dependencies

- Network dependencies

- Changes to the idpbuilder

Prerequisites

Talos Linux is choosen for a bare metal Kubernetes instance.

- talosctl

- Go

- Docker Engine

- kubectl

- kustomize

- helm

- nginx

As soon as the idpbuilder works correctly on bare metal, the next step is to apply it to an OSC instance.

Add *.cnoe.localtest.me to hosts file

Append this lines to /etc/hosts

127.0.0.1 gitea.cnoe.localtest.me

127.0.0.1 cnoe.localtest.me

Install nginx and configure it

Install nginx by executing:

sudo apt install nginx

Replace /etc/nginx/sites-enabled/default with the following content:

server {

listen 8443 ssl default_server;

listen [::]:8443 ssl default_server;

include snippets/snakeoil.conf;

location / {

proxy_pass http://10.5.0.20:80;

proxy_http_version 1.1;

proxy_cache_bypass $http_upgrade;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-Host $host;

proxy_set_header X-Forwarded-Proto $scheme;

}

}

Start nginx by executing:

sudo systemctl enable nginx

sudo systemctl restart nginx

Building idpbuilder

For building idpbuilder the source code needs to be downloaded and compiled:

git clone https://github.com/cnoe-io/idpbuilder.git

cd idpbuilder

go build

The idpbuilder binary will be created in the current directory.

Configure VS Code launch settings

Open the idpbuilder folder in VS Code:

code .

Create a new launch setting. Add the "args" parameter to the launch setting:

{

"version": "0.2.0",

"configurations": [

{

"name": "Launch Package",

"type": "go",

"request": "launch",

"mode": "auto",

"program": "${fileDirname}",

"args": ["create", "--use-path-routing", "--package", "https://github.com/cnoe-io/stacks//ref-implementation"]

}

]

}

Create the Talos bare metal Kubernetes instance

Talos by default will create docker containers, similar to KIND. Create the cluster by executing:

talosctl cluster create

Install local path privisioning (storage)

mkdir -p localpathprovisioning

cd localpathprovisioning

cat > localpathprovisioning.yaml <<EOF

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

- github.com/rancher/local-path-provisioner/deploy?ref=v0.0.26

patches:

- patch: |-

kind: ConfigMap

apiVersion: v1

metadata:

name: local-path-config

namespace: local-path-storage

data:

config.json: |-

{

"nodePathMap":[

{

"node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",

"paths":["/var/local-path-provisioner"]

}

]

}

- patch: |-

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: local-path

annotations:

storageclass.kubernetes.io/is-default-class: "true"

- patch: |-

apiVersion: v1

kind: Namespace

metadata:

name: local-path-storage

labels:

pod-security.kubernetes.io/enforce: privileged

EOF

kustomize build | kubectl apply -f -

rm localpathprovisioning.yaml kustomization.yaml

cd ..

rmdir localpathprovisioning

Install an external load balancer

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.14.8/config/manifests/metallb-native.yaml

sleep 50

cat <<EOF | kubectl apply -f -

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

namespace: metallb-system

spec:

addresses:

- 10.5.0.20-10.5.0.130

EOF

cat <<EOF | kubectl apply -f -

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: homelab-l2

namespace: metallb-system

spec:

ipAddressPools:

- first-pool

EOF

Install an ingress controller which uses the external load balancer

helm upgrade --install ingress-nginx ingress-nginx \

--repo https://kubernetes.github.io/ingress-nginx \

--namespace ingress-nginx --create-namespace

sleep 30

Execute idpbuilder

Modify the idpbuilder source code

Edit the function Run in pkg/build/build.go and comment out the creation of the KIND cluster:

/*setupLog.Info("Creating kind cluster")

if err := b.ReconcileKindCluster(ctx, recreateCluster); err != nil {

return err

}*/

Compile the idpbuilder

go build

Start idpbuilder

Then, in VS Code, switch to main.go in the root directory of the idpbuilder and start debugging.

Logging into ArgoCD

At the end of the idpbuilder execution a link of the installed ArgoCD is shown. The credentianls for access can be obtained by executing:

./idpbuilder get secrets

Logging into Talos cluster

A Kubernetes config is created in the default location $HOME/.kube/config. A management of the Kubernetes config is recommended to not unintentionally delete acces to other clusters like the OSC.

To show all running Talos nodes execute:

kubectl get nodes -o wide

To see all running pods:

kubectl get pods -o wide

Delete the idpbuilder Talos cluster

The cluster can be deleted by executing:

talosctl cluster destroy

TODO’s for running idpbuilder on bare metal or OSC

Required:

Add *.cnoe.localtest.me to the Talos cluster DNS, pointing to the host device IP address, which runs nginx.

Create a SSL certificate with

cnoe.localtest.meas common name. Edit the nginx config to load this certificate. Configure idpbuilder to distribute this certificate instead of the one idpbuilder distributes by idefault.

Optimizations:

Implement an idpbuilder uninstall. This is specially important when working on the OSC instance.

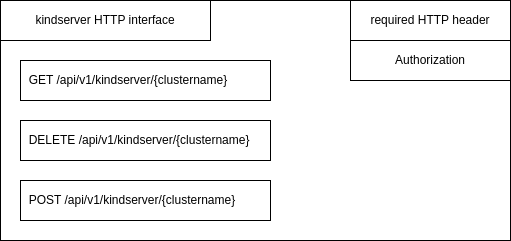

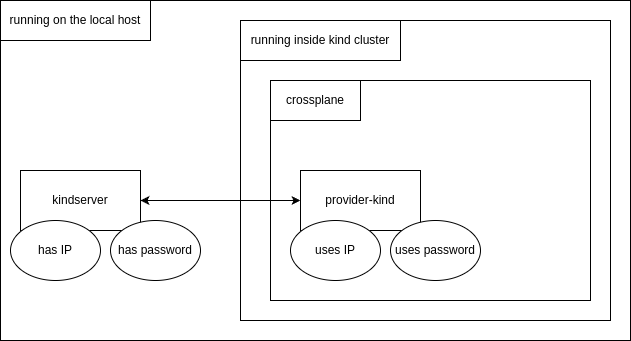

Remove or configure gitea.cnoe.localtest.me, it seems not to work even in the idpbuilder local installation with KIND.